Note: This article is based on Hailo AI Software Suite 2024-04.

Introduction

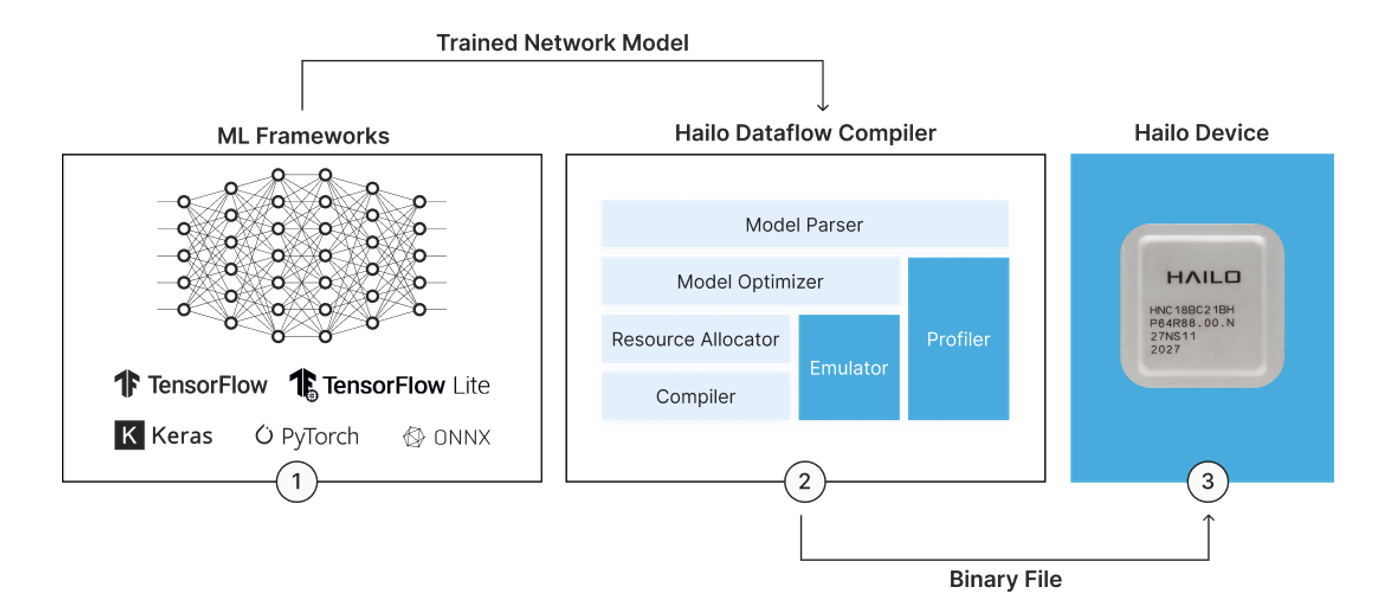

The Dataflow Compiler is part of the AI Software Suite. It is a tool that optimizes trained network models, such as ONNX files, for Hailo devices.

Please refer to the following link for instructions on how to install the AI Software Suite.

①Hardware and installation requirements for AI Software Suite

The Dataflow Compiler has three main stages: Parser, Optimizer, and Allocator/Compiler. A Jupyter notebook is provided as a tutorial for each stage, and this article walks through those notebooks.

You can customize these tutorials for your own design, but I think it is more convenient to combine the steps into a single Python script. I have also published an article explaining how to use such a script, so please check that out too.

[Tried it] I tried running the open source ONNX model with Hailo

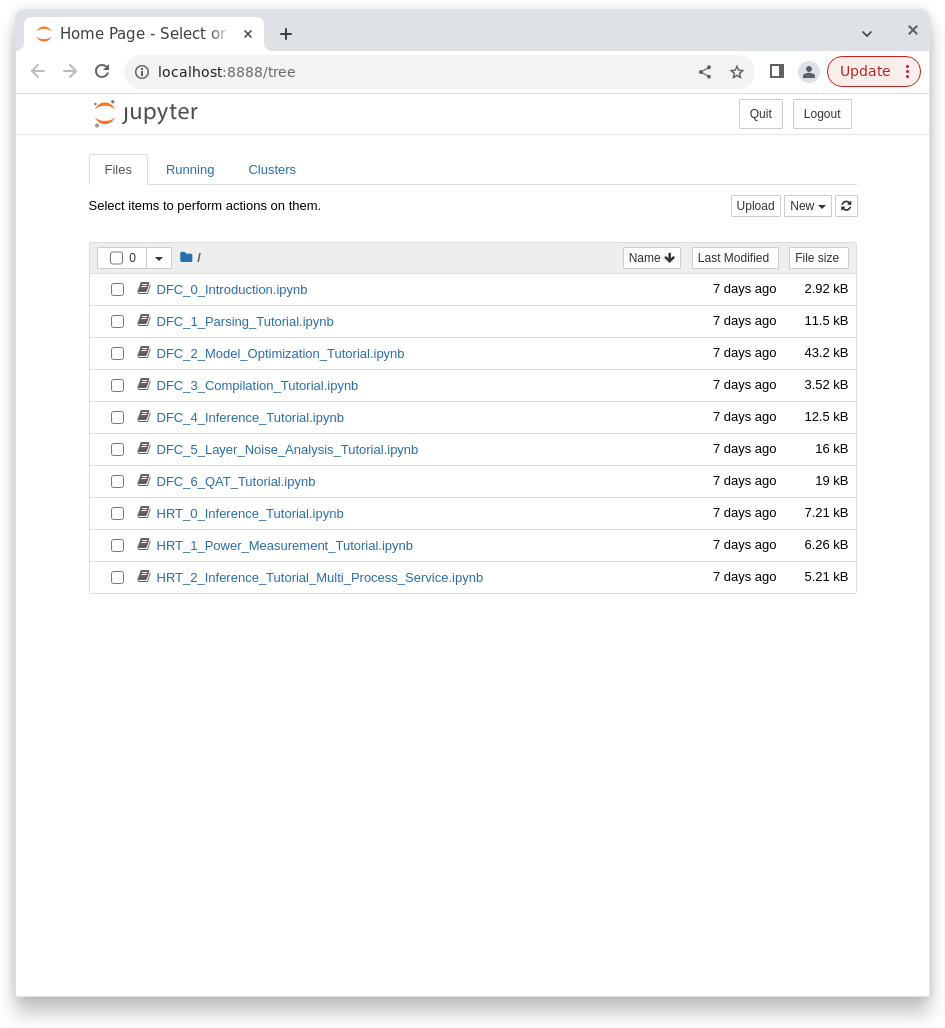

To start the tutorial, run the following command in the AI Software Suite:

$ hailo tutorial

Jupyter Notebook will open in your browser.

The following sections explain the Parser, Optimizer, and Allocator/Compiler in turn.

Each stage corresponds to one of the following notebooks:

Parser --- DFC_1_Parsing_Tutorial.ipynb

Optimizer --- DFC_2_Model_Optimization_Tutorial.ipynb

Allocator/Compiler --- DFC_3_Compilation_Tutorial.ipynb

Parser

Click DFC_1_Parsing_Tutorial.ipynb to open the notebook for the parser.

The notebook contains its own explanations, but I will go through each cell here.

Notebook explanation

The first cell imports the libraries for the Dataflow Compiler.

The next cell describes the model to be converted.

model_name: Any name you like

ckpt_path: The path to the AI model (in this case, the ckpt file)

start_node: The first node of the part to be offloaded to Hailo

end_node: The last node of the part to be offloaded to Hailo

In other words, the part of the model from start_node to end_node is what gets optimized.

chosen_hw_arch: The target hardware
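As a rough sketch, the parameter cell might look like the following. The `resnet_v1_18` model name matches the HAR file name that appears later in this tutorial; the checkpoint path, the node names, and the `hailo8` target are assumptions for illustration and should be replaced with the values for your own model.

```python
# Sketch of the parameter cell. Only model_name is taken from this article;
# every other value is an assumption for illustration.
model_name = "resnet_v1_18"                       # any name you like
ckpt_path = "../models/resnet_v1_18.ckpt"         # assumed location of the ckpt file
start_node = "resnet_v1_18/conv1/Pad"             # assumed first node to offload
end_node = "resnet_v1_18/predictions/Softmax"     # assumed last node to offload
chosen_hw_arch = "hailo8"                         # assumed target hardware

# File name pattern used when the parsed model is saved later on.
hailo_model_har_name = f"{model_name}_hailo_model.har"
print(hailo_model_har_name)
```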

This is the cell that performs the actual parsing.

It converts the ckpt file to Hailo's own format.

Running this cell is not required, but it will create a HAR file (Hailo ARchive file).

By creating an archive file that saves the state up to this point, you can execute subsequent tasks from the archive file without having to parse again.

In this tutorial, the HAR file is saved to the following path:

/local/workspace/hailo_virtualenv/lib/python3.8/site-packages/hailo_tutorials/notebooks/resnet_v1_18_hailo_model.har
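The parse and save steps described above can be sketched as a single function. This is a minimal sketch that assumes the `ClientRunner` class from `hailo_sdk_client` with its `translate_tf_model` and `save_har` methods; the function name `parse_ckpt_to_har` is hypothetical, and the import is placed inside the function so the file can be read without the Suite installed.

```python
def parse_ckpt_to_har(model_name, ckpt_path, start_node, end_node, hw_arch):
    """Parse a TensorFlow checkpoint and save the parsed state as a HAR file."""
    # Only available inside the AI Software Suite environment.
    from hailo_sdk_client import ClientRunner

    runner = ClientRunner(hw_arch=hw_arch)

    # Convert the section between start_node and end_node to Hailo's format.
    runner.translate_tf_model(
        ckpt_path,
        model_name,
        start_node_names=[start_node],
        end_node_names=[end_node],
    )

    # Optional: archive the parsed state so later stages need not parse again.
    har_name = f"{model_name}_hailo_model.har"
    runner.save_har(har_name)
    return har_name
```

Calling it with the parameters from the earlier cell would write `resnet_v1_18_hailo_model.har` to the current working directory.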

By the way, this HAR file can also be viewed with Netron.

Running this cell is also optional.

It visualizes the state after parsing, so you can check whether it differs from the input model.

Parsing is now complete.

The rest of the parsing notebook covers how to convert from other formats (such as ONNX files and pb files) and how to convert to TensorFlow Lite, so please refer to it as necessary.

Tips

The most common causes of errors when parsing are "using a function that Hailo does not support" and "using a supported function in an unsupported way."

Regarding "using a function that Hailo does not support," please refer to the Hailo Dataflow Compiler User Guide for the list of supported functions. If your model uses an unsupported function, please replace it, or contact us so that Hailo can consider adding support in the future.

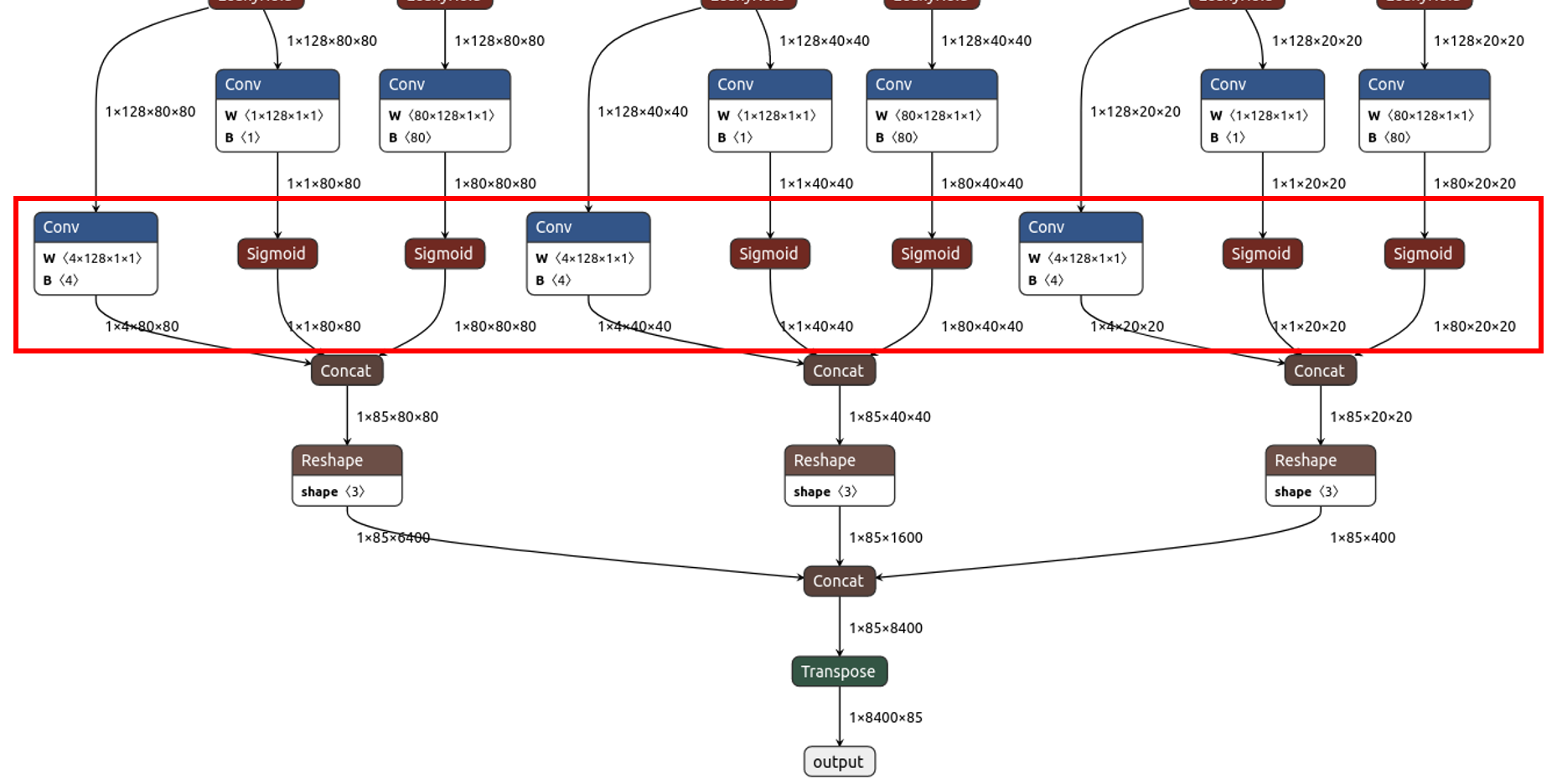

Regarding "using a supported function in an unsupported way," the usage and restrictions of each function are described in the Hailo Dataflow Compiler User Guide. A particularly common case is using Reshape or a similar function at the end of a model to merge everything into a single output. Below is the final stage of yolox_s, where the outputs are merged in this way.

Although Reshape itself is supported, the Hailo Dataflow Compiler User Guide lists it as supported "Between Conv and Dense layers (in both directions)", so its use in the final stage above is not supported. Parsing the whole model therefore results in an error, but you can avoid the error by specifying the nodes enclosed in the red frame above as the end_node.
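To illustrate the end_node workaround for an ONNX model, here is a minimal sketch that assumes `ClientRunner.translate_onnx_model` and its `end_node_names` argument. The node names in `YOLOX_END_NODES` are hypothetical placeholders, not the real yolox_s names; use the names shown for your model in a viewer such as Netron. The function name `parse_onnx_until` is also hypothetical.

```python
# Hypothetical placeholders for the nodes just before the unsupported Reshape.
YOLOX_END_NODES = ["head_conv_a", "head_conv_b", "head_conv_c"]


def parse_onnx_until(onnx_path, model_name, end_nodes, hw_arch="hailo8"):
    """Parse an ONNX model, stopping at the given end nodes."""
    # Only available inside the AI Software Suite environment.
    from hailo_sdk_client import ClientRunner

    runner = ClientRunner(hw_arch=hw_arch)
    # Everything after the end nodes is left out of the Hailo model and
    # has to be handled on the host side instead.
    runner.translate_onnx_model(
        onnx_path,
        model_name,
        end_node_names=list(end_nodes),
    )
    return runner
```

Because the model now ends before the Reshape, it has several outputs instead of one, and the merging step has to be done in post-processing.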

Optimizer

DFC_2_Model_Optimization_Tutorial.ipynb is the notebook for the Optimizer.

This time we will only explain the Quick Optimization Tutorial.

Notebook explanation

The first cell imports the required libraries.

The Optimizer performs optimization including quantization, so calibration data is required. Typically, you will use images from the dataset used for training.

In this cell, the data is resized and cropped to fit the model and prepared as calib_dataset.
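The resize-and-crop step can be illustrated with a little geometry. The sketch below assumes a shortest-side resize to 256 pixels followed by a 224 x 224 central crop, which is a common recipe for ResNet-style classifiers; the sizes and the function name `resize_and_crop_box` are assumptions, not values taken from the notebook.

```python
def resize_and_crop_box(width, height, resize_side=256, crop=224):
    """Return the resized size and the central crop box for one image.

    The box uses the (left, upper, right, lower) convention.
    """
    # Scale so that the shorter side becomes resize_side pixels.
    scale = resize_side / min(width, height)
    new_w, new_h = round(width * scale), round(height * scale)

    # Take a crop x crop window from the centre of the resized image.
    left = (new_w - crop) // 2
    top = (new_h - crop) // 2
    return new_w, new_h, (left, top, left + crop, top + crop)


print(resize_and_crop_box(640, 480))  # (341, 256, (58, 16, 282, 240))
```

Applying this to every calibration image and stacking the results would give an array of shape (number of images, 224, 224, 3) to use as calib_dataset.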

In this cell, we load the HAR file created in the Parser section and create a runner instance from it.

This is where we actually perform optimization, including quantization.

runner.load_model_script(alls) loads the model script held in alls. Here, the script adds a normalization layer to the model.

After that, runner.optimize(calib_dataset) performs the optimization using the calibration data prepared earlier.

Then save it as a HAR file.
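The optimization steps above can be sketched as follows. This assumes that `ClientRunner` accepts a `har` argument and provides `load_model_script`, `optimize`, and `save_har`; the function name `quick_optimize` is hypothetical, and the contents of `alls` are an assumed example of a model script that applies ImageNet-style mean and standard deviation normalization.

```python
# Assumed example of a model script; the numeric values are the usual
# ImageNet per-channel mean and standard deviation on a 0-255 scale.
alls = "normalization1 = normalization([123.675, 116.28, 103.53], [58.395, 57.12, 57.375])\n"


def quick_optimize(parsed_har, calib_dataset, model_script, quantized_har):
    """Quantize a parsed model and save the result as a new HAR file."""
    # Only available inside the AI Software Suite environment.
    from hailo_sdk_client import ClientRunner

    runner = ClientRunner(har=parsed_har)     # start from the parsed HAR
    runner.load_model_script(model_script)    # settings applied during optimization
    runner.optimize(calib_dataset)            # optimization including quantization
    runner.save_har(quantized_har)            # archive the optimized state
    return quantized_har
```

Saving under a different file name than the parsed HAR keeps both states available, which is convenient when comparing accuracy before and after quantization.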

This completes the optimization process.

The cells that follow are useful for tuning the accuracy. Please also refer to the following notebooks.

DFC_5_Layer_Noise_Analysis_Tutorial.ipynb

DFC_6_QAT_Tutorial.ipynb

Tips

The important point in the Optimizer is accuracy. Since quantization is performed, some degradation in accuracy occurs, but there are various techniques to minimize this.

If you are not satisfied with the accuracy, please refer to the "Debugging Accuracy" section of the Hailo Dataflow Compiler User Guide. There are various points to review.

Of the items listed there, I think adding a normalization layer, as the tutorial does, is effective. The results also change depending on the optimization level and compression level, so please refer to the User Guide for these settings. You can also switch to 16-bit precision, although this sacrifices processing speed.

The Optimizer notebooks introduce a wide range of accuracy improvement techniques, so please use them to tune your accuracy.

DFC_2_Model_Optimization_Tutorial.ipynb

DFC_5_Layer_Noise_Analysis_Tutorial.ipynb

DFC_6_QAT_Tutorial.ipynb

Allocator/Compiler

DFC_3_Compilation_Tutorial.ipynb is the notebook for Allocator/Compiler.

Notebook explanation

The first cell imports the library, loads the HAR file produced by the Optimizer, and creates a runner instance from it.

Next, we compile the model and create a hef file (Hailo Executable Format).

Once the hef file has been created, the Dataflow Compiler's job is done.
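The compile step can be sketched as below. It assumes that `ClientRunner.compile()` returns the compiled model as bytes, which are then written to disk; the function name `compile_to_hef` is hypothetical.

```python
def compile_to_hef(quantized_har, hef_path):
    """Compile an optimized HAR file and write the result as a hef file."""
    # Only available inside the AI Software Suite environment.
    from hailo_sdk_client import ClientRunner

    runner = ClientRunner(har=quantized_har)   # HAR produced by the Optimizer
    hef = runner.compile()                     # assumed to return the hef as bytes

    with open(hef_path, "wb") as f:
        f.write(hef)
    return hef_path
```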

The Dataflow Compiler can also output a report file on the result, so I will introduce that as well.

You can create a Profiler Report by running this cell.
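Outside the notebook, the same report can be requested from a script. The sketch below assumes the `hailo profiler` command of the Suite's command-line tool and a HAR file saved after compilation; the file name `resnet_v1_18_compiled_model.har` and both function names are assumptions.

```python
import subprocess


def profiler_command(compiled_har):
    """Build the command line that generates the Profiler Report."""
    return ["hailo", "profiler", compiled_har]


def run_profiler(compiled_har):
    # Must run where the `hailo` command exists, i.e. inside the Suite.
    subprocess.run(profiler_command(compiled_har), check=True)


# Show the command without executing it.
print(" ".join(profiler_command("resnet_v1_18_compiled_model.har")))
```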

The output will be an HTML file like the one below, which contains useful information ranging from an overview of the model to detailed information on each layer, so please take a look.

Tips

The key point at the compile stage is processing speed (performance).

Whether or not Context Switching occurs affects performance, and a model that fits in a single Context gives the best performance.

The number of Contexts is shown in the report file above as "# of Contexts" (1 in the report above).

※Context Switch

This technique involves splitting the model (dividing it into Contexts) and loading each Context onto the device in turn for processing.

While it can handle large models, the overhead of loading the Context will reduce performance.

(If the Context is 1, loading will only be done once at the beginning, but if there are multiple Contexts, they will be swapped each time.)

All processing is done automatically by HailoRT, so users do not need to be aware of it.
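The effect of this loading overhead can be pictured with a deliberately simple model. The numbers below are made up for illustration and are not measurements, and the function name `frames_per_second` is hypothetical. The model assumes that with multiple Contexts every Context is loaded once per frame, and it ignores batching, which can spread the loading cost over several frames.

```python
def frames_per_second(compute_ms, n_contexts, load_ms_per_context):
    """Toy throughput estimate for a model split into n_contexts Contexts."""
    # A single Context is loaded once at start-up, so it costs nothing per frame.
    switch_ms = 0.0 if n_contexts <= 1 else n_contexts * load_ms_per_context
    return 1000.0 / (compute_ms + switch_ms)


# Made-up numbers: 5 ms of compute, 2 ms to load each Context.
print(frames_per_second(5.0, 1, 2.0))            # 200.0
print(round(frames_per_second(5.0, 3, 2.0), 1))  # 90.9
```

Even in this rough picture, going from one Context to three more than halves the throughput, which is why the "# of Contexts" value in the report is worth checking first.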

The following settings affect compilation, so if you are not satisfied with the performance, please try them out while referring to the Hailo Dataflow Compiler User Guide.

resources_param

performance_param

optimization_level/compression_level
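These settings are written as model script commands and passed to the runner as a string. The sketch below builds such a string; the command and parameter names (`model_optimization_flavor`, `performance_param`, `resources_param` and their arguments) and the values are assumptions based on the model script syntax, so please verify them against the User Guide for your Suite version. The function name `build_model_script` is hypothetical.

```python
def build_model_script(optimization_level=2, compression_level=1, max_utilization=0.8):
    """Assemble a model script string from a few tuning settings."""
    lines = [
        # Optimizer side: trade-off between accuracy effort and compression.
        f"model_optimization_flavor(optimization_level={optimization_level}, "
        f"compression_level={compression_level})",
        # Compiler side: ask the compiler to search harder for performance.
        "performance_param(compiler_optimization_level=max)",
        # Compiler side: cap how much of the device resources may be used.
        f"resources_param(max_control_utilization={max_utilization}, "
        f"max_compute_utilization={max_utilization})",
    ]
    return "\n".join(lines) + "\n"


alls = build_model_script()
print(alls)
```

The resulting string would be handed to runner.load_model_script(alls) before optimizing and compiling.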

That's how to use the Dataflow Compiler.

Please refer to the following link for information on running inference using the hef file.

