*This article is based on the Hailo AI Software Suite 2024-04 release.

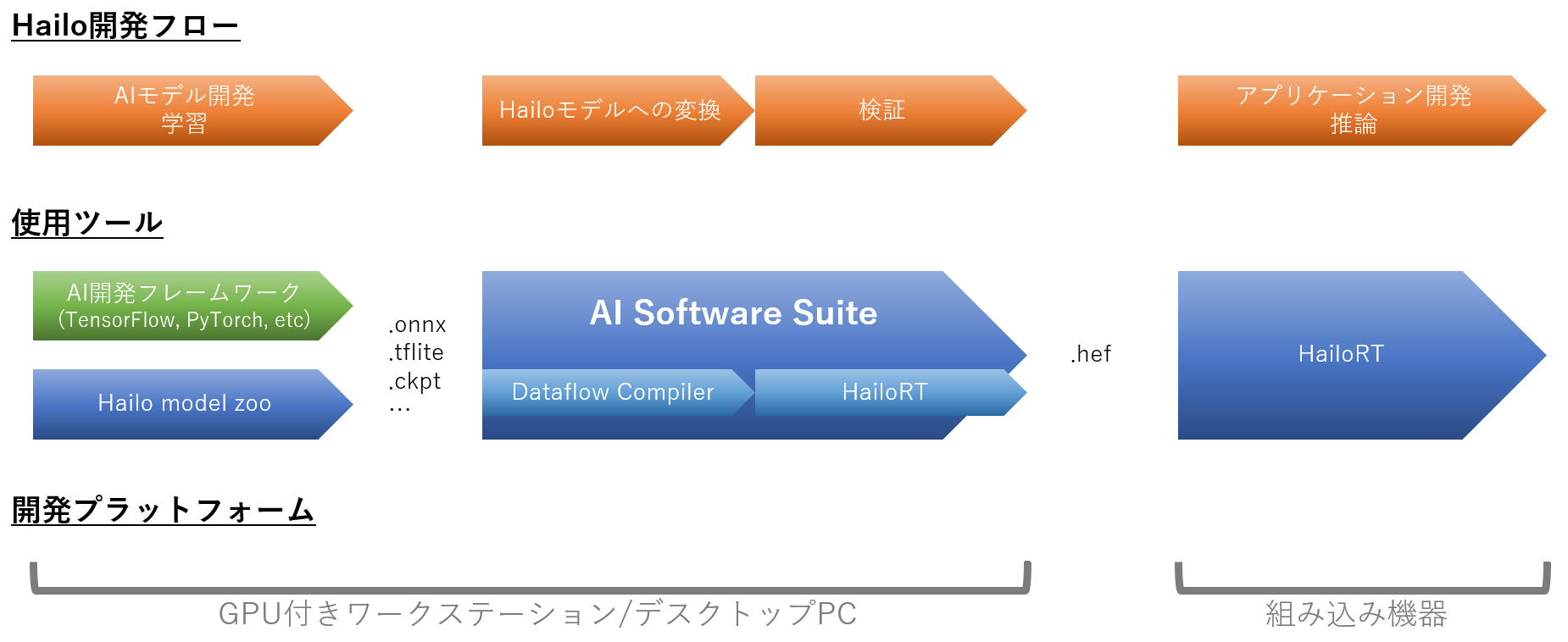

Hailo Development Flow

AI development includes building the AI model itself (graph construction and training), but Hailo is not involved in that part. What matters is how efficiently the trained model runs on Hailo's hardware, so Hailo's development flow begins once the model has been delivered. Development with Hailo's tools generally proceeds as follows.

In this flow, the model trained in an AI development framework is converted to an ONNX file and passed to the AI Software Suite. The AI Software Suite (specifically, its Dataflow Compiler) converts the ONNX file into a hef file (Hailo Executable binary File) optimized for Hailo hardware. The hef file is then copied to the embedded device, where inference is run through the HailoRT API.
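For reference, the conversion step can also be scripted with the Dataflow Compiler's Python API. The following is a minimal sketch modeled on the ClientRunner workflow used in Hailo's DFC tutorials (translate_onnx_model, optimize, compile). The helper names build_hef and hef_path_for, the hailo8 default target, and the calibration data format (usually a NumPy array of preprocessed sample images) are assumptions; some models also need start/end node names or input shapes when parsing, so check the DFC user guide for your version.

```python
from pathlib import Path


def hef_path_for(onnx_path: str) -> Path:
    """Return the output path for the compiled model, e.g. model.onnx -> model.hef."""
    return Path(onnx_path).with_suffix(".hef")


def build_hef(onnx_path: str, model_name: str, calib_data, hw_arch: str = "hailo8") -> Path:
    """Parse, optimize (quantize) and compile an ONNX model into a hef file.

    Assumptions: the Dataflow Compiler (hailo_sdk_client) is installed, the
    target is hailo8 by default, and calib_data is a NumPy array of
    preprocessed images. The import is done here so this module loads
    even on a machine without the DFC.
    """
    from hailo_sdk_client import ClientRunner

    runner = ClientRunner(hw_arch=hw_arch)
    # 1. Parse: translate the ONNX graph into Hailo's internal representation.
    #    Some models also need start_node_names/end_node_names/net_input_shapes.
    runner.translate_onnx_model(onnx_path, model_name)
    # 2. Optimize: quantize the model using the calibration images.
    runner.optimize(calib_data)
    # 3. Compile: map the quantized model onto the device; returns the hef bytes.
    hef_bytes = runner.compile()

    out_path = hef_path_for(onnx_path)
    out_path.write_bytes(hef_bytes)
    return out_path
```

Keeping the DFC import inside build_hef means only the build step needs the compiler installed; the resulting hef file is what you copy to the embedded device.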

In many cases, you may also prefer to retrain a public model rather than develop an AI model from scratch. The Hailo Model Zoo offers a selection of public models that have already been verified on Hailo hardware.

This article mainly explains how to install and use the AI Software Suite, in the following order. Click each link for details.

①Hardware and installation requirements for the AI Software Suite

②How to use Dataflow Compiler (how to create hef files)

③How to use HailoRT (running inference using hef files)

For reference, each tool provided by Hailo is explained below.

Hailo Tools Overview

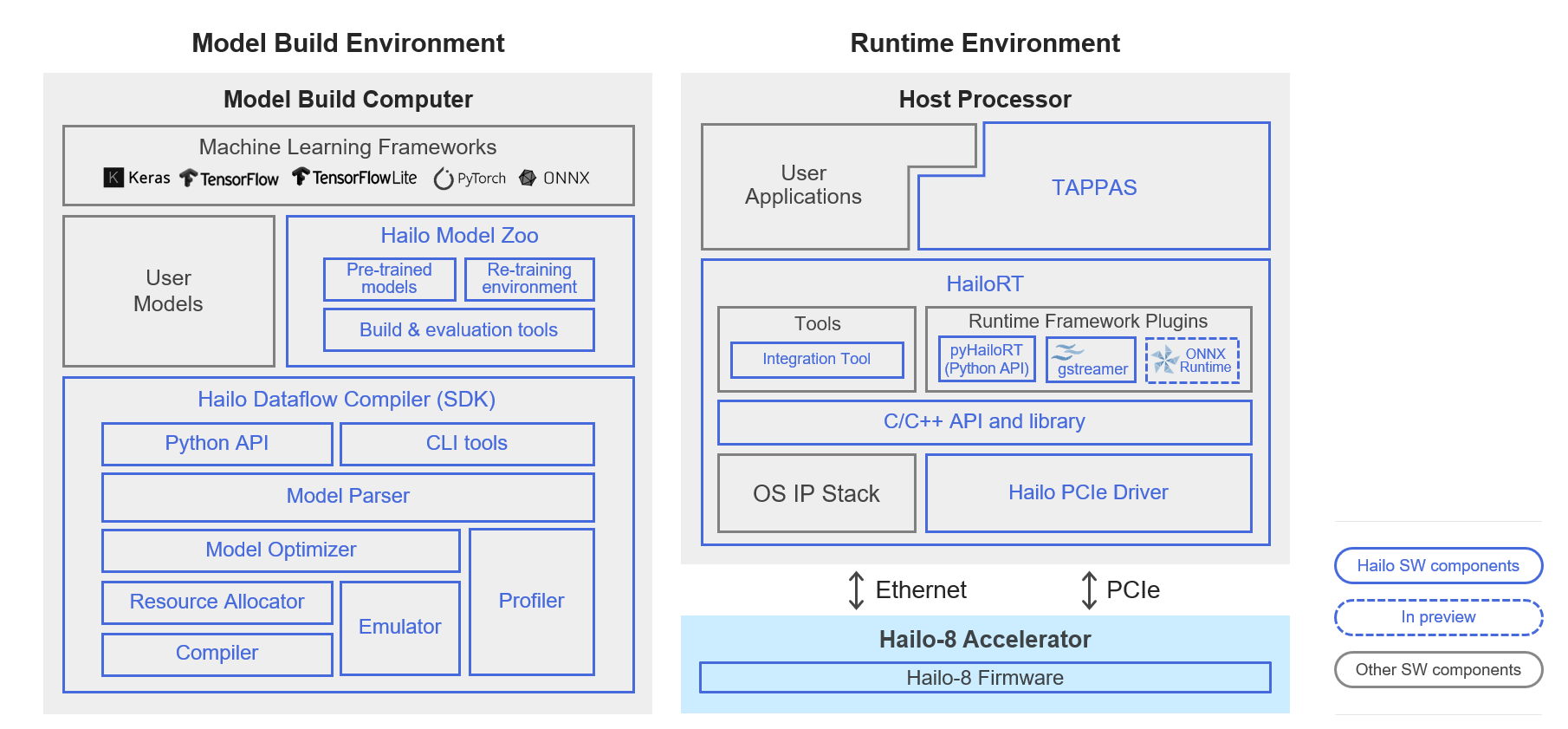

The figure above shows the tools provided by Hailo.

The left side (Model Build Environment) contains the development tools for creating hef files, and the right side (Runtime Environment) is the inference environment.

The AI Software Suite integrates the following tools: Hailo Model Zoo, Hailo Dataflow Compiler, HailoRT, and TAPPAS.

Hailo Model Zoo

This is a repository of public models compiled for each Hailo product.

It's available on GitHub, and the link is below:

https://github.com/hailo-ai/hailo_model_zoo

If you haven't decided which model to use yet, you can pick one here and try it out knowing that it compiles without errors and has verified performance and accuracy.

You can also try out various Dataflow Compiler functions, such as parsing, optimization, and compilation, with the hailomz command, and some models also support retraining.
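As an illustration, the hailomz steps can be driven from a Python script. This sketch only assembles and runs command lines; the subcommands it accepts (parse, optimize, compile, eval) and the --hw-arch option follow the Model Zoo's documentation, while the helper names and the example model yolov5m are illustrative. Other options (for example, for a retrained checkpoint) vary by version, so check `hailomz <subcommand> --help`.

```python
import subprocess
from typing import List, Optional

# Subset of hailomz subcommands this sketch accepts (hailomz has others).
SUPPORTED_ACTIONS = {"parse", "optimize", "compile", "eval"}


def hailomz_cmd(action: str, model: str, hw_arch: Optional[str] = None,
                extra: Optional[List[str]] = None) -> List[str]:
    """Build a hailomz command line, e.g. ['hailomz', 'compile', 'yolov5m']."""
    if action not in SUPPORTED_ACTIONS:
        raise ValueError(f"unsupported hailomz action: {action!r}")
    cmd = ["hailomz", action, model]
    if hw_arch is not None:
        cmd += ["--hw-arch", hw_arch]  # target device, e.g. hailo8 or hailo8l
    cmd += list(extra or [])  # any further options, passed through unchanged
    return cmd


def run_hailomz(action: str, model: str, hw_arch: Optional[str] = None,
                extra: Optional[List[str]] = None) -> None:
    """Run the command; requires the Model Zoo so that hailomz is on PATH."""
    subprocess.run(hailomz_cmd(action, model, hw_arch, extra), check=True)
```

For example, `run_hailomz("compile", "yolov5m", hw_arch="hailo8")` would run `hailomz compile yolov5m --hw-arch hailo8`.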

Hailo Dataflow Compiler

This tool converts ONNX files and other model formats (such as TensorFlow Lite) into hef files, Hailo's executable format.

Various optimization and quantization options can be used to improve performance and accuracy, and the Profiler Report lets you quickly check FPS, latency, and other metrics.
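Quantization maps the model's floating-point weights and activations to low-bit integers (typically 8-bit), using calibration data to choose the value ranges. To illustrate the idea only, the sketch below shows generic min/max asymmetric 8-bit quantization; it is not the Dataflow Compiler's actual algorithm, which applies further optimizations, and the function names are hypothetical.

```python
from typing import List, Tuple


def calibrate(values: List[float]) -> Tuple[float, int]:
    """Choose an 8-bit scale and zero point from the min/max of sample values."""
    lo = min(min(values), 0.0)  # the range must contain 0 so it maps exactly
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 if all values are 0
    zero_point = round(-lo / scale)
    return scale, zero_point


def quantize(x: float, scale: float, zero_point: int) -> int:
    """Map a float to an unsigned 8-bit code, clamping to [0, 255]."""
    return max(0, min(255, round(x / scale) + zero_point))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    """Map an 8-bit code back to an approximate float value."""
    return (q - zero_point) * scale
```

With sample activations [-1.0, 0.0, 0.5, 2.0], the scale is 3/255 and the zero point is 85, so -1.0 maps to 0 and 2.0 maps to 255, and each value comes back within half a step (scale / 2) of the original. This rounding error is why calibration data and the compiler's optimization options matter for accuracy.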

HailoRT

HailoRT is the runtime installed on the inference device (embedded device) equipped with Hailo hardware, and it handles communication with the actual Hailo device.

It supports x86 and ARM (aarch64) CPUs on Linux and Windows, so it can be used on a wide range of embedded devices.

This is an open source project available on GitHub.
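On the device side, a minimal inference sketch with HailoRT's Python bindings (hailo_platform) might look like the following. It follows the pattern of the HailoRT Python tutorials (HEF, VDevice, InferVStreams); the function name run_inference, the PCIe interface, the FLOAT32 input/output format, and a single-input model are assumptions, and class names or arguments may differ between HailoRT versions.

```python
def run_inference(hef_path: str, frames):
    """Run a batch of frames through a hef on a connected Hailo device.

    Assumptions: HailoRT's Python package (hailo_platform) is installed, the
    device is on PCIe, the model has a single input, and frames is a NumPy
    float32 array shaped (batch, height, width, channels) for that input.
    Returns a dict mapping each output vstream name to its result array.
    """
    from hailo_platform import (HEF, ConfigureParams, FormatType,
                                HailoStreamInterface, InferVStreams,
                                InputVStreamParams, OutputVStreamParams,
                                VDevice)

    hef = HEF(hef_path)  # load the compiled model
    with VDevice() as device:
        # Configure the device with the network group contained in the hef.
        params = ConfigureParams.create_from_hef(hef, interface=HailoStreamInterface.PCIe)
        network_group = device.configure(hef, params)[0]
        # Exchange float32 data; HailoRT converts to/from the quantized format.
        in_params = InputVStreamParams.make(network_group, format_type=FormatType.FLOAT32)
        out_params = OutputVStreamParams.make(network_group, format_type=FormatType.FLOAT32)
        input_name = hef.get_input_vstream_infos()[0].name
        with InferVStreams(network_group, in_params, out_params) as pipeline:
            with network_group.activate(network_group.create_params()):
                return pipeline.infer({input_name: frames})
```

As with the build sketch, the import sits inside the function so the code can be loaded on a host without HailoRT; actually calling it requires the runtime and a Hailo device.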

TAPPAS

TAPPAS provides end-to-end (E2E) demo applications that use pre-trained public models.

They are based on GStreamer and can be customized, for example by replacing the model.

TAPPAS is also released as open source.

https://github.com/hailo-ai/tappas
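Because TAPPAS demos are GStreamer pipelines, replacing the model mostly means pointing Hailo's elements at a different hef and a matching post-process library. The following simplified sketch builds such a pipeline description as a string. hailonet (hef-path), hailofilter (so-path) and hailooverlay are TAPPAS GStreamer elements, while the function name and the file names in the example are hypothetical, and a working pipeline needs more elements than shown here.

```python
def detection_pipeline(video_path: str, hef_path: str, postprocess_so: str) -> str:
    """Return a simplified gst-launch-1.0 pipeline description for a demo.

    hailonet runs the hef, hailofilter applies a post-process shared library,
    and hailooverlay draws the results. Real TAPPAS pipelines also add scaling
    to the model's input size, caps, and queues. Paths must not contain spaces.
    """
    elements = [
        f"filesrc location={video_path}",
        "decodebin",
        "videoconvert",
        f"hailonet hef-path={hef_path}",  # swap this hef to change the model
        f"hailofilter so-path={postprocess_so}",  # post-process matching the model
        "hailooverlay",
        "videoconvert",
        "autovideosink",
    ]
    return " ! ".join(elements)
```

For hypothetical files video.mp4, yolov5m.hef and libyolo_post.so, the resulting string could be passed to gst-launch-1.0 on a device with TAPPAS installed. Some post-process libraries also need hailofilter's function-name property, so check the TAPPAS documentation for the model you use.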

