What is Run:ai?

Founded in Israel in 2018, Run:ai is an MLOps technology company that aims to provide the foundation for AI infrastructure and to accelerate innovation for organizations in every industry. Its staff includes many cloud-native technology experts focused on Kubernetes, it holds a substantial body of proprietary IP, and it has extensive experience building large-scale GPU clusters. Run:ai is an NVIDIA DGX-certified software company and an NVIDIA Premier Inception Partner, and its products are widely adopted across AI markets including healthcare, autonomous driving, defense, energy, and retail.

Challenges in corporate AI development

A growing number of companies build their AI development infrastructure on GPUs as computing resources and on Kubernetes, the de facto standard for container orchestration, to manage containers efficiently. In these environments, AI engineers involved in model development face the following challenges.

Infrastructure silos and underutilization of GPU resources

GPUs are dedicated to individual departments or people, so computing resources cannot be shared and teams are forced to work inefficiently.

Delays in the AI development cycle

Different stages of AI development, such as model building, training, and production, require different GPU resources. If the right resources are not available at each stage, the development cycle is delayed.

Acquiring Kubernetes knowledge

Developers with no experience in container orchestration or Kubernetes need time to learn Kubernetes and the many other software components involved, along with the know-how to set them up, before they can build an AI development environment.

What the Run:ai Atlas Platform solves

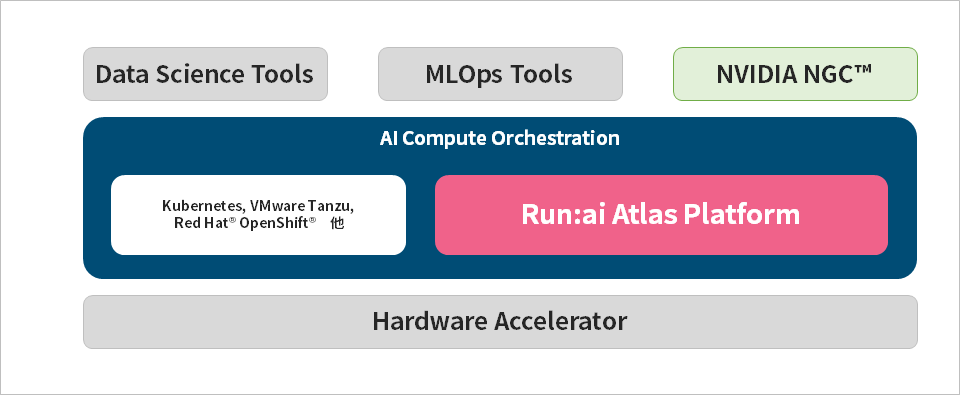

The Run:ai Atlas Platform enables dynamic, automatic sharing of GPU resources between teams. It is delivered as software that plugs into Kubernetes.

Full utilization of GPU resources across the organization

The platform creates a shared pool of GPUs so that computing resources can be fully used across multiple organizations and projects. Multiple containers and VMs can also run on a single GPU, which raises GPU utilization and improves the return on your GPU investment.
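To make the idea of pooling and sharing a single GPU concrete, here is a minimal sketch in Python. It is not Run:ai's implementation or API. The `Gpu` class, the `place` and `utilization` functions, the workload names, and the first-fit placement rule are all hypothetical, chosen only to show how fractional requests from several teams can fill a shared pool.

```python
from dataclasses import dataclass, field


@dataclass
class Gpu:
    """One physical GPU in the shared pool; capacity is normalized to 1.0."""
    name: str
    used: float = 0.0
    tenants: list = field(default_factory=list)

    def free(self) -> float:
        return 1.0 - self.used


def place(pool, workload, fraction):
    """First-fit (an assumed policy): use the first GPU with enough free capacity."""
    for gpu in pool:
        if gpu.free() + 1e-9 >= fraction:  # small tolerance for float rounding
            gpu.used += fraction
            gpu.tenants.append(workload)
            return gpu.name
    return None  # no GPU in the pool has enough free capacity


def utilization(pool):
    """Share of the pool's total capacity that is currently allocated."""
    return sum(g.used for g in pool) / len(pool)


# Hypothetical pool of two GPUs shared by three teams.
pool = [Gpu("gpu-0"), Gpu("gpu-1")]
requests = [
    ("team-a/notebook", 0.5),
    ("team-b/notebook", 0.5),
    ("team-a/train", 1.0),
    ("team-c/infer", 0.25),
]
placements = {name: place(pool, name, frac) for name, frac in requests}
```

In this sketch the two notebooks share `gpu-0`, the training job takes all of `gpu-1`, and the last request has to wait because the pool is full. With one dedicated GPU per team, the two notebooks alone would have occupied both GPUs at half load.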

Fully automatic GPU resource allocation based on priority

Mission-critical workloads always take priority: other jobs are preempted automatically so that GPU resources can be scheduled on the fly. This also lets each team operate its computing resources efficiently.
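Priority-based preemption can be illustrated with a small toy scheduler. This is a sketch under stated assumptions, not Run:ai's scheduler. The `Job` and `Pool` classes, the convention that a higher number means a higher priority, and the rule of evicting the lowest-priority jobs first are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    priority: int  # assumed convention: higher number = more important
    gpus: int      # whole GPUs requested


class Pool:
    """Toy GPU pool that preempts lower-priority jobs for higher-priority ones."""

    def __init__(self, total_gpus):
        self.total = total_gpus
        self.running = []
        self.pending = []

    def free(self):
        return self.total - sum(j.gpus for j in self.running)

    def submit(self, job):
        """Start the job if possible; return the names of any preempted jobs."""
        # Candidate victims: strictly lower priority, least important first.
        victims = sorted(
            (j for j in self.running if j.priority < job.priority),
            key=lambda j: j.priority,
        )
        reclaimable = sum(j.gpus for j in victims)
        if self.free() + reclaimable < job.gpus:
            self.pending.append(job)  # cannot fit even after preemption
            return []
        preempted = []
        while self.free() < job.gpus:
            victim = victims.pop(0)
            self.running.remove(victim)
            self.pending.append(victim)  # requeued, not discarded
            preempted.append(victim.name)
        self.running.append(job)
        return preempted


# Hypothetical workload mix on a four-GPU pool.
pool = Pool(total_gpus=4)
pool.submit(Job("experiment", priority=1, gpus=2))
pool.submit(Job("batch-train", priority=2, gpus=2))
evicted = pool.submit(Job("prod-inference", priority=10, gpus=2))
```

Here the pool is full when `prod-inference` arrives, so the sketch requeues the lowest-priority job, `experiment`, and leaves `batch-train` running. A production scheduler would also have to checkpoint and later resume preempted jobs, which this example leaves out.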

Adds rich functionality as an add-on to your existing Kubernetes environment

An abstraction layer built on Run:ai's proprietary technology adds capabilities such as a scheduler and a centralized management user interface. Users do not need Kubernetes knowledge and can focus on their own AI development.

Contact Us

Macnica provides MLOps solutions centered on NVIDIA products and has an extensive track record of building AI infrastructure for companies.

If you are facing AI development challenges or are interested in the Run:ai Atlas Platform that addresses them, please feel free to contact us.

AI TRY NOW PROGRAM

This support program lets you test the latest AI solutions in an NVIDIA development environment before introducing them into your company.

You can deepen your understanding of software products such as NVIDIA AI Enterprise and NVIDIA Omniverse and assess in advance whether they can meet your implementation goals.