Introduction

This article, "I measured the benchmark of YOLOv3 using the 11th generation Intel® Core™ processor! (Results)", is a continuation of the preparation version.

For the preparation version, please see the link below.

https://www.macnica.co.jp/business/semiconductor/articles/intel/137003/

Now, let's report on the measurement method and results!

YOLOv3 Benchmark Measurement Method and Results Using 11th Generation Intel® Core™ Processors

Operating procedure

Download the Intel® OpenVINO™ toolkit from the official Intel® site and install it.

I followed the article linked below for the installation. Thank you to the people who wrote it; thanks to them, I was able to get through the installation without much trouble!

How to Run OpenVINO™ on Mustang-F100 (Card with Intel® Arria® 10 FPGA)

You can check if the installation is working properly by running the following command!

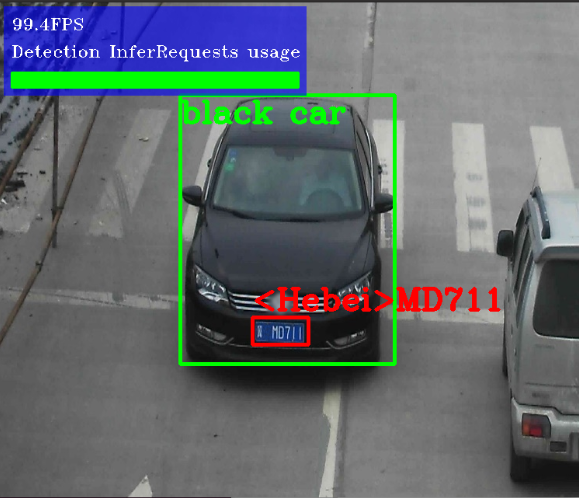

If you can identify the image of the car as shown below, intel® OpenVINO™tool kit is installed.

$ cd /opt/intel/openvino_2021/deployment_tools/demo $ ./demo_squeezenet_download_convert_run.sh $ ./demo_security_barrier_camera.sh

Next, run the FP32 and INT8 versions of the yolo-v3-tf model that we prepared and check their performance.

For information on how to convert to FP32 and INT8 models, please refer to the reference links in the supplement below!

```shell
./benchmark_app -m FP32/yolo-v3-ft.xml
./benchmark_app -m FP32/yolo-v3-ft.xml -d GPU
./benchmark_app -m INT8/yolo-v3-ft.xml
./benchmark_app -m INT8/yolo-v3-ft.xml -d GPU
```

Additional Notes on Converting to FP32 and INT8 Models

For converting the model to FP32 and INT8, the following articles were very helpful, so I am happy to share them here.

Convert YOLOv3 to IR format used by OpenVINO™ toolkit

Tried public model quantization (INT8) from OpenVINO™ toolkit Open Model Zoo

*Just between us: when I wrote this article, the actual model conversion was done by my senior colleagues.

Supplement on the commands above

The commands above use "benchmark_app", an inference benchmarking tool that is installed together with the Intel® OpenVINO™ toolkit.

Specify the file path of the network model after "-m", and the target device for inference after "-d".

Commands with "-d GPU" run inference on the GPU. Commands without "-d" run on the CPU, which is benchmark_app's default device.
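To run all four precision/device combinations in one go, the benchmark_app commands can be wrapped in a small shell function. This is a minimal sketch, assuming benchmark_app is in the current directory and the IR files sit at the same FP32/ and INT8/ paths used in the commands above; the function name `run_all_benchmarks` and the `DRY_RUN` variable are hypothetical helpers for illustration, not part of OpenVINO™. It only uses the "-m" and "-d" options described in this article, and passes "-d CPU" explicitly, which is equivalent to omitting "-d".

```shell
# Hypothetical helper (not part of OpenVINO): runs benchmark_app for every
# precision/device pair used in this article.
# Assumption: benchmark_app is in the current directory and the IR files are
# at FP32/yolo-v3-ft.xml and INT8/yolo-v3-ft.xml.
run_all_benchmarks() {
    for precision in FP32 INT8; do
        for device in CPU GPU; do
            model="${precision}/yolo-v3-ft.xml"
            if [ "${DRY_RUN:-0}" = "1" ]; then
                # Dry run: only print the command that would be executed.
                echo "./benchmark_app -m ${model} -d ${device}"
            else
                echo "=== ${precision} on ${device} ==="
                ./benchmark_app -m "${model}" -d "${device}"
            fi
        done
    done
}
```

After sourcing the function, `DRY_RUN=1 run_all_benchmarks` previews the four commands, and `run_all_benchmarks` actually runs them one after another.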

Performance confirmation/results

Honestly, it felt like I had finally made it this far. Looking back, though, I learned the basics on my own and got here just by following a few linked articles, so even beginners can do it! That's my impression.

So, I immediately checked the performance of the FP32 and INT8 yolo-v3-tf models on both the CPU and the GPU!

The results are as follows.

Looking at the results, using the GPU gave more than twice the FPS of the CPU alone for both FP32 and INT8 (about 2.5 times in each case).

Also, comparing FP32 and INT8, INT8 achieved higher throughput, because its weights and activations are quantized to 8-bit integers, which are cheaper to compute and move than 32-bit floating-point values.

- yolo-v3-tf performance with CPU

| | FP32 | INT8 |
|---|---|---|
| Throughput (FPS) | 6.28 | 23.89 |
| Latency (ms) | 688.35 | 180.36 |

- yolo-v3-tf performance with GPU

| | FP32 | INT8 |
|---|---|---|
| Throughput (FPS) | 15.42 | 59.25 |
| Latency (ms) | 263.63 | 67.69 |
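The "more than twice" (GPU vs CPU) and "3-4 times" (INT8 vs FP32) figures can be checked directly from the table values. The sketch below simply divides the throughput numbers with awk; the FPS values are copied by hand from the CPU and GPU tables, and the helper name `ratio` is hypothetical.

```shell
# Throughput (FPS) values copied from the CPU and GPU tables.
cpu_fp32=6.28
cpu_int8=23.89
gpu_fp32=15.42
gpu_int8=59.25

# Hypothetical helper: prints a / b rounded to two decimal places.
ratio() {
    awk -v a="$1" -v b="$2" 'BEGIN { printf "%.2f\n", a / b }'
}

echo "INT8 vs FP32 on CPU: $(ratio "$cpu_int8" "$cpu_fp32")x"   # 3.80x
echo "INT8 vs FP32 on GPU: $(ratio "$gpu_int8" "$gpu_fp32")x"   # 3.84x
echo "GPU vs CPU (FP32):   $(ratio "$gpu_fp32" "$cpu_fp32")x"   # 2.46x
echo "GPU vs CPU (INT8):   $(ratio "$gpu_int8" "$cpu_int8")x"   # 2.48x
```

INT8 comes out at roughly 3.8 times FP32 on both devices, and the GPU at roughly 2.5 times the CPU for both precisions.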

Summary

We measured the performance of the FP32 and INT8 yolo-v3-tf models on ADLINK's cExpress-TL with a Core i7-1185G7, an 11th generation Intel® Core™ processor.

We confirmed that INT8 delivers about 3.8 times the throughput of FP32 on both the CPU and the GPU.

In addition, in the course of getting these results, I learned steps needed in actual design work, such as converting yolo-v3-tf to FP32 and quantizing it to INT8.

Thank you very much for reading from the preparation version!

I started out knowing nothing, but as I progressed, I was able to learn a great deal from existing resources and the constantly updated body of information available.

I strongly feel that AI has become relatively easy to try even for a beginner like me, and my interest in AI has been rekindled. If you have any questions or concerns, please feel free to contact us.