Many-core MPPA® achieves high processing performance and low power consumption

Solutions for automated driving

Real roads are full of unexpected events.

To handle them, autonomous vehicles must perform a large amount of image processing and AI processing.

The many-core processor MPPA® is well suited to serve as the main ECU of an autonomous vehicle, because it can carry out such compute-intensive processing with low power consumption.

- Real-time processing realized by low-latency inter-core communication technology

- Achieves GPU-class computing performance with low power consumption

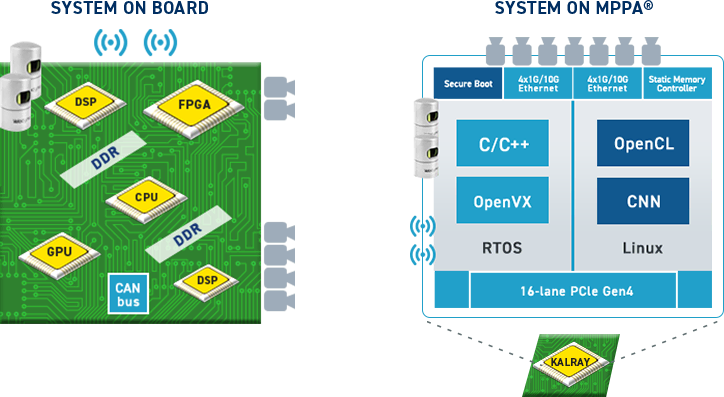

- Software development in standard languages (C/C++/OpenCL)

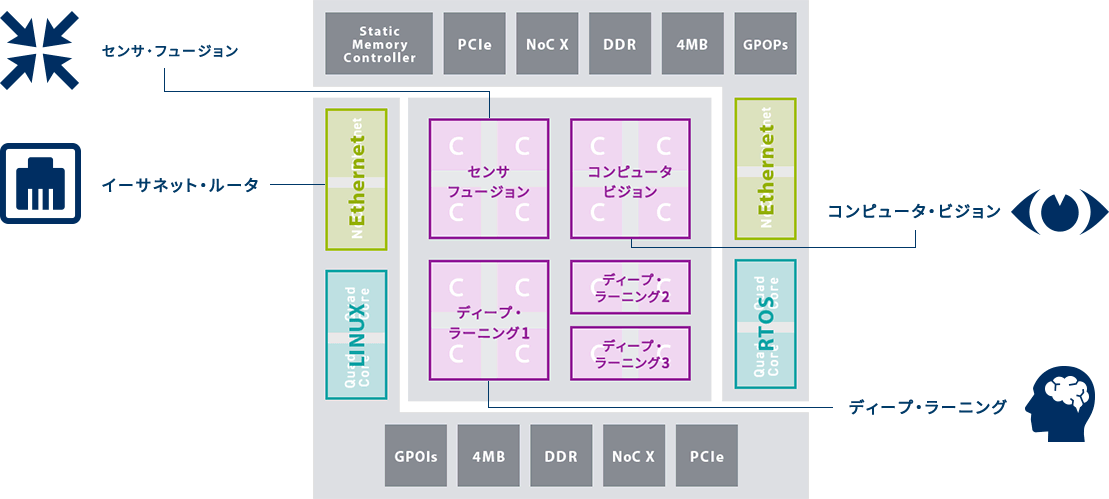

- Different applications (DNN, CV, etc.) can run simultaneously, each on its own cluster

- Provides tools for neural network implementation (supports Caffe/TensorFlow)

Many-core processor

・5 compute clusters

・80-160 CPU cores

・Two I/O clusters (quad-core CPU, DDR3, 4× 10G Ethernet, x8 PCIe Gen3)

・Data/control network on chip (NoC)

・1 TFLOPS single precision (SP)

Compute cluster

・16 64-bit user cores

・16 coprocessors

・NoC Tx and Rx interfaces

・Debug & Support Unit (DSU)

・4 MB multi-bank shared memory

・614 GB/s shared memory bandwidth

・16-core SMP system

VLIW core

・32-bit/64-bit addressing

・5-issue VLIW architecture (up to 5 instructions issued simultaneously)

・MMU + instruction and data caches (8 KB + 8 KB)

・32-bit/64-bit IEEE 754-2008 FMA FPU

・Cryptographic coprocessor (AES/SHA/CRC/…)

・6 GFLOPS SP per core

Because MPPA® can run a different OS and application on each cluster, systems that previously required a separate device for each function can now be consolidated on a single chip.

Neural network implementation tool “KaNN”

Kalray Neural Network (KaNN)

Compatibility

Due to its dynamic network topology, KaNN is compatible with any framework.

Modular design

Because KaNN is divided into a separate module for each function, you can run your own DNN.

Convenience

Implementation code can be generated from a neural network (NN) model's parameters and training data, which simplifies prototyping and speeds up CNN development.

Low latency

By leveraging the parallel processing capabilities of MPPA®, KaNN performs DNN inference with low latency.

Customizable

New layers can be added to suit your needs.

Built-in code generator

The generator produces human-readable C code, so the mapping of an NN onto MPPA® is easy to understand and modify.

Multi-application

Flexible parallel processing lets DNNs run concurrently with other applications without sacrificing performance or reliability.

Inquiry

Please feel free to contact us for any questions or consultations.