Have you ever heard the word "SLAM"? In this column, we explain the basics and uses of SLAM technology, which is used in applications such as autonomous driving, AGVs, and robots. We hope it deepens your understanding of SLAM from several perspectives, including its concepts, types, and use cases, and helps you put SLAM to use.

What is SLAM?

SLAM is an acronym for "Simultaneous Localization and Mapping". Taken word by word ("simultaneous", "localization", "and", "mapping"), it means "locating and mapping at the same time". A technology that "locates and creates maps at the same time" may still be difficult to imagine, so I will explain it in a little more detail.

First of all, please see the figure below. Suppose you were brought to a place blindfolded. After removing the blindfold, you first look at what is around you and search your memory for a place that matches. In your mind, you build a map from what your eyes tell you about the surroundings, and if it matches a location you know, you can pinpoint exactly where you are. If it is a place you do not know at all, you can still work out your location by starting from a blank slate, walking around, and building a map in your head until you reach a place you recognize.

In SLAM terms, this means performing two tasks simultaneously: "localization", which identifies where you are on the map and which direction you are facing, and "map creation", which collects information about what is around you in order to grasp the surrounding environment.

Because "localization" is usually expressed as self-localization and "mapping" as environment map creation, SLAM is generally used as an umbrella term for "technology that performs self-localization and environment map creation at the same time".
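To make "at the same time" concrete, here is a deliberately tiny sketch in Python. It is not a real SLAM algorithm: the robot moves along a one-dimensional line, and the function name `slam_step`, the landmark dictionary, and the `gain` parameter are all assumptions invented for illustration. Each step first corrects the pose using landmarks that are already on the map (localization), then places newly seen landmarks using that corrected pose (mapping).

```python
def slam_step(pose, landmark_map, odom, observations, gain=0.5):
    """One step of a toy 1D SLAM loop (illustrative only, not a real algorithm).

    pose:         current position estimate along a line, in meters
    landmark_map: dict of landmark id -> estimated position (the "map")
    odom:         distance the robot believes it moved since the last step
    observations: dict of landmark id -> measured offset from robot to landmark
    gain:         how strongly known landmarks pull the pose (assumed value)
    """
    # Predict: move the pose estimate by the odometry reading.
    pose = pose + odom

    # Localization: landmarks already on the map imply where the robot should be.
    errors = [
        (landmark_map[lid] - offset) - pose
        for lid, offset in observations.items()
        if lid in landmark_map
    ]
    if errors:
        pose += gain * sum(errors) / len(errors)

    # Mapping: place landmarks not seen before, using the corrected pose.
    updated_map = dict(landmark_map)
    for lid, offset in observations.items():
        if lid not in updated_map:
            updated_map[lid] = pose + offset
    return pose, updated_map


# Step 1: nothing is mapped yet, so landmark "a" is simply added to the map.
pose, world = slam_step(0.0, {}, odom=1.0, observations={"a": 4.0})
# Step 2: odometry over-reports the move (1.2 m). The known landmark "a"
# pulls the estimate back, and the new landmark "b" is added to the map.
pose, world = slam_step(pose, world, odom=1.2, observations={"a": 3.0, "b": 6.0})
```

In this sketch the map improves the pose and the pose places new map entries, which is the mutual dependence that the word "simultaneous" refers to.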

Utilization of SLAM

SLAM technology lets a machine know where it is and what its surroundings are like, so it can be applied to autonomous driving, drones, AGVs, robots, AR/VR/MR, and more. In all of these cases, it is very important to accurately grasp the self-position and the surrounding map.

In autonomous driving and drones, SLAM can calculate the exact distance between the vehicle and surrounding obstacles and landmarks, and that information makes it possible to determine the next course of action, such as avoiding an obstacle. AGVs and robots likewise need to accurately grasp their own position and the surrounding map in order to move to a destination or pick up an object with an attached arm. In this way, SLAM can serve as a "machine eye" in many use cases.

SLAM and sensors

To realize SLAM in autonomous driving, AGVs, and similar applications, the machine must grasp the conditions around it. This requires sensors such as LiDAR (laser scanners), cameras, and ToF sensors to sense the external environment. Each of these sensors has advantages and disadvantages, so the optimal sensor must be selected according to the required specifications of the application.

LiDAR has excellent ranging accuracy and a long maximum detection distance, but it is still relatively expensive at present. Cameras have high resolution and can distinguish colors, but they cope poorly with conditions such as fog and darkness. For example, a car moving at high speed on the road must accurately grasp the distance to the object in front, so LiDAR may be the best choice, whereas for an AGV moving at low speed that needs to read information such as 2D codes, a camera may be the better choice. Your budget for the sensor is also an important factor in the selection.

In order to realize SLAM, it is important to select the optimal sensor according to the use case.

Other sensors include IMUs, odometry, and GNSS. An IMU (inertial measurement unit) acquires information such as acceleration and angular velocity. Odometry calculates the amount of movement by accumulating known increments; for example, walking 10 steps with a stride length of 1 m means moving 10 m. GNSS is a general term for satellite positioning systems, including GPS, and provides the self-position as latitude and longitude wherever radio waves transmitted from the satellites can be received.
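The odometry idea can be sketched in a few lines of Python. This is a minimal illustration rather than any particular product's implementation: the function name `integrate_odometry` and the `(distance, heading_change)` increment format are assumptions. Each increment first turns the robot and then moves it along the new heading.

```python
import math


def integrate_odometry(increments, start=(0.0, 0.0, 0.0)):
    """Accumulate (distance, heading_change) increments into an (x, y, theta) pose.

    Distances are in meters and angles in radians. Each increment applies the
    heading change first, then moves the given distance along the new heading.
    """
    x, y, theta = start
    for distance, heading_change in increments:
        theta += heading_change
        x += distance * math.cos(theta)
        y += distance * math.sin(theta)
    return x, y, theta


# Ten steps of 1 m straight ahead, as in the walking example: about 10 m along x.
straight = integrate_odometry([(1.0, 0.0)] * 10)

# One step forward, then a 90-degree left turn and one more step.
turned = integrate_odometry([(1.0, 0.0), (1.0, math.pi / 2)])
```

Because every increment is added to the previous total, small measurement errors also accumulate, which is one reason odometry is usually combined with other sensors.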

The frequency at which data arrives also differs from sensor to sensor. For example, LiDAR data arrives 10 to 30 times per second, camera data 30 to 60 times per second, and IMU data more frequently still.
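Because the streams arrive at different rates, a common first step is to pair each low-rate measurement with the closest high-rate sample by timestamp. The sketch below shows one simple way to do that in Python. The helper name `nearest_sample`, the integer millisecond timestamps, and the 100 Hz IMU rate are assumptions chosen for illustration; the text only states that the IMU is faster than the camera.

```python
from bisect import bisect_left


def nearest_sample(timestamps, t):
    """Return the index of the timestamp closest to t.

    `timestamps` must be non-empty and sorted in ascending order.
    On a tie, the earlier sample is chosen.
    """
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    before, after = timestamps[i - 1], timestamps[i]
    return i if (after - t) < (t - before) else i - 1


# Timestamps in milliseconds: LiDAR at 10 Hz, IMU at an assumed 100 Hz.
lidar_ms = [0, 100, 200]
imu_ms = list(range(0, 300, 10))

# For each LiDAR scan, find the IMU sample taken closest in time.
pairs = [(t, imu_ms[nearest_sample(imu_ms, t)]) for t in lidar_ms]
```

Real systems often interpolate between neighboring samples instead of picking the nearest one, but the timestamp lookup is the same.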

Depending on the intended application, you need to decide which sensors to use and how to fuse their information in order to obtain self-location with appropriate accuracy or to map the surrounding environment. How this information is combined has a direct bearing on the accuracy of self-localization and environment map generation.
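As one hedged illustration of what "fusing" can mean, the sketch below combines two independent estimates of the same position by weighting each with the inverse of its variance, which is the core of a one-dimensional Kalman-style update. The function name `fuse` and the example numbers are assumptions; production SLAM systems use far richer models.

```python
def fuse(est_a, var_a, est_b, var_b):
    """Fuse two independent estimates of the same quantity.

    Each estimate is weighted by the inverse of its variance, so the more
    certain sensor has more influence. Returns (fused_estimate, fused_variance).
    """
    if var_a <= 0 or var_b <= 0:
        raise ValueError("variances must be positive")
    weight_a = var_b / (var_a + var_b)
    fused = weight_a * est_a + (1.0 - weight_a) * est_b
    fused_var = (var_a * var_b) / (var_a + var_b)
    return fused, fused_var


# Hypothetical readings: odometry says 10.0 m (variance 4.0),
# GNSS says 12.0 m (variance 1.0). The result lies closer to the GNSS value.
position, variance = fuse(10.0, 4.0, 12.0, 1.0)
```

The fused variance is smaller than either input variance, which is why combining sensors can improve accuracy beyond what any single sensor provides.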

SLAM types

Next, I will introduce the types of SLAM. SLAM is broadly classified into three types according to the input sensor: LiDAR SLAM, which uses LiDAR as input; Visual SLAM, which uses cameras; and Depth SLAM, which uses ranging information from ToF sensors. In addition, combining information from multiple sensors makes SLAM usable for use cases that require wide-area coverage and high accuracy. Let's take a look at the characteristics of each type.

LiDAR SLAM

LiDAR SLAM is SLAM realized with data acquired from LiDAR (a laser scanner). LiDAR outputs 2D (X, Y coordinates) or 3D (X, Y, Z coordinates) point cloud data and can measure the distance to an object with extremely high accuracy. LiDAR also measures long distances more accurately than cameras and can generate more accurate maps. However, it is generally difficult to acquire point cloud data in an environment with few nearby objects to detect, and the data processing load is large.
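To show what 2D point cloud data looks like in practice, the following Python sketch converts one planar scan, given as a list of ranges at evenly spaced angles, into X, Y points in the sensor frame. The function and parameter names (`scan_to_points`, `angle_min`, `angle_increment`) are illustrative assumptions, not a specific driver's API. Beams with no valid return are dropped, which also illustrates why open environments yield few points.

```python
import math


def scan_to_points(ranges, angle_min, angle_increment, max_range=float("inf")):
    """Convert a planar LiDAR scan into a list of (x, y) points.

    ranges:          measured distances in meters, one per beam
    angle_min:       angle of the first beam in radians
    angle_increment: angular step between consecutive beams in radians
    Beams that are non-finite, non-positive, or beyond max_range are skipped.
    """
    points = []
    for i, r in enumerate(ranges):
        if not math.isfinite(r) or r <= 0.0 or r > max_range:
            continue  # no usable return for this beam
        angle = angle_min + i * angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


# Three beams at 0, 45, and 90 degrees; the middle beam hit nothing.
cloud = scan_to_points([1.0, float("inf"), 2.0], angle_min=0.0,
                       angle_increment=math.pi / 4)
```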

The following video shows LiDAR SLAM in action.

The upper right of the screen shows the information of the surrounding objects detected by LiDAR. In the upper left of the screen, the information from LiDAR and the image of the in-vehicle camera are superimposed. The bottom half of the screen shows how a 3D map is being created, and the light blue line represents the trajectory of the self-position. You can see that it detects surrounding objects with extremely high accuracy and creates a 3D map of the places it passes through in real time.

Visual SLAM

Visual SLAM is SLAM realized with images from a camera. The cameras used include monocular cameras with a single lens and stereo cameras with multiple lenses, and they are used to measure the distance to objects visible in the surroundings. Because cameras are relatively inexpensive, the cost advantage is large, and Visual SLAM is often combined with other sensors such as IMUs and ToF sensors.
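For a rectified stereo camera, the distance to a point follows from the standard pinhole relation Z = f * B / d, where f is the focal length in pixels, B is the baseline between the two lenses, and d is the disparity (how far the point shifts between the left and right images). The Python sketch below applies that formula; the function name and the example camera values are assumptions chosen for illustration.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth in meters of a point seen by a rectified stereo pair.

    focal_px:     focal length in pixels
    baseline_m:   distance between the two camera centers in meters
    disparity_px: horizontal shift of the point between the two images, in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the camera")
    return focal_px * baseline_m / disparity_px


# Hypothetical camera: 700 px focal length, 12 cm baseline.
# A feature shifted by 14 px between the images is roughly 6 m away.
near = depth_from_disparity(700.0, 0.12, 14.0)
# Halving the disparity doubles the distance, so far objects are harder to range.
far = depth_from_disparity(700.0, 0.12, 7.0)
```

Since depth is inversely proportional to disparity, a fixed pixel error matters more at long range, which is consistent with LiDAR's advantage at long distances.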

The following video shows Visual SLAM in action.

This footage was taken with a monocular camera; based on the camera input, surrounding objects are recognized as a large number of feature points, shown as purple “X” marks on the camera image. The system recognizes feature points on objects and calculates the distance to them from how the image changes as the camera's distance and angle change. At the same time, you can see the green point cloud building a 3D map of the surrounding environment in the upper left of the screen.

Depth SLAM

Depth SLAM is SLAM that uses depth images (distance information) obtained from sensors. ToF sensors and depth cameras are mainly used to measure the distance to objects visible in the surroundings. Depth SLAM can run even in environments with few feature points or in dark places, where Visual SLAM struggles.
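A depth image becomes usable for mapping once each pixel is turned into a 3D point. The Python sketch below does this with the standard pinhole camera model. The function name `depth_to_points` and the intrinsic parameters `fx`, `fy`, `cx`, `cy` (focal lengths and principal point in pixels) are illustrative assumptions, and the tiny 2x2 image stands in for real sensor output.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth image into 3D points in the camera frame.

    depth:  2D list of depths in meters, indexed as depth[row][column]
    fx, fy: focal lengths in pixels
    cx, cy: principal point (optical center) in pixels
    Pixels with a depth of zero or less are treated as invalid and skipped.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0.0:
                continue  # the sensor returned no measurement here
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# A 2x2 depth image with one invalid pixel, all valid pixels 2 m away.
tiny_depth = [
    [0.0, 2.0],
    [2.0, 2.0],
]
cloud3d = depth_to_points(tiny_depth, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
```

Because the depth comes directly from the sensor, no feature matching is needed to obtain these points, which is why this approach can work in scenes with little texture.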

The following video shows Depth SLAM in action.

The bottom of the screen shows the image acquired from the depth camera. The upper left of the screen shows the information from the camera image and the feature points (green points) detected from the camera image. Based on these two pieces of information, you can see how 3D mapping (purple dots) and self-location recognition (green square pyramid) are being performed in the upper right of the screen.

Application of SLAM to robots (ROS)

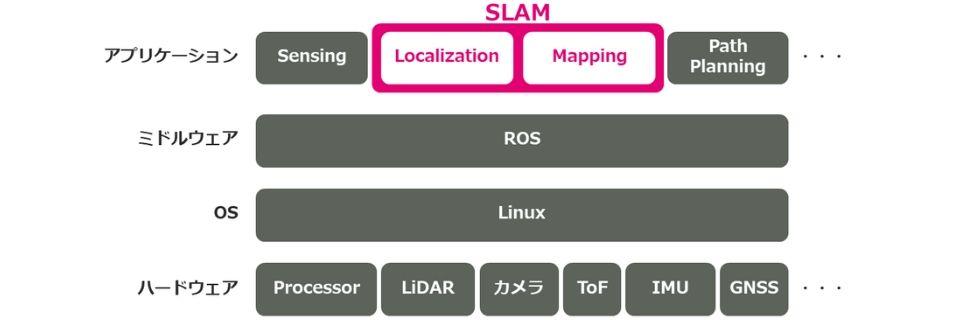

Here, I will explain the concept of incorporating SLAM into a robot. The figure above shows the layer structure of a robot: the hardware, such as processors and sensors, and the upper layers of OS, middleware, and applications.

As mentioned earlier, multiple sensors feed the SLAM block at different frequencies. By fusing this information, the SLAM algorithm outputs the robot's position and attitude with appropriate accuracy. Using that information, the route planning module calculates the route to the destination, and the drive components, such as the wheels, are controlled based on the result. By repeating this process continuously, the robot moves toward its destination while recognizing its own position. To apply SLAM to robots, it is necessary to understand how to implement SLAM using ROS (Robot Operating System) and various libraries.
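The repeated cycle described above (estimate the pose, plan the next move, drive, repeat) can be sketched as a plain Python loop. This sketch does not use ROS or any real SLAM library. The names `plan_step` and `run` are hypothetical, the planner simply heads straight for the goal, and the pose update assumes every command is executed perfectly, which stands in for the SLAM block's output.

```python
import math


def plan_step(pose, goal, max_step=0.5):
    """Route planning stand-in: head straight for the goal, at most max_step meters."""
    dx, dy = goal[0] - pose[0], goal[1] - pose[1]
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        return (0.0, 0.0)
    step = min(dist, max_step)
    return (step * dx / dist, step * dy / dist)


def run(start, goal, tolerance=0.05, max_cycles=100):
    """Repeat the localize -> plan -> control cycle until the goal is reached."""
    pose = start  # stand-in for the pose estimate produced by SLAM
    path = [pose]
    for _ in range(max_cycles):
        if math.hypot(goal[0] - pose[0], goal[1] - pose[1]) <= tolerance:
            break  # close enough to the destination
        command = plan_step(pose, goal)
        # Control stand-in: assume the drive executes the command exactly,
        # so the next pose estimate is the old pose plus the command.
        pose = (pose[0] + command[0], pose[1] + command[1])
        path.append(pose)
    return path


# Drive from the origin to a goal 5 m away in 0.5 m steps.
route = run((0.0, 0.0), (3.0, 4.0))
```

In a real robot, each of these stand-ins would be a separate module exchanging messages, with the pose supplied by the SLAM block rather than assumed.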

SLAM introduction support service

Finally, SLAM is expected to be used in a variety of use cases as a technology that simultaneously estimates self-location and creates environment maps. Macnica provides a "SLAM Implementation Support Service" that offers total support for using SLAM in customer environments, including consulting on the most suitable sensor for each use case, provision of licenses, and technical support from the implementation stage onward.

Please download the materials regarding SLAM introduction support service from here. We provide detailed information on service content and processes.

Inquiry

We demonstrate SLAM with various sensors such as LiDAR, stereo cameras, and ToF sensors. In addition to demonstrations with various cameras at our office, we can also conduct LiDAR SLAM demonstrations using our own vehicles.

If you have any questions about SLAM or would like a demonstration, please feel free to contact us using the form below.