This episode explains how to implement deep learning inference on Jetson. We strongly recommend TensorRT for this purpose; the table below summarizes it alongside the other options.

[Jetson video processing programming]

Episode 1 What you can do with JetPack and SDK provided by NVIDIA

Episode 2 Video input (CSI-connected Libargus-compliant camera)

Episode 3 Video input (USB-connected V4L2-compliant camera)

Episode 4 Resize and format conversion

Episode 9 Deep Learning Inference

Episode 10 Maximum Use of Computing Resources

| API | Programming language | Comment |
| --- | --- | --- |
| NVIDIA TensorRT | C++, Python | High-speed inference engine for Jetson and NVIDIA GPU cards. A model trained in a deep learning framework is converted to TensorRT format before use. Supports accelerators such as the DLA. |
| NVIDIA cuDNN | C | CUDA library for deep neural network processing. Most deep learning frameworks call this library internally, but user applications can also use the cuDNN API directly. |
| TensorFlow | Python | Primarily intended for training, but can also be used for inference. |
| PyTorch | Python | Primarily intended for training, but can also be used for inference. |

Using TensorRT

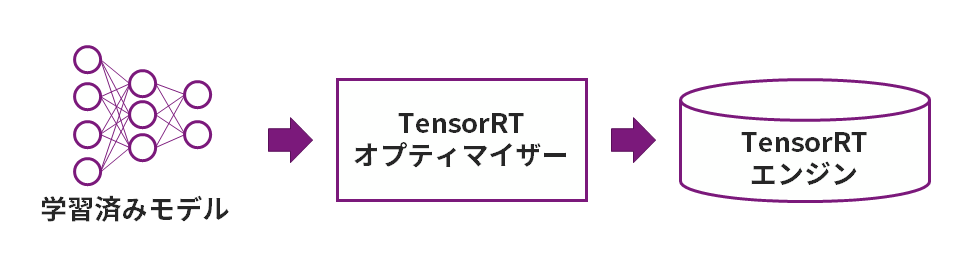

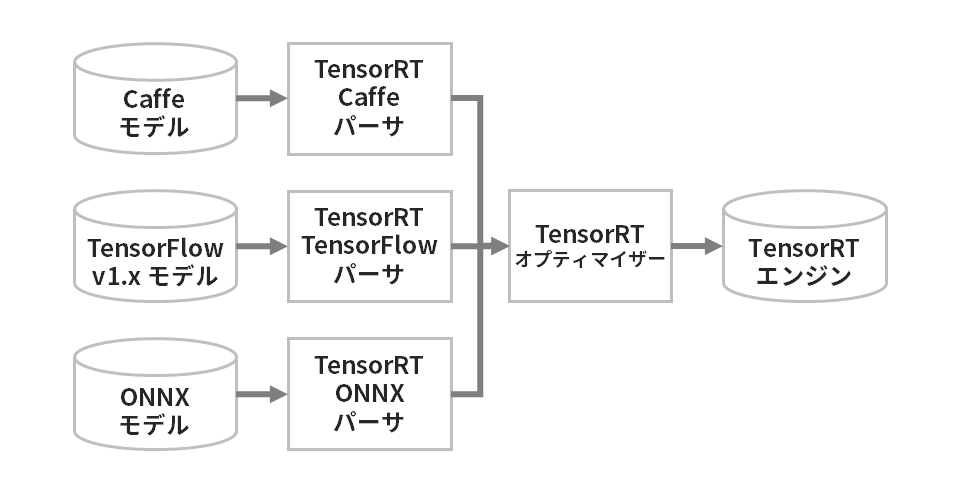

To use TensorRT, you must first convert your trained model to TensorRT format (called a TensorRT serialized engine or a TensorRT plan file).

Several model formats can be converted to TensorRT format. Because TensorRT supports ONNX, a common exchange format for trained models, the conversion usually goes through ONNX.
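One way to perform the ONNX conversion is the `trtexec` command-line tool that ships with TensorRT. The sketch below only assembles and runs that command from Python; it is an illustration under assumptions, not the method used in the linked articles. The helper names `build_trtexec_command` and `convert_onnx_to_engine` are hypothetical, and the `trtexec` path is the location we assume for a typical JetPack install, so adjust it to your environment.

```python
import subprocess
from pathlib import Path

# Assumed location of trtexec on a typical JetPack install; change if yours differs.
DEFAULT_TRTEXEC = "/usr/src/tensorrt/bin/trtexec"


def build_trtexec_command(onnx_path, engine_path, fp16=False, dla_core=None,
                          trtexec=DEFAULT_TRTEXEC):
    """Assemble the trtexec arguments that convert an ONNX model to an engine file."""
    cmd = [trtexec, f"--onnx={onnx_path}", f"--saveEngine={engine_path}"]
    # The DLA runs layers in FP16 or INT8, so FP16 is enabled whenever a DLA core is requested.
    if fp16 or dla_core is not None:
        cmd.append("--fp16")
    if dla_core is not None:
        # Layers the DLA cannot run fall back to the GPU.
        cmd += [f"--useDLACore={dla_core}", "--allowGPUFallback"]
    return cmd


def convert_onnx_to_engine(onnx_path, engine_path, **options):
    """Run trtexec and return the path of the serialized engine (plan file)."""
    cmd = build_trtexec_command(onnx_path, engine_path, **options)
    subprocess.run(cmd, check=True)  # raises CalledProcessError if the conversion fails
    return Path(engine_path)
```

For example, `convert_onnx_to_engine("model.onnx", "model.engine", fp16=True)` would write `model.engine` next to the script, assuming `model.onnx` exists. An engine is built for the specific device and TensorRT version, so it is usually generated on the Jetson that will run it.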

Details on how to use TensorRT are available in the following five-episode article series and its sample code. Please take a look.

Techniques for accelerating deep learning inference

Episode 1: Overview of NVIDIA TensorRT

Episode 2: How to use NVIDIA TensorRT ONNX

Episode 3: How to use NVIDIA TensorRT - torch2trt

Episode 4: How to use NVIDIA TensorRT: TensorRT API

Episode 5: How to create NVIDIA TensorRT custom layers

Using cuDNN

The cuDNN API lets you define a neural network in fine detail. However, you must also set the trained weights and bias values yourself through the cuDNN API, which increases the programming effort. We recommend using cuDNN directly only when you need to define a special processing layer; in all other cases, use TensorRT as described above.

cuDNN sample program

For cuDNN 8.x, the sample programs are located in the following directory on Jetson.

/usr/src/cudnn_samples_v8

| Sample | Content |
| --- | --- |
| conv_sample | Example of using the convolution layer functions |
| mnistCUDNN | MNIST with cuDNN |
| multiHeadAttention | Example of using the multi-head attention API |
| RNN | Example of recurrent neural network processing |
| RNN_v8.0 | Example of recurrent neural network processing |

Using TensorFlow

NVIDIA has released TensorFlow with the Jetson built-in GPU enabled.

Installing TensorFlow for Jetson Platform

It can also be run in a Docker container.

NVIDIA NGC Catalog > Containers > NVIDIA L4T TensorFlow (JetPack 5 and earlier)

NVIDIA NGC Catalog > Containers > TensorFlow (JetPack 6 and later, tags ending in "-igpu" are for Jetson)
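After installing TensorFlow or starting one of these containers, it can be worth confirming that TensorFlow actually sees the built-in GPU before running inference. The following is a minimal sketch; the helper name `tensorflow_gpu_names` is hypothetical, and it returns `None` when TensorFlow is not installed so that it can be run anywhere.

```python
import importlib.util


def tensorflow_gpu_names():
    """Return the GPU device names visible to TensorFlow, or None if TensorFlow is missing."""
    if importlib.util.find_spec("tensorflow") is None:
        return None
    import tensorflow as tf

    # An empty list means TensorFlow is installed but cannot see a GPU.
    return [device.name for device in tf.config.list_physical_devices("GPU")]


names = tensorflow_gpu_names()
if names is None:
    print("TensorFlow is not installed")
elif names:
    print("GPUs visible to TensorFlow:", names)
else:
    print("TensorFlow is installed, but no GPU is visible")
```

If the list comes back empty on a Jetson, the installed package is probably a CPU-only build rather than the NVIDIA build described above.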

Using PyTorch

NVIDIA has released PyTorch with the Jetson built-in GPU enabled.

Installing PyTorch for Jetson Platform

It can also be run in a Docker container.

NVIDIA NGC Catalog > Containers > NVIDIA L4T PyTorch (JetPack 5 and earlier)

NVIDIA NGC Catalog > Containers > PyTorch (JetPack 6 and later, tags ending in "-igpu" are for Jetson)
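As a quick check that PyTorch can run inference on the built-in GPU, the sketch below pushes one random input through a tiny layer with gradient tracking disabled. The helper name `run_tiny_inference` and the `Linear(4, 2)` stand-in model are hypothetical; a real application would load its own trained model. The function falls back to the CPU when CUDA is unavailable and returns `None` when PyTorch is not installed.

```python
import importlib.util


def run_tiny_inference():
    """Run one forward pass and return (device type, output shape), or None without PyTorch."""
    if importlib.util.find_spec("torch") is None:
        return None
    import torch

    # Use the GPU when PyTorch was built with CUDA support and a device is present.
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    model = torch.nn.Linear(4, 2).to(device).eval()  # stand-in for a trained model
    batch = torch.randn(1, 4, device=device)

    # Inference does not need gradients, so disable tracking to save memory and time.
    with torch.no_grad():
        output = model(batch)
    return device.type, tuple(output.shape)


print(run_tiny_inference())
```

On a Jetson with the NVIDIA build installed, the reported device type should be `cuda`; seeing `cpu` suggests a CPU-only PyTorch package.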

The finale is next! We will explain how to make the most of your computing resources!

How did you find the ninth episode of the "Jetson Video Processing Programming" series, which introduced deep learning inference?

In the next and final episode, we will introduce how to make the most of your computing resources.

If you have any questions, please feel free to contact us.

We offer hardware selection and support for NVIDIA GPU cards and GPU workstations, as well as facial recognition, route analysis, and skeleton detection algorithms, and learning environment construction services. If you have any problems, please feel free to contact us.