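In Part 6 of this series, we look at how to encode video.

[Jetson Video Processing Programming]

Part 1: What you can do with NVIDIA's JetPack and SDKs

Part 2: Video input (Libargus-compliant cameras connected over CSI)

Part 6: Video encoding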

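In this part, we look at how to encode video. Video encoding is extremely compute-intensive. A CPU can do it, but encoding then ties up much of the CPU's resources, which is inefficient. Jetson has a dedicated hardware accelerator for video encoding, the NVIDIA Video Encoder Engine (NVENC), so video is normally encoded with NVENC. The fact that it is hardware is no cause for concern: you can use NVENC's fast encoding through GStreamer, a well-known multimedia processing framework, or through the Jetson Multimedia API, which conforms to the V4L2 API.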

Implementation options

Video encoding with NVENC can be implemented in the following ways.

| API | Programming languages | Notes |
|---|---|---|
| GStreamer | C, Python | |
| Jetson Multimedia API | C | |

Using GStreamer

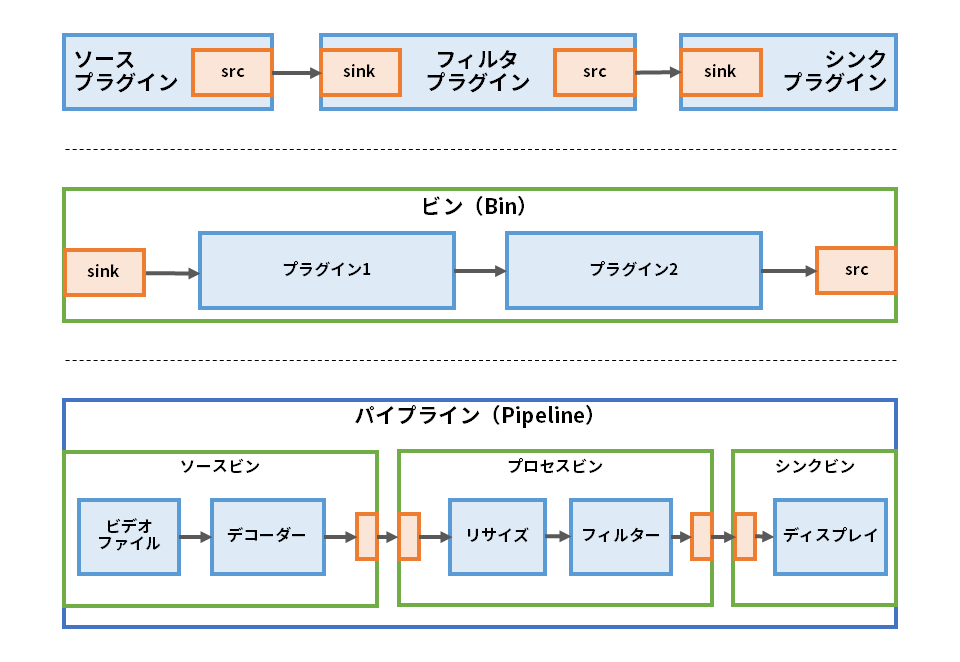

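In the GStreamer framework, you build multimedia processing by combining components called plugins. NVIDIA provides plugins for video encoding, and those are what we use here. There is a separate plugin for each supported codec (H.264, H.265, VP8, VP9). They are included in JetPack, so you can use them right away.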
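To show how the per-codec plugins map to GStreamer element names, here is a minimal Python sketch. The element names nvv4l2h264enc, nvv4l2h265enc, nvv4l2vp8enc and nvv4l2vp9enc are NVIDIA's V4L2-based encoder elements; which of them are actually present depends on the Jetson module and JetPack version, so the sketch also checks availability with gst-inspect-1.0. The names ENCODERS, encoder_chain and element_available are hypothetical helpers, not part of JetPack.

```python
import shutil
import subprocess

# Codec -> (encoder element, parser element). VP8/VP9 get no parser here;
# they would need a different muxer (e.g. matroskamux) than the MP4 path
# used in this article (assumption for illustration).
ENCODERS = {
    "h264": ("nvv4l2h264enc", "h264parse"),
    "h265": ("nvv4l2h265enc", "h265parse"),
    "vp8": ("nvv4l2vp8enc", None),
    "vp9": ("nvv4l2vp9enc", None),
}


def encoder_chain(codec: str, bitrate: int = 4_000_000) -> str:
    """Return the 'encoder ! parser' fragment of a gst-launch-1.0 pipeline."""
    key = codec.lower()
    if key not in ENCODERS:
        raise ValueError(f"unsupported codec: {codec}")
    encoder, parser = ENCODERS[key]
    chain = f"{encoder} bitrate={bitrate}"
    return f"{chain} ! {parser}" if parser else chain


def element_available(name: str) -> bool:
    """True if gst-inspect-1.0 is installed and knows the element `name`."""
    tool = shutil.which("gst-inspect-1.0")
    if tool is None:
        return False
    result = subprocess.run(
        [tool, name], stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL
    )
    return result.returncode == 0


if __name__ == "__main__":
    for codec, (encoder, _) in ENCODERS.items():
        print(codec, encoder, element_available(encoder))
```

On a Jetson, element_available("nvv4l2h265enc") should return True when the H.265 encoder plugin is installed; on a machine without GStreamer it simply returns False.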

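| Component | Function |
|---|---|
| Plugin | A processing block (element) such as a camera source, converter, encoder, or display sink; elements are linked in a chain |
| Bin (BIN) | A container that groups several linked elements so they can be handled as a single element |
| Pipeline (PIPELINE) | The top-level bin; it supplies the clock and controls the state (playing, paused, stopped) of all the elements it contains |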

Prototyping GStreamer pipelines

Integrating GStreamer into an application normally requires programming in C or Python. However, the gst-launch-1.0 command lets you prototype GStreamer pipelines on the command line.
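gst-launch-1.0 takes the pipeline description as ordinary command-line arguments, with `!` linking one element to the next. If you want to drive such prototypes from a script instead of typing them, a minimal sketch like the following builds the argument list in Python. gst_launch_argv and run are hypothetical helpers; each list item is split the way a shell would split it (shlex), and because no shell is involved, caps strings containing parentheses need no extra quoting.

```python
import shlex
import subprocess


def gst_launch_argv(elements, eos_on_shutdown=True):
    """Build an argv list for gst-launch-1.0 from element/caps descriptions."""
    argv = ["gst-launch-1.0"]
    if eos_on_shutdown:
        argv.append("-e")  # on Ctrl-C, send EOS first so muxers can finish files
    for i, element in enumerate(elements):
        if i > 0:
            argv.append("!")  # '!' links consecutive elements
        argv.extend(shlex.split(element))
    return argv


def run(elements):
    """Run the pipeline and return gst-launch-1.0's exit code."""
    return subprocess.run(gst_launch_argv(elements)).returncode


if __name__ == "__main__":
    # videotestsrc and fakesink are standard elements, handy for a quick check.
    argv = gst_launch_argv(["videotestsrc num-buffers=30", "fakesink"])
    print(" ".join(shlex.quote(a) for a in argv))
```

On a machine with GStreamer, run(["videotestsrc num-buffers=30", "fakesink"]) should exit with code 0 after 30 frames.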

To make this kind of prototyping easy to try, we have prepared shell scripts that generate sample commands. Download them from the following GitHub repository and use them.

https://github.com/MACNICA-CLAVIS-NV/nvgst-venc-pipes

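See the description in the GitHub repository for detailed usage of the scripts; for now, let's just try them.

Encoding images from a V4L2 camera and saving them to a file

```shell
$ ./v4l2cam_enc.sh
```

This script captures images from a V4L2-compliant camera such as a webcam, encodes them with the H.264 codec, and saves the resulting bitstream to a file in MP4 format. A command option switches the codec to H.265. While it runs, the images being captured from the camera are displayed.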

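Press Ctrl-C to stop. The generated command passes the -e option to gst-launch-1.0, so Ctrl-C first sends an end-of-stream (EOS) event through the pipeline, which lets qtmux finalize the MP4 file.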

The command generated by this script is saved to the file pipeline.txt.
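Because the generated command is saved as text, it can be reused outside the script. The Python sketch below is one possible approach, not part of the repository, and assumes pipeline.txt holds a single gst-launch-1.0 command: it splits the command the way a shell would, collects the properties of the nvv4l2*enc element, and estimates output size and I-frame spacing from bitrate and iframeinterval. The estimate assumes constant bitrate (the generated commands set control-rate=1, constant bitrate) and ignores container overhead; encoder_properties and estimate are hypothetical names.

```python
import os
import shlex


def encoder_properties(command: str) -> dict:
    """Return the element name and key=value properties of the first
    nvv4l2*enc element in a gst-launch-1.0 command line."""
    props = {}
    inside = False
    for token in shlex.split(command):
        if token == "!":
            if inside:
                break  # the next element starts: encoder properties are done
            continue
        if not inside:
            if token.startswith("nvv4l2") and token.endswith("enc"):
                inside = True
                props["element"] = token
            continue
        key, sep, value = token.partition("=")
        if sep:
            props[key] = value
    return props


def estimate(props: dict, fps: float):
    """Return (megabytes per minute, seconds between I-frames)."""
    bitrate = int(props["bitrate"])  # bits per second
    mb_per_min = bitrate * 60 / 8 / 1_000_000
    iframe_period_s = int(props["iframeinterval"]) / fps
    return mb_per_min, iframe_period_s


if __name__ == "__main__":
    if os.path.exists("pipeline.txt"):
        with open("pipeline.txt") as f:
            command = f.read()
        props = encoder_properties(command)
        print(props["element"], estimate(props, fps=30))
        # To rerun the saved pipeline: subprocess.run(shlex.split(command))
    else:
        print("pipeline.txt not found; run ./v4l2cam_enc.sh first")
```

With the settings in the command below (bitrate=4000000, iframeinterval=30, framerate 30/1), this gives roughly 30 MB per minute and one I-frame per second.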

```shell
gst-launch-1.0 -e v4l2src device=/dev/video0 ! "video/x-raw, width=(int)640, height=(int)480, framerate=(fraction)30/1" ! videoconvert ! "video/x-raw, format=(string)NV12" ! nvvidconv ! "video/x-raw(memory:NVMM), format=(string)NV12" ! tee name=t t. ! queue ! nvv4l2h264enc bitrate=4000000 control-rate=1 iframeinterval=30 bufapi-version=false peak-bitrate=0 quant-i-frames=4294967295 quant-p-frames=4294967295 quant-b-frames=4294967295 preset-level=1 qp-range="0,51:0,51:0,51" vbv-size=4000000 MeasureEncoderLatency=false ratecontrol-enable=true maxperf-enable=false idrinterval=256 profile=0 insert-vui=false insert-sps-pps=false insert-aud=false num-B-Frames=0 disable-cabac=false bit-packetization=false SliceIntraRefreshInterval=0 EnableTwopassCBR=false EnableMVBufferMeta=false slice-header-spacing=0 num-Ref-Frames=1 poc-type=0 ! h264parse ! qtmux ! filesink location=output.mp4 t. ! queue ! nvegltransform ! nveglglessink
```

Encoding images from a V4L2 camera and displaying the decoded result in real time

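```shell
$ ./v4l2cam_encdec.sh
```

This time, the bitstream produced by the encoder is passed through shared memory to a receiving GStreamer pipeline, which decodes it and displays it in real time.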
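The sender and the receiver only have to agree on the shmsink/shmsrc socket-path. The minimal Python sketch below, modeled on the commands the script generates (shown below), produces a matching pair of pipeline descriptions and launches them; shm_pair and launch are hypothetical helpers, and source_head stands for whatever camera part precedes the encoder. On the sender, h264parse config-interval=1 re-inserts the SPS/PPS headers into the stream every second (the encoder itself runs with insert-sps-pps=false), so a receiver that attaches later can still start decoding.

```python
import shlex
import subprocess
import time


def shm_pair(source_head: str, socket_path: str = "/tmp/foo",
             shm_size: int = 268435456):
    """Return (sender, receiver) pipeline descriptions sharing one socket."""
    sender = (
        f"{source_head} ! queue ! nvv4l2h264enc bitrate=4000000 ! "
        # config-interval=1: re-insert SPS/PPS every second for late joiners
        "h264parse config-interval=1 ! "
        f"shmsink socket-path={socket_path} wait-for-connection=false "
        f"shm-size={shm_size}"
    )
    receiver = (
        f"shmsrc socket-path={socket_path} is-live=true ! h264parse ! queue ! "
        "nvv4l2decoder ! nvegltransform ! nveglglessink"
    )
    return sender, receiver


def launch(sender: str, receiver: str, delay_s: float = 1.0):
    """Start the sender first so the socket exists, then the receiver."""
    tx = subprocess.Popen(["gst-launch-1.0", "-e", *shlex.split(sender)])
    time.sleep(delay_s)  # crude wait; assumes the socket appears within delay_s
    rx = subprocess.Popen(["gst-launch-1.0", "-e", *shlex.split(receiver)])
    return tx, rx
```

For a CSI camera, for example: launch(*shm_pair('nvarguscamerasrc sensor-id=0 ! "video/x-raw(memory:NVMM), width=640, height=480, format=NV12, framerate=(fraction)30/1"')).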

Command run on the sending side (launched automatically by v4l2cam_encdec.sh)

```shell
gst-launch-1.0 -e v4l2src device=/dev/video0 ! "video/x-raw, width=(int)640, height=(int)480, framerate=(fraction)30/1" ! videoconvert ! "video/x-raw, format=(string)NV12" ! nvvidconv ! "video/x-raw(memory:NVMM), format=(string)NV12" ! queue ! nvv4l2h264enc bitrate=4000000 control-rate=1 iframeinterval=30 bufapi-version=false peak-bitrate=0 quant-i-frames=4294967295 quant-p-frames=4294967295 quant-b-frames=4294967295 preset-level=1 qp-range="0,51:0,51:0,51" vbv-size=4000000 MeasureEncoderLatency=false ratecontrol-enable=true maxperf-enable=false idrinterval=256 profile=0 insert-vui=false insert-sps-pps=false insert-aud=false num-B-Frames=0 disable-cabac=false bit-packetization=false SliceIntraRefreshInterval=0 EnableTwopassCBR=false EnableMVBufferMeta=false slice-header-spacing=0 num-Ref-Frames=1 poc-type=0 ! h264parse config-interval=1 ! shmsink socket-path=/tmp/foo wait-for-connection=false shm-size=268435456
```

Command run on the receiving side (launched automatically by v4l2cam_encdec.sh)

```shell
GST_SHARK_CTF_DISABLE=TRUE GST_DEBUG="*:0" GST_TRACERS="" gst-launch-1.0 -e shmsrc socket-path=/tmp/foo is-live=true ! h264parse ! queue ! nvv4l2decoder ! nvegltransform ! nveglglessink
```

Encoding images from a CSI camera and saving them to a file

```shell
$ ./arguscam_enc.sh
```

The command executed is as follows.

```shell
gst-launch-1.0 -e nvarguscamerasrc sensor-id=0 ! "video/x-raw(memory:NVMM), width=640, height=480, format=NV12, framerate=(fraction)30/1" ! tee name=t t. ! queue ! nvv4l2h264enc bitrate=4000000 control-rate=1 iframeinterval=30 bufapi-version=false peak-bitrate=0 quant-i-frames=4294967295 quant-p-frames=4294967295 quant-b-frames=4294967295 preset-level=1 qp-range="0,51:0,51:0,51" vbv-size=4000000 MeasureEncoderLatency=false ratecontrol-enable=true maxperf-enable=false idrinterval=256 profile=0 insert-vui=false insert-sps-pps=false insert-aud=false num-B-Frames=0 disable-cabac=false bit-packetization=false SliceIntraRefreshInterval=0 EnableTwopassCBR=false EnableMVBufferMeta=false slice-header-spacing=0 num-Ref-Frames=1 poc-type=0 ! h264parse ! qtmux ! filesink location=output.mp4 t. ! queue ! nvegltransform ! nveglglessink
```

Encoding images from a CSI camera and displaying the decoded result in real time

```shell
$ ./arguscam_encdec.sh
```

The commands executed are as follows.

Command run on the sending side (launched automatically by arguscam_encdec.sh)

```shell
gst-launch-1.0 -e nvarguscamerasrc sensor-id=0 ! "video/x-raw(memory:NVMM), width=640, height=480, format=NV12, framerate=(fraction)30/1" ! queue ! nvv4l2h264enc bitrate=4000000 control-rate=1 iframeinterval=30 bufapi-version=false peak-bitrate=0 quant-i-frames=4294967295 quant-p-frames=4294967295 quant-b-frames=4294967295 preset-level=1 qp-range="0,51:0,51:0,51" vbv-size=4000000 MeasureEncoderLatency=false ratecontrol-enable=true maxperf-enable=false idrinterval=256 profile=0 insert-vui=false insert-sps-pps=false insert-aud=false num-B-Frames=0 disable-cabac=false bit-packetization=false SliceIntraRefreshInterval=0 EnableTwopassCBR=false EnableMVBufferMeta=false slice-header-spacing=0 num-Ref-Frames=1 poc-type=0 ! h264parse config-interval=1 ! shmsink socket-path=/tmp/foo wait-for-connection=false shm-size=268435456
```

Command run on the receiving side (launched automatically by arguscam_encdec.sh)

```shell
GST_SHARK_CTF_DISABLE=TRUE GST_DEBUG="*:0" GST_TRACERS="" gst-launch-1.0 -e shmsrc socket-path=/tmp/foo is-live=true ! h264parse ! queue ! nvv4l2decoder ! nvegltransform ! nveglglessink
```

GStreamer API

After prototyping on the command line, integrate the pipeline into your application with the GStreamer API.

NVIDIA DeepStream SDK is built on GStreamer, so information about the DeepStream SDK is also a useful reference.
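As a minimal sketch of that step, assuming the PyGObject bindings for GStreamer (python3-gi with the Gst 1.0 typelib) are installed, the CSI camera recording pipeline can be run from Python with Gst.parse_launch, which accepts the same description syntax as gst-launch-1.0. run_pipeline is a hypothetical helper; it imitates gst-launch-1.0 -e by sending an EOS event on Ctrl-C and waiting for EOS (or an error) on the bus before stopping the pipeline.

```python
import signal

# Same description syntax as gst-launch-1.0 (no shell quoting needed here)
PIPELINE = (
    "nvarguscamerasrc sensor-id=0 ! "
    "video/x-raw(memory:NVMM), width=640, height=480, format=NV12, "
    "framerate=(fraction)30/1 ! "
    "nvv4l2h264enc bitrate=4000000 ! h264parse ! qtmux ! "
    "filesink location=output.mp4"
)


def run_pipeline(description: str) -> None:
    """Run a pipeline description until EOS or error, like gst-launch-1.0 -e."""
    import gi  # imported here so the module loads even without GStreamer

    gi.require_version("Gst", "1.0")
    from gi.repository import Gst

    Gst.init(None)
    pipeline = Gst.parse_launch(description)

    # Ctrl-C: push EOS through the pipeline so qtmux can finalize the MP4
    def on_sigint(signum, frame):
        pipeline.send_event(Gst.Event.new_eos())

    signal.signal(signal.SIGINT, on_sigint)
    pipeline.set_state(Gst.State.PLAYING)
    bus = pipeline.get_bus()
    try:
        while True:
            # Short timeout (in ns) so Python gets a chance to run on_sigint
            msg = bus.timed_pop_filtered(
                100_000_000, Gst.MessageType.EOS | Gst.MessageType.ERROR
            )
            if msg is None:
                continue
            if msg.type == Gst.MessageType.ERROR:
                err, debug = msg.parse_error()
                raise RuntimeError(f"{err.message} ({debug})")
            break  # EOS reached: all data has been written
    finally:
        pipeline.set_state(Gst.State.NULL)
```

On the Jetson, call run_pipeline(PIPELINE) and stop it with Ctrl-C. The bounded bus timeout is a deliberate choice: a Python signal handler only runs when control returns to the interpreter, so an infinite wait would never see Ctrl-C.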

Using GStreamer from OpenCV

The default OpenCV installed by JetPack is not built with Jetson-specific hardware acceleration enabled. However, by using GStreamer as the backend of OpenCV's VideoWriter, you can use fast NVENC video encoding on Jetson from OpenCV.
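This only works if the OpenCV build has its GStreamer backend compiled in. cv2.getBuildInformation() returns a text report that includes a GStreamer entry under "Video I/O", and the sketch below checks it. The report layout varies between OpenCV versions, so the parsing here (a single line such as "GStreamer: YES" followed by a version) is an assumption, and gstreamer_enabled is a hypothetical helper.

```python
import re


def gstreamer_enabled(build_info: str) -> bool:
    """True if an OpenCV build report says the GStreamer backend is enabled."""
    # Match "GStreamer:" and the first word after it on the same line.
    match = re.search(r"^[ \t]*GStreamer:[ \t]*(\S+)", build_info, re.MULTILINE)
    return match is not None and match.group(1).upper() == "YES"


if __name__ == "__main__":
    try:
        import cv2
    except ImportError:
        print("OpenCV (cv2) is not installed")
    else:
        print("GStreamer backend:", gstreamer_enabled(cv2.getBuildInformation()))
```

If this reports False, cv2.VideoWriter will fail to open the GStreamer pipeline string, and the writer's isOpened() returns False.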

The appsrc plugin receives the stream data (frames) from OpenCV and feeds it into the GStreamer pipeline.

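```python
import sys

import cv2

FILE = 'test.mp4'
WIDTH = 640
HEIGHT = 480
FPS = 30

# appsrc receives the BGR frames written by OpenCV; videoconvert/nvvidconv
# move them into NVMM memory for the NVENC encoder nvv4l2h264enc.
GST_COMMAND = (
    'appsrc ! videoconvert ! nvvidconv ! '
    'video/x-raw(memory:NVMM), format=(string)I420 ! '
    'nvv4l2h264enc bitrate=10000000 ! '
    'video/x-h264, stream-format=(string)byte-stream ! '
    'h264parse ! qtmux ! filesink location={}'.format(FILE)
)

cam_id = 0
cap = cv2.VideoCapture(cam_id)
if not cap.isOpened():
    print("Cannot open camera")
    sys.exit(1)
cap.set(cv2.CAP_PROP_FRAME_WIDTH, WIDTH)
cap.set(cv2.CAP_PROP_FRAME_HEIGHT, HEIGHT)

# Select the GStreamer backend explicitly; fourcc is 0 because the
# pipeline string already specifies the encoder.
out = cv2.VideoWriter(GST_COMMAND, cv2.CAP_GSTREAMER, 0, FPS, (WIDTH, HEIGHT))
if not out.isOpened():
    print("Cannot open GStreamer pipeline")
    cap.release()
    sys.exit(1)

while True:
    ret, frame = cap.read()
    if not ret:
        break
    # Every frame must match the size given to VideoWriter
    if (frame.shape[1], frame.shape[0]) != (WIDTH, HEIGHT):
        frame = cv2.resize(frame, (WIDTH, HEIGHT))
    cv2.imshow("Test", frame)
    out.write(frame)
    if cv2.waitKey(1) & 0xFF == 27:  # ESC
        break

# Releasing the writer sends EOS so qtmux can finalize the MP4 file
out.release()
cap.release()
cv2.destroyAllWindows()
```

Using the Multimedia API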

The V4L2 Video Encoder in the Jetson Multimedia API can also encode video with NVENC.

For usage, refer to the sample code included in JetPack. The sample code is located at the following path on the Jetson:

/usr/src/jetson_multimedia_api/samples
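As one example, the encoder sample in that directory (01_video_encode, built as video_encode) reads raw YUV 4:2:0 (I420) frames from a file. The Python sketch below computes the I420 frame size, checks that a raw file holds a whole number of frames, and assembles the command line. The positional argument order `<in-file> <in-width> <in-height> <encoder-type> <out-file>` reflects our reading of the sample's README, so please confirm it against the README shipped with your JetPack version; SUPPORTED, i420_frame_bytes, frames_in and video_encode_argv are hypothetical helpers.

```python
# Encoder types accepted by the sample (assumed; module support varies)
SUPPORTED = {"H264", "H265", "VP8", "VP9"}


def i420_frame_bytes(width: int, height: int) -> int:
    """Bytes in one I420 frame: full Y plane plus U and V at quarter size.
    Assumes even width and height."""
    return width * height * 3 // 2


def frames_in(file_size: int, width: int, height: int) -> int:
    """Number of whole I420 frames in a raw file; raises on leftover bytes."""
    frame = i420_frame_bytes(width, height)
    count, rest = divmod(file_size, frame)
    if rest:
        raise ValueError(f"{rest} trailing bytes: wrong size or format?")
    return count


def video_encode_argv(in_file, width, height, codec, out_file):
    """Command line for the video_encode sample (argument order assumed)."""
    codec = codec.upper()
    if codec not in SUPPORTED:
        raise ValueError(f"unsupported encoder type: {codec}")
    return ["./video_encode", in_file, str(width), str(height), codec, out_file]
```

For a 640x480 source, each frame is 460800 bytes, so frames_in(os.path.getsize("in.yuv"), 640, 480) tells you how many frames the sample will encode.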

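Next time: video decoding!

How did you find Part 6 of the series "Jetson Video Processing Programming", on video encoding?

Next time, we will cover video decoding.

Contact us if you need help

We offer selection of and support for hardware such as NVIDIA GPU cards and GPU workstations, as well as algorithms for face recognition, people-flow analysis, and skeleton (pose) detection, and services for building training environments. If you need help, please contact us.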