Last time, I built an AI demo that detects people on an FPGA and checked its operation and power consumption. Seeing it actually work, and at such low power, has boosted my motivation for what comes next.

Before that motivation slips away...

【table of contents】

1. Download Reference Design

2. Lattice AI Solution Development Flow

3. A closer look at the neural network model training environment

Download Reference Design

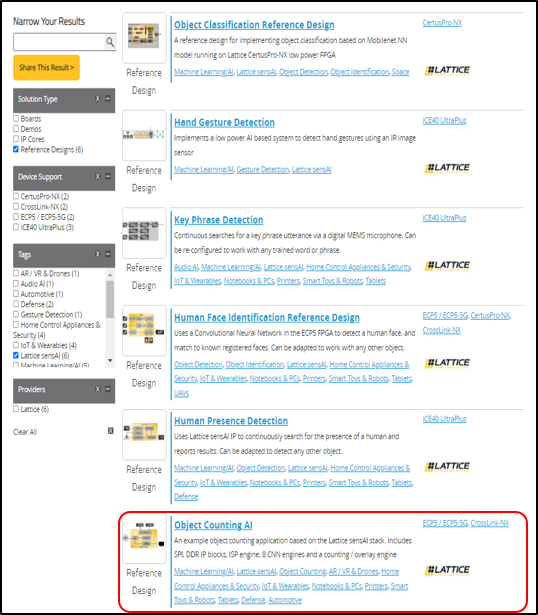

Nothing can start until the reference design is downloaded, so that comes first. As introduced in the previous article, Lattice provides several types of reference designs. From these, select "Object Counting AI".

* Reference design introduction site URL

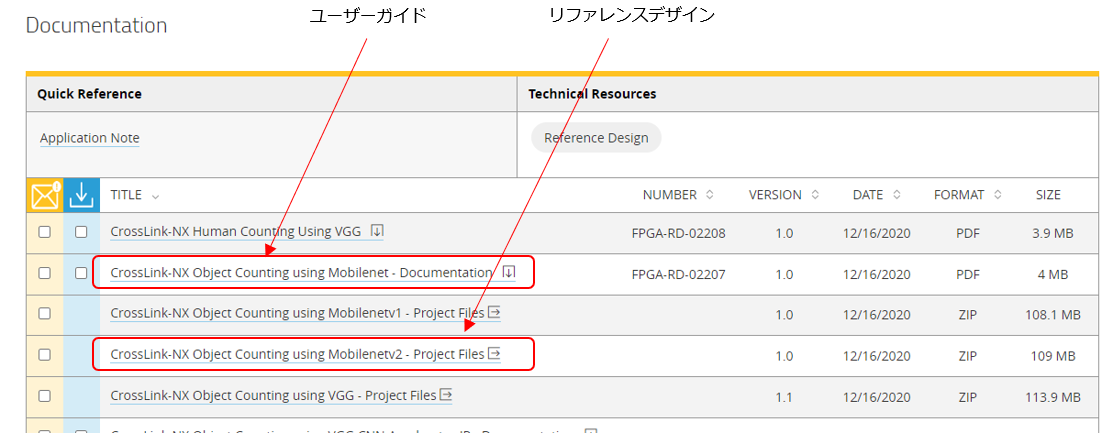

Download links for the documents and reference designs are at the bottom of the site. This time I will download the following items for the reference design "CrossLink-NX Object Counting using Mobilenetv2":

* CrossLink-NX Object Counting Reference Design User Guide

* CrossLink-NX Object Counting Reference Design project file

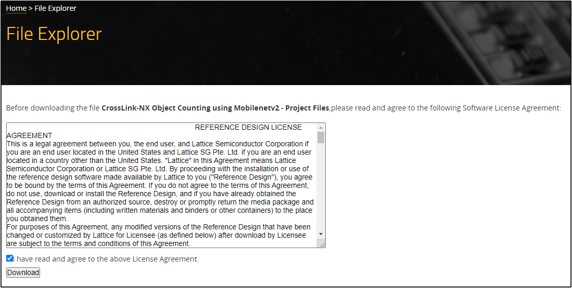

When downloading the project file, you are asked to tick a checkbox confirming that you agree to the License Agreement, so read the agreement before downloading.

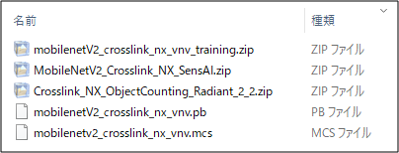

Running the download fetches a zip file. Unzipping it produces the following files, including three more zip files. These three zip files appear to be closely tied to the development flow of Lattice's AI solutions.
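The unzip step described above, including the three inner archives, could be scripted roughly as follows. This is only a minimal sketch using Python's standard `zipfile` module; the function name `extract_nested` and the archive name in the usage comment are hypothetical and do not come from the reference design.

```python
import zipfile
from pathlib import Path


def extract_nested(archive: Path, dest: Path) -> list[Path]:
    """Extract `archive` into `dest`, then extract every .zip found inside it.

    Returns the directories created for the inner archives.
    """
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(archive) as outer:
        outer.extractall(dest)

    inner_dirs = []
    # sorted() materializes the list first, so zips that appear while
    # extracting the inner archives are not picked up in this pass.
    for inner in sorted(dest.rglob("*.zip")):
        target = inner.with_suffix("")  # foo.zip -> foo/
        with zipfile.ZipFile(inner) as zf:
            zf.extractall(target)
        inner_dirs.append(target)
    return inner_dirs


# Usage (hypothetical file name for the downloaded archive):
# for d in extract_nested(Path("reference_design.zip"), Path("reference_design")):
#     print(d)
```

Each inner archive is unpacked into a folder named after it, which keeps the three environments separate.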

Lattice's AI solution development flow

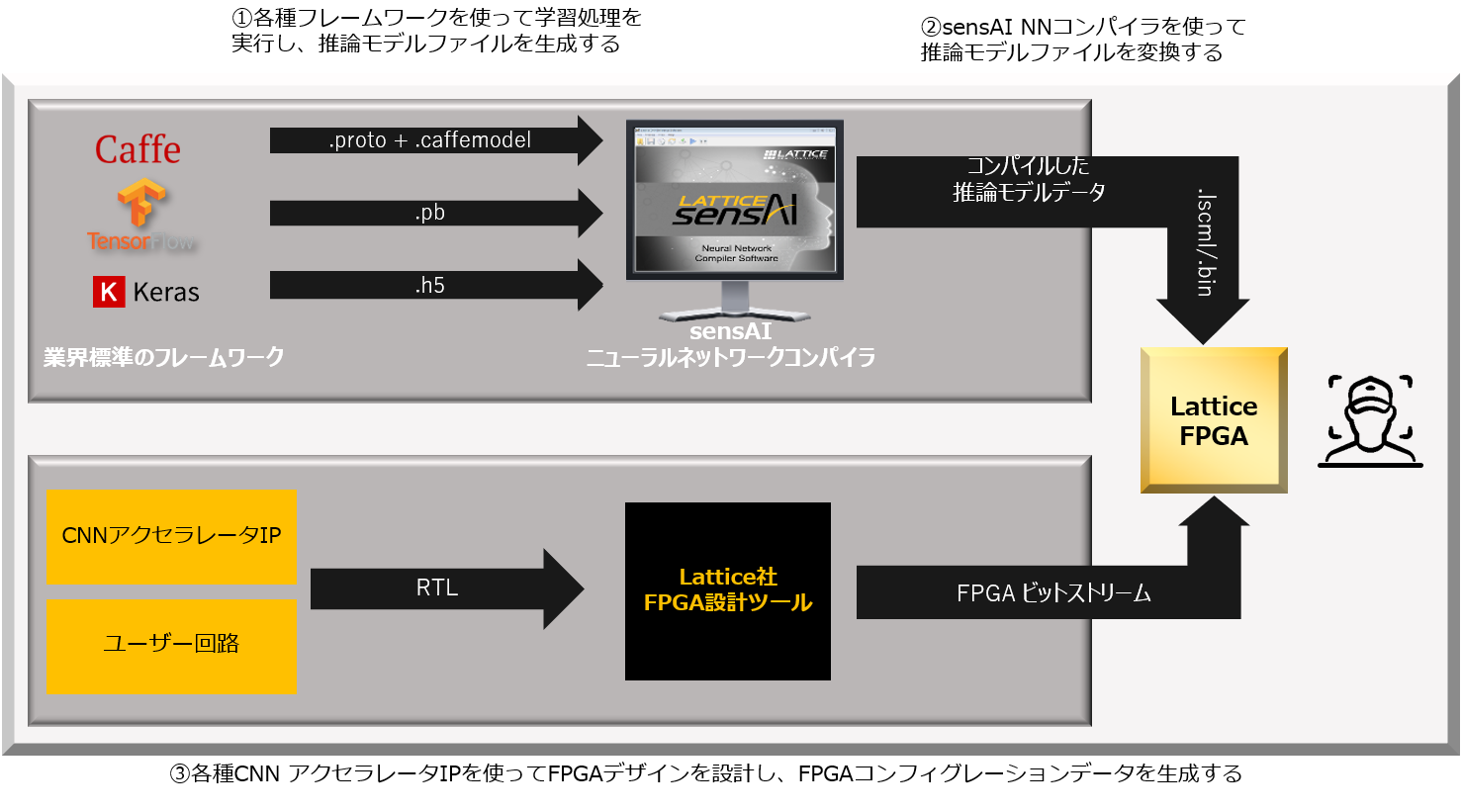

The development flow of Lattice's AI solution is shown in the diagram. The three zip files mentioned above seem to correspond to parts ①, ②, and ③ of this flow.

The upper part of the flow is the neural network model development flow. Neural network models are created with industry-standard frameworks. Lattice provides a tool called the sensAI Neural Network Compiler (hereinafter, the sensAI Compiler). Compiling a trained neural network model with this tool seems to convert it into a form that the FPGA can interpret.

The lower part of the flow is the development flow for FPGA circuit design. The CNN accelerator IP provided as part of Lattice's AI solution serves as the engine for the AI processing. It is designed into the FPGA, together with the surrounding user circuitry, using Lattice's FPGA design tool. Once both the neural network model information and the CNN accelerator IP are embedded in the FPGA, it seems that AI inference is executed on whatever input data is supplied.

Running this flow requires three environments:

① A neural network model training environment (training dataset, Python scripts, etc.)

② An environment for compiling the generated trained model with the sensAI Compiler

③ An environment for generating FPGA configuration data (RTL source code, etc.)

The zip file downloaded earlier seems to contain each of these environments, compressed as its own zip file.

① mobilenetV2_crosslink_nx_vnv_training.zip

A training environment for neural network models. It contains the set of files needed to generate a trained neural network model.

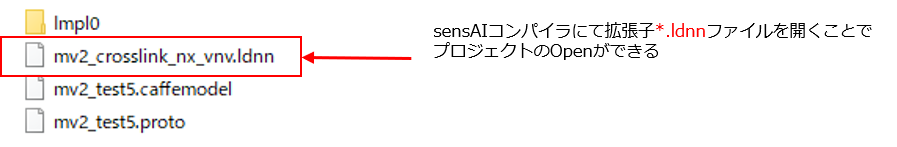

② MobileNetV2_Crosslink_NX_SensAI.zip

Contains the sensAI Compiler project file. You can inspect the project by opening the *.ldnn file in the sensAI Compiler.

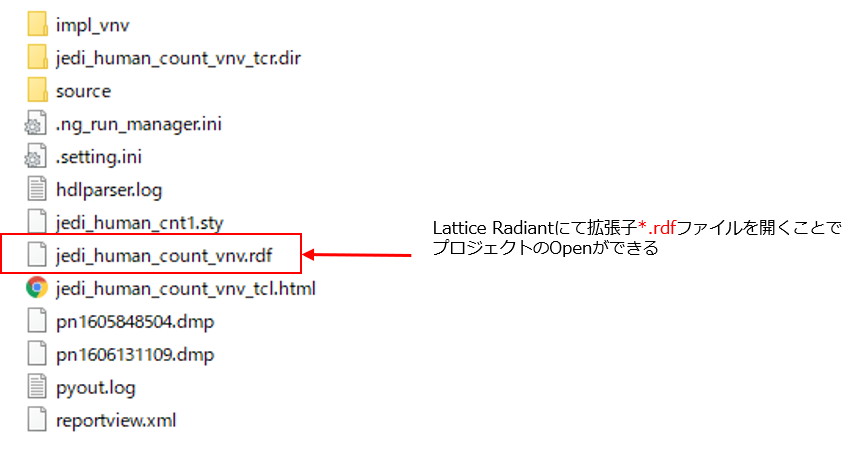

③ Crosslink_NX_ObjectCounting_Radiant_2_2.zip

Contains the source code and other files for generating FPGA configuration data. It is provided as a set of project files for Lattice Radiant (Lattice's FPGA design tool). You can inspect the project by opening the *.rpf file in Radiant.

Lattice provides many other AI reference designs, and most of them seem to share this same file structure.

In addition, the reference design user guide describes neural network model training on the assumption that it is performed on an Ubuntu Linux machine.

A closer look at the training environment for neural network models

Of these, I took a closer look at ①, the neural network model training environment, which I was most curious about.

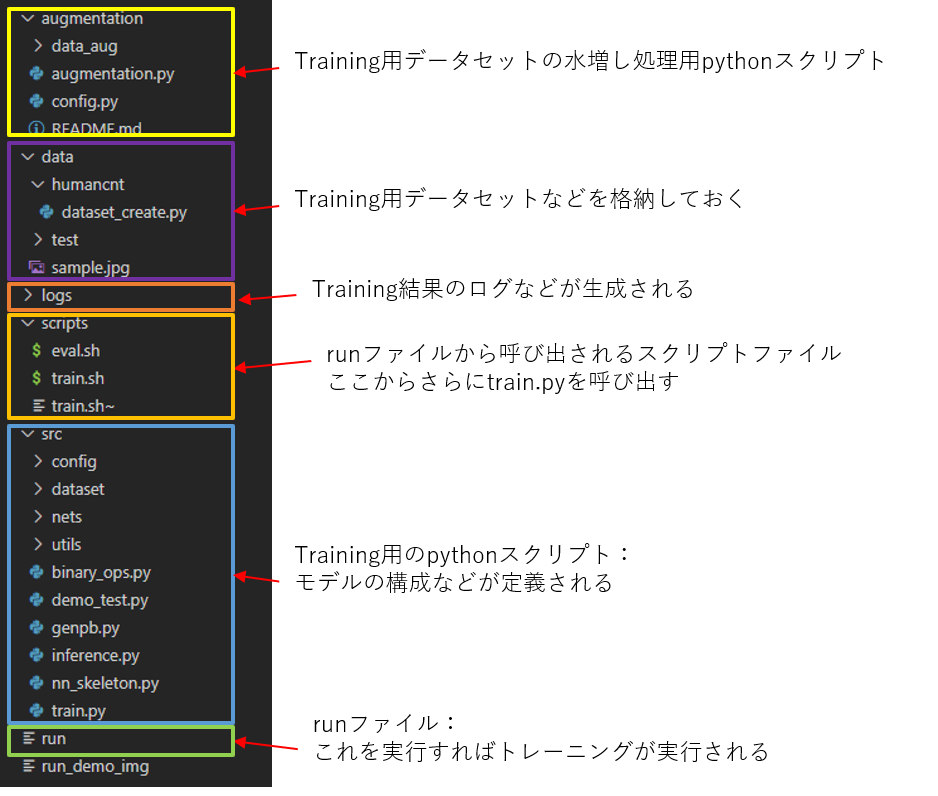

It seems that the folder structure is as follows.

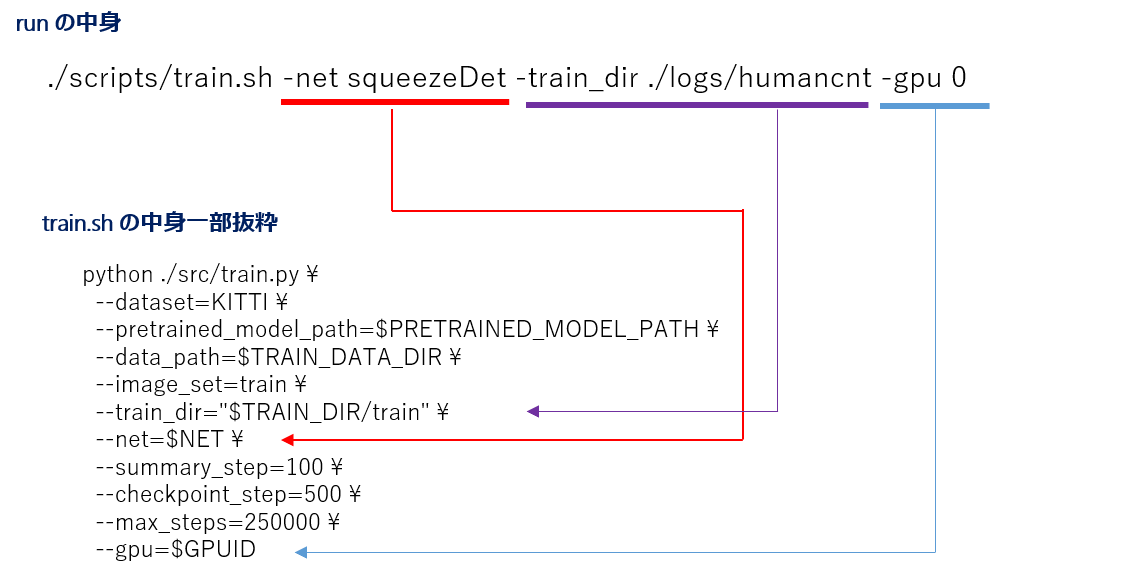

Training itself seems to be started by executing the run script in this folder. The run script reads train.sh, and train.sh in turn executes train.py.

However, training a neural network model should require a large amount of training data... So I checked the reference design materials carefully and found a chapter called "Preparing the Dataset"! This human detection reference design seems to use Open Images Dataset V4, which Google provides free of charge, as its training data.

Google Open Images Dataset V4

Google Open Images Dataset V4 seems to be a collection of more than 9 million images in total. Each image is paired with labels indicating what the objects in it are and with their coordinate information. The latest version is v6, but this reference design uses v4. There are 600 object types (classes) in total, and the human detection reference design uses data from the "Person" class. All of this is provided free of charge! As expected of Google.
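To make the "label plus coordinates" pairing concrete, here is a rough sketch of picking out only the "Person" boxes from a box-annotation CSV. Everything specific in it is an assumption for illustration: the sample rows are made up, the column names follow what I understand the Open Images box-annotation files to use (only a subset is shown), and the label ID for "Person" should be checked against the dataset's class description file. The helper name `boxes_for_class` is hypothetical.

```python
import csv
import io

# Made-up sample rows. The column names are assumed to match a subset of
# the columns in an Open Images box-annotation CSV.
SAMPLE_ANNOTATIONS = """ImageID,LabelName,XMin,XMax,YMin,YMax
img001,/m/01g317,0.10,0.45,0.20,0.90
img001,/m/0k4j,0.50,0.95,0.40,0.80
img002,/m/01g317,0.30,0.60,0.10,0.70
"""

# Assumed label ID for the "Person" class; verify it in the class
# description file before relying on it.
PERSON_LABEL = "/m/01g317"


def boxes_for_class(csv_file, label):
    """Return {image_id: [(xmin, xmax, ymin, ymax), ...]} for one class label."""
    boxes = {}
    for row in csv.DictReader(csv_file):
        if row["LabelName"] != label:
            continue  # skip boxes that belong to other classes
        box = tuple(float(row[k]) for k in ("XMin", "XMax", "YMin", "YMax"))
        boxes.setdefault(row["ImageID"], []).append(box)
    return boxes


person_boxes = boxes_for_class(io.StringIO(SAMPLE_ANNOTATIONS), PERSON_LABEL)
for image_id, image_boxes in person_boxes.items():
    print(image_id, image_boxes)
```

With a real annotation file, the `io.StringIO` object would be replaced by an opened file handle. The coordinates here are treated as values normalized to the image size, which is also an assumption.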

The dataset can apparently be downloaded from the Open Images Dataset site or GitHub, but if you want only the data for a specific class, it seems easier to use the Python script provided by Open Images Dataset. Also, the run script mentioned above needs to execute shell scripts such as train.sh, so it seems difficult to run as is in a Windows environment. Given that, it seems better to set up a proper Linux environment first.

Next time, I would like to investigate how to build the training environment on a Linux machine.

Inquiry

Please feel free to contact us if you have any questions about the evaluation board or sample design, or if there is anything you would like us to cover in this blog!