NVIDIA Data Center GPU

NVIDIA Data Center GPUs accelerate demanding workloads such as HPC, enabling data scientists and researchers to analyze petabytes of data, in applications ranging from energy exploration to deep learning, much faster than is possible with traditional CPUs.

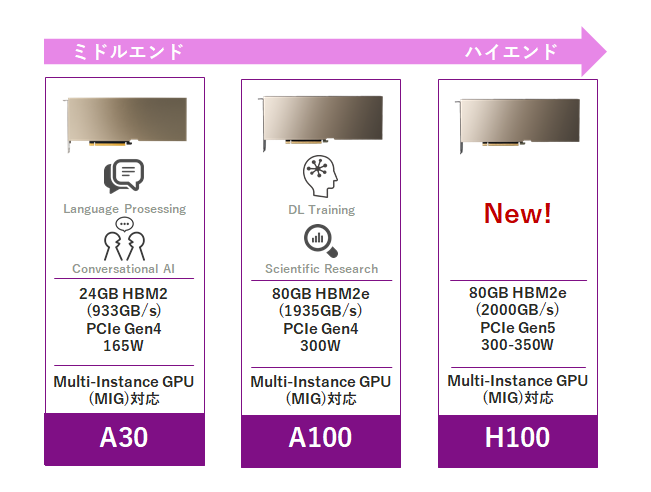

NVIDIA Data Center GPU Product List

NVIDIA Data Center GPUs come in two product lines, one for computing and one for graphics.

For computing

Computing products support Multi-Instance GPU (MIG), which allows a single GPU to be partitioned into up to seven instances (on NVIDIA A100 and NVIDIA H100).
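As a rough illustration of what "up to seven instances" means in practice, the sketch below checks whether a requested mix of instances fits on one GPU. It assumes the slice model described in NVIDIA's MIG documentation (seven compute slices and eight memory slices per GPU) and the profile names used on 80GB parts; the `MigProfile` class and `fits` function are hypothetical helpers, not part of any NVIDIA tool, and the check ignores the placement rules that real MIG enforces.

```python
from dataclasses import dataclass

COMPUTE_SLICES = 7  # maximum number of instances per GPU, as stated in the text
MEMORY_SLICES = 8   # assumption: memory is divided into eighths


@dataclass(frozen=True)
class MigProfile:
    """Hypothetical description of one MIG instance profile."""
    name: str
    compute_slices: int
    memory_slices: int


# Assumed profiles for an 80GB GPU (names follow NVIDIA's <compute>g.<memory>gb style).
P_1G = MigProfile("1g.10gb", 1, 1)
P_3G = MigProfile("3g.40gb", 3, 4)
P_4G = MigProfile("4g.40gb", 4, 4)


def fits(profiles):
    """Capacity check only: sums slices and ignores real placement constraints."""
    compute = sum(p.compute_slices for p in profiles)
    memory = sum(p.memory_slices for p in profiles)
    return compute <= COMPUTE_SLICES and memory <= MEMORY_SLICES


print(fits([P_1G] * 7))          # seven small instances
print(fits([P_4G, P_3G]))        # one large and one medium instance
print(fits([P_3G, P_3G, P_1G]))  # exceeds the eight memory slices
```

Under these assumptions, seven `1g.10gb` instances use only seven of the eight memory slices, which is why seven small instances on an 80GB GPU expose 70GB in total rather than 80GB.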

Learn more about NVIDIA Multi-Instance GPUs

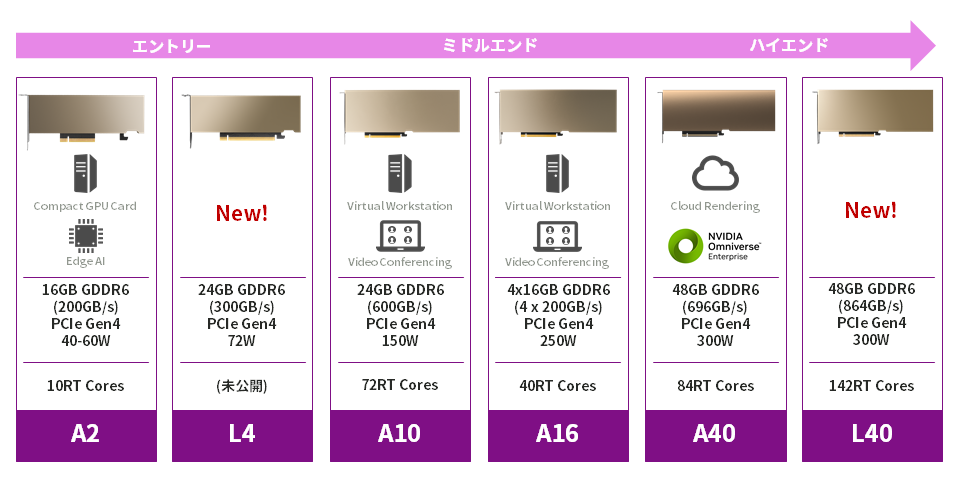

For graphics

Graphics products are equipped with the ray tracing (RT) cores found in NVIDIA RTX™ products.

What is the difference between NVIDIA Data Center GPUs and NVIDIA RTX?

Fans and cooling

NVIDIA RTX products have active cooling fans, whereas NVIDIA Data Center GPUs are passively cooled: they have no fan of their own and rely on the server itself to provide cooling. Because there is no fan on the card, there is one fewer component that can fail, so Data Center GPUs are recommended for data centers, where replacing a GPU card is difficult.

Memory size and memory bandwidth

In the AI training phase, large amounts of data are transferred to GPU memory, so memory size and memory bandwidth are very important factors. NVIDIA Data Center GPUs offer large memory capacity and high memory bandwidth, making them well suited to AI and HPC workloads. The table below compares an NVIDIA Data Center GPU with an NVIDIA RTX product.

| | NVIDIA H100 (PCIe) | NVIDIA RTX™ 6000 Ada Generation |
| --- | --- | --- |
| Memory size | 80GB HBM2e | 48GB GDDR6 |
| Memory bandwidth (GB/s) | 2000 | 960 |
| Interconnect | PCIe Gen5 | PCIe Gen4 |
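To put the bandwidth figures in the table above into perspective, the sketch below estimates how long one full pass over a working set would take at each GPU's peak memory bandwidth. The 40GB working set is a hypothetical value chosen to fit in both GPUs' memory, and `transfer_time_s` is an illustrative helper; real workloads rarely sustain peak bandwidth, so treat the results as lower bounds.

```python
# Peak memory bandwidth in GB/s, taken from the comparison table.
PEAK_BANDWIDTH_GB_S = {
    "NVIDIA H100 (PCIe)": 2000,
    "NVIDIA RTX 6000 Ada Generation": 960,
}

WORKING_SET_GB = 40  # hypothetical working set that fits in both GPUs


def transfer_time_s(size_gb, bandwidth_gb_s):
    """Idealized time to read size_gb once at the given bandwidth."""
    return size_gb / bandwidth_gb_s


for name, bandwidth in PEAK_BANDWIDTH_GB_S.items():
    millis = transfer_time_s(WORKING_SET_GB, bandwidth) * 1000
    print(f"{name}: {millis:.1f} ms per pass")
# NVIDIA H100 (PCIe): 20.0 ms per pass
# NVIDIA RTX 6000 Ada Generation: 41.7 ms per pass
```

In this idealized model the H100 (PCIe) completes each pass roughly twice as fast, which matters most for memory-bound workloads that read the same data many times.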