A Visit to SC09 (Supercomputing 2009)

SC09 (Supercomputing 2009) was held in Portland, Oregon, USA.

This report covers what I saw on site, including the latest trends in supercomputers and the exhibits at the Mellanox and Voltaire booths.

The Oregon Convention Center, where SC was held this time, is easily accessible from Portland Airport: it takes about 30 minutes by tram. Unusually for the United States, you can get around here without a car.

Downtown, where the hotels and other lodging are located, is about 10 minutes from the convention center by tram.

The exhibition itself ran for four days. As in previous years, many companies and universities exhibited, and the hall was as crowded as ever.

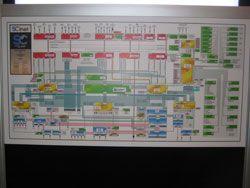

At the venue, a network capable of 100GE transmission was built by interconnecting switches and routers from the various vendors.

Products from Mellanox and Voltaire, as well as the transmission cable products our company handles, were used in equipment within this broadband network, where they were connected to InfiniBand and 10GE products from other vendors.

I used that network to access the Internet and was a little impressed that it simply worked.

The Mellanox booth

So, what was on display at the Mellanox booth?

Personally, I was most interested in the products based on Mellanox's latest ConnectX-2 chip, the 12X (120 Gbps) switch modules, and the cable products.

ConnectX-2 adds a new technology, MPI collective offload (Core Direct), and draws far less power than the existing ConnectX.

The 12X switch module is another new InfiniBand product recently released by Mellanox. It is used to link large switches to each other and build higher-bandwidth networks.

Mellanox booth highlight: 3D!

Among the demos at the Mellanox booth, a joint demonstration with a 3D application (cars) caught my eye.

It was almost like a game: high-density 3D data was displayed in real time over low-latency InfiniBand, and the user could interact with it as if sitting in a real car.

I felt that InfiniBand is reaching a level where it can be used in practical applications.

The Voltaire booth, meanwhile, exhibited not only InfiniBand products but also a large 288-port 10 Gigabit Ethernet switch (DCB compatible), its own MPI accelerator technology, and more. Like Mellanox, Voltaire drew crowds as a broadband solution vendor.

In closing

My impression is that many reports at SC described efficiency and performance gains from combining InfiniBand technology with GPUs. Mellanox and Nvidia announced GPU-Direct, a technology that uses InfiniBand to reduce overhead between GPUs and improve application performance.

http://www.nvidia.com/object/io_1258539409179.html?_templateId=320

In addition, the 3D Internet was presented as an example of an application through which HPC will come to be used in the commercial field as well as the academic one. We believe that the combination of InfiniBand and GPU technology will spread in Japan too, since it allows us to offer users significant added value.