Introduction

Hello, I'm Ushii.

Up to last time, my articles covered building a "server", which is indispensable in any environment that uses Mellanox products.

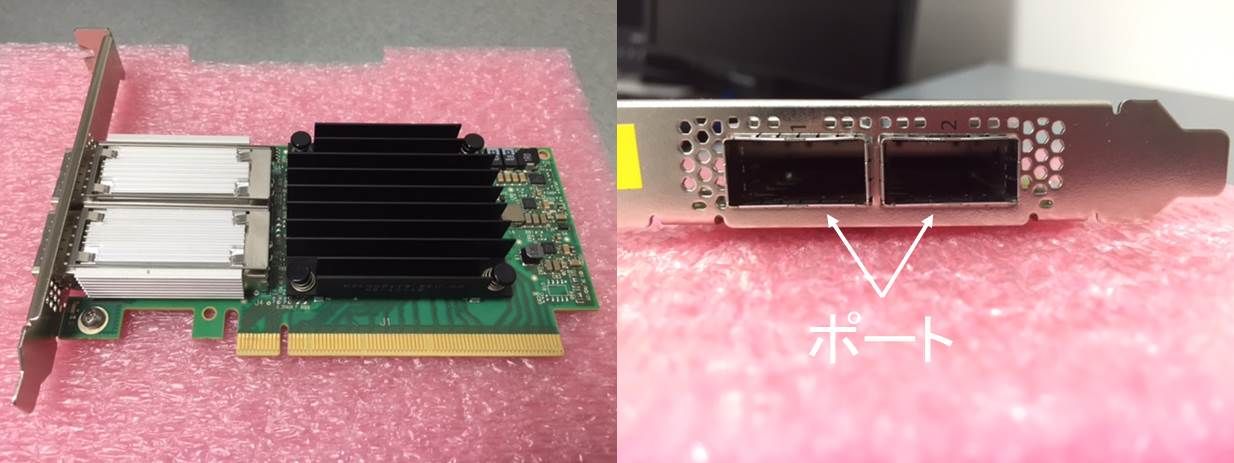

This time, I would like to write about Mellanox's NICs (Network Interface Cards).

Mellanox NIC

Mellanox develops and manufactures interconnect products such as chips, cables, NICs (Network Interface Cards) and switches.

A NIC adds a network "interface" to a server.

This time, to use my server in a 100GbE environment, I installed a Mellanox product called "ConnectX®-4" (Fig. 1) in it!

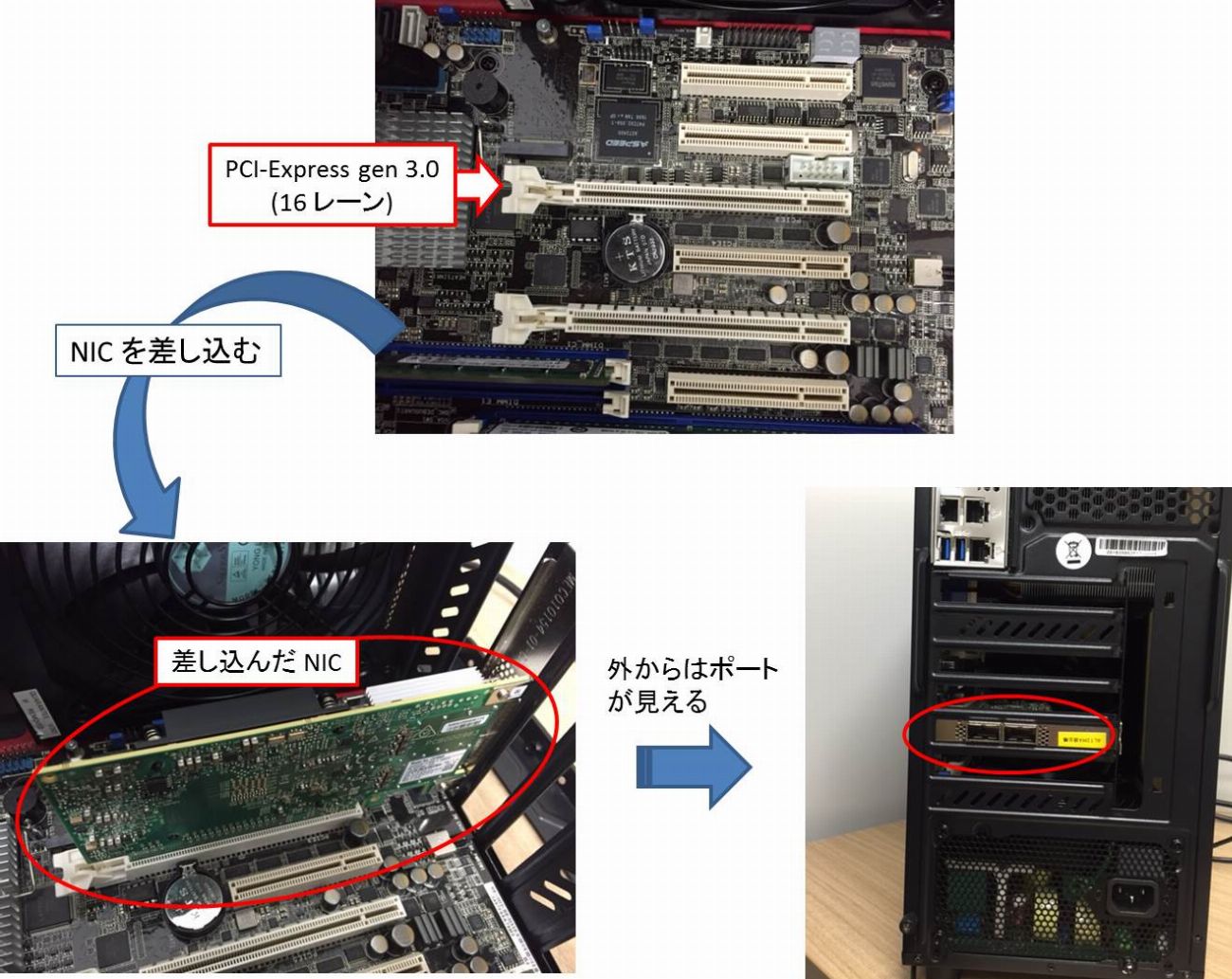

The NIC becomes usable once it is plugged into a PCI-Express slot (Fig. 2).

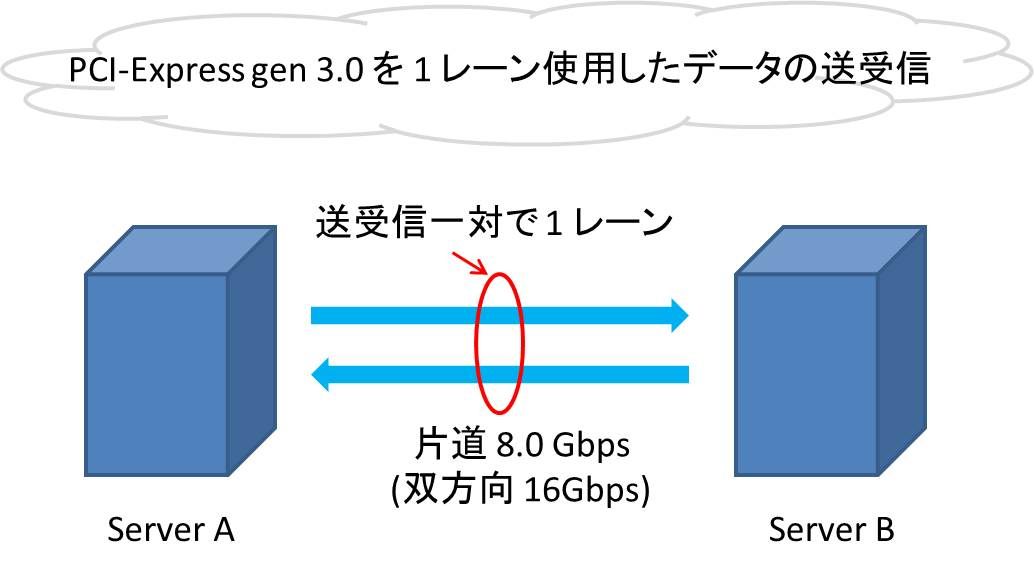

ConnectX-4 provides 100Gbps of bandwidth per port, and it needs 16 PCI-Express gen 3.0 lanes (x16). Why?

To answer that question, we need to look at how PCI-Express transfers data (Fig. 3).

PCI-Express gen 3.0 transfers data at 8.0 GT/s, roughly 8.0Gbps, per lane in each direction.

So with 16 lanes, 8.0Gbps × 16 = 128Gbps in each direction, which leaves enough headroom to build a 100GbE environment.
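As a worked equation, the usable one-way bandwidth of an x16 link stays above 100Gbps even after line encoding. The 128b/130b encoding factor is the standard one for gen 3.0 and is added here; it is not part of the original figures, and packet-level protocol overhead is ignored:

```latex
\begin{aligned}
B_{\text{raw}} &= 8.0\,\text{Gbps/lane} \times 16\,\text{lanes} = 128\,\text{Gbps} \\
B_{\text{eff}} &= 128\,\text{Gbps} \times \tfrac{128}{130} \approx 126\,\text{Gbps} > 100\,\text{Gbps}
\end{aligned}
```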

*Unfortunately, even though the card has 2 ports, only 1 port can run at the full 100GbE rate: 2 ports would need 200Gbps, which is more than a PCI-Express gen 3.0 x16 link can carry...
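A small sketch of that port arithmetic, assuming the standard gen 3.0 figures (8 GT/s per lane, 128b/130b encoding) and ignoring PCI-Express protocol overhead, which lowers real throughput further. The function names are illustrative only and do not come from any tool:

```python
# Minimal sketch: can a PCIe gen 3.0 x16 link feed both 100GbE ports at line rate?
# Assumption: gen 3.0 runs at 8 GT/s per lane with 128b/130b encoding;
# protocol overhead (packet headers etc.) is ignored, so real throughput is lower.

GEN3_GTS_PER_LANE = 8.0   # raw transfer rate per lane, per direction
GEN3_ENCODING = 128 / 130  # 128b/130b line-encoding efficiency
LANES = 16
PORT_GBPS = 100.0          # one 100GbE port at line rate


def link_bandwidth_gbps(lanes: int = LANES) -> float:
    """Approximate usable one-way bandwidth of a gen 3.0 link in Gbps."""
    return GEN3_GTS_PER_LANE * GEN3_ENCODING * lanes


def full_rate_ports(ports: int, port_gbps: float = PORT_GBPS) -> int:
    """How many of `ports` can run at full line rate at the same time."""
    return min(ports, int(link_bandwidth_gbps() // port_gbps))


if __name__ == "__main__":
    print(f"x16 gen 3.0 link: {link_bandwidth_gbps():.1f} Gbps per direction")
    print(f"Dual-port demand: {2 * PORT_GBPS:.0f} Gbps")
    print(f"Ports at full 100GbE line rate: {full_rate_ports(2)}")
```

With roughly 126Gbps available per direction, only one of the two 100GbE ports fits at full rate.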

The above assumes that the NIC is plugged into a "PCI-Express gen 3.0" slot.

If there is a standard called "gen 3.0", you would expect "gen 1.0" and "gen 2.0" to exist as well.

And indeed, gen 1.0 and gen 2.0 do exist.

*Strictly speaking, it is gen 1.1, not gen 1.0.

So what's the difference between these standards?

First of all, their data transfer bandwidth differs (Table 1).

ConnectX-4 can actually be used not only with PCI-Express gen 3.0 but also with gen 1.0 and gen 2.0.

However, as Table 1 shows, gen 1.0 and gen 2.0 cannot deliver 100Gbps even with 16 lanes...
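Since Table 1 itself is not reproduced here, the sketch below recomputes a comparable table from the standard per-lane transfer rates and line encodings (gen 1.x and gen 2.0 use 8b/10b, gen 3.0 uses 128b/130b). These values come from the PCI-Express specifications rather than from Table 1, and protocol overhead beyond line encoding is ignored:

```python
# Minimal sketch reconstructing the comparison behind Table 1 from the
# standard PCIe transfer rates (the original Table 1 values are not
# reproduced here). Protocol overhead beyond line encoding is ignored.

from dataclasses import dataclass


@dataclass(frozen=True)
class PcieGen:
    name: str
    gts_per_lane: float  # raw transfer rate per lane, per direction (GT/s)
    encoding: float      # line-encoding efficiency

    def x16_gbps(self) -> float:
        """Approximate usable one-way bandwidth of an x16 link in Gbps."""
        return self.gts_per_lane * self.encoding * 16


GENERATIONS = [
    PcieGen("gen 1.0/1.1", 2.5, 8 / 10),  # 8b/10b encoding
    PcieGen("gen 2.0", 5.0, 8 / 10),      # 8b/10b encoding
    PcieGen("gen 3.0", 8.0, 128 / 130),   # 128b/130b encoding
]

TARGET_GBPS = 100.0  # one 100GbE port at line rate

if __name__ == "__main__":
    for gen in GENERATIONS:
        ok = "yes" if gen.x16_gbps() >= TARGET_GBPS else "no"
        print(f"{gen.name}: x16 = {gen.x16_gbps():6.1f} Gbps, 100GbE possible: {ok}")
```

Running it prints about 32, 64 and 126 Gbps for gen 1.x, gen 2.0 and gen 3.0 respectively, so only gen 3.0 clears 100Gbps.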

So pay attention to the PCI-Express generation of your slot when building a network environment!

*PCI-Express gen 4.0 is also coming soon!!

Deploying NICs

Now that our questions about the ConnectX-4 specification are resolved, let's install the NIC in our own server (Fig. 4)!

Plug the NIC into the server as shown in Fig. 4 and check whether the server recognizes it.

Running the check command "lspci | grep Mellanox" gives the following:

【Execution result】

[root@localhost ~]# lspci | grep Mellanox

02:00.0 Infiniband controller: Mellanox Technologies MT27700 Family [ConnectX-4]

02:00.1 Infiniband controller: Mellanox Technologies MT27700 Family [ConnectX-4]

Huh?

An InfiniBand controller?
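To check this on more than one machine, the device class that lspci prints can be extracted with a short script. This is a minimal sketch: the helper `mellanox_devices` is hypothetical, and it parses saved lspci output in the default "slot class: vendor device" format rather than calling lspci itself. The sample input is the execution result shown above:

```python
# Minimal sketch: pick out Mellanox devices from `lspci` output and report
# the device class lspci assigns to each function. `mellanox_devices` is a
# hypothetical helper; it parses text, so it also works on saved output.

import re

# Default lspci line format: "<slot> <class>: <vendor and device name>"
LSPCI_LINE = re.compile(r"^(?P<slot>\S+)\s+(?P<cls>[^:]+):\s+(?P<desc>.+)$")


def mellanox_devices(lspci_output: str) -> list[tuple[str, str]]:
    """Return (slot, device class) for every Mellanox line in lspci output."""
    devices = []
    for line in lspci_output.splitlines():
        m = LSPCI_LINE.match(line.strip())
        if m and "Mellanox" in m.group("desc"):
            devices.append((m.group("slot"), m.group("cls")))
    return devices


# Execution result from above, copied verbatim.
SAMPLE = """\
02:00.0 Infiniband controller: Mellanox Technologies MT27700 Family [ConnectX-4]
02:00.1 Infiniband controller: Mellanox Technologies MT27700 Family [ConnectX-4]
"""

if __name__ == "__main__":
    for slot, cls in mellanox_devices(SAMPLE):
        print(slot, cls)
```

On this output it reports both functions (02:00.0 and 02:00.1) as "Infiniband controller" rather than "Ethernet controller".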

~To be continued~

In Closing

This time, I wrote about Mellanox's NICs.

As you may have guessed, the 100GbE environment I'm aiming for isn't finished yet.

On top of that, the NIC is recognized as an InfiniBand controller rather than an Ethernet controller...

Next time, I'll keep working on building the 100GbE environment.

Thank you for reading, and stay tuned!

Finally, I would like to introduce “Mellanox”, the vendor I am in charge of.

Profile of Mellanox

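◇Headquarters: Yokneam (Israel), Sunnyvale (USA)

◇A leading company in high-bandwidth, low-latency interconnects

・EDR 100 Gbps InfiniBand / 100 Gigabit Ethernet

・Significantly reduces application data-processing time

・Dramatically improves the ROI of data center service infrastructure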