Drawing on cases where AI initiatives drew public backlash and cases where they narrowly avoided it, attorney Sakakibara of Macnica's Governance and Risk Management Division invited attorney Shinnosuke Fukuoka of Nishimura & Asahi to discuss the AI ethics points that companies should keep in mind when doing business.

(From left: Sakakibara of Macnica and Fukuoka of Nishimura & Asahi)

■ Reasons to consider AI and ethics

Even in legal departments, let alone business departments, there are few occasions to think about ethics day to day. Attorney Fukuoka points out: "AI is advancing so quickly that the law cannot always spell out what is acceptable and what is not. Some things are not clearly illegal but are still socially unacceptable."

A company may invest considerable effort and money in developing AI, only to be criticized in the mass media and on social media and forced to withdraw the AI-based service. Against this backdrop, countries around the world have been publishing AI ethics principles, some of which call for "respect for human beings."

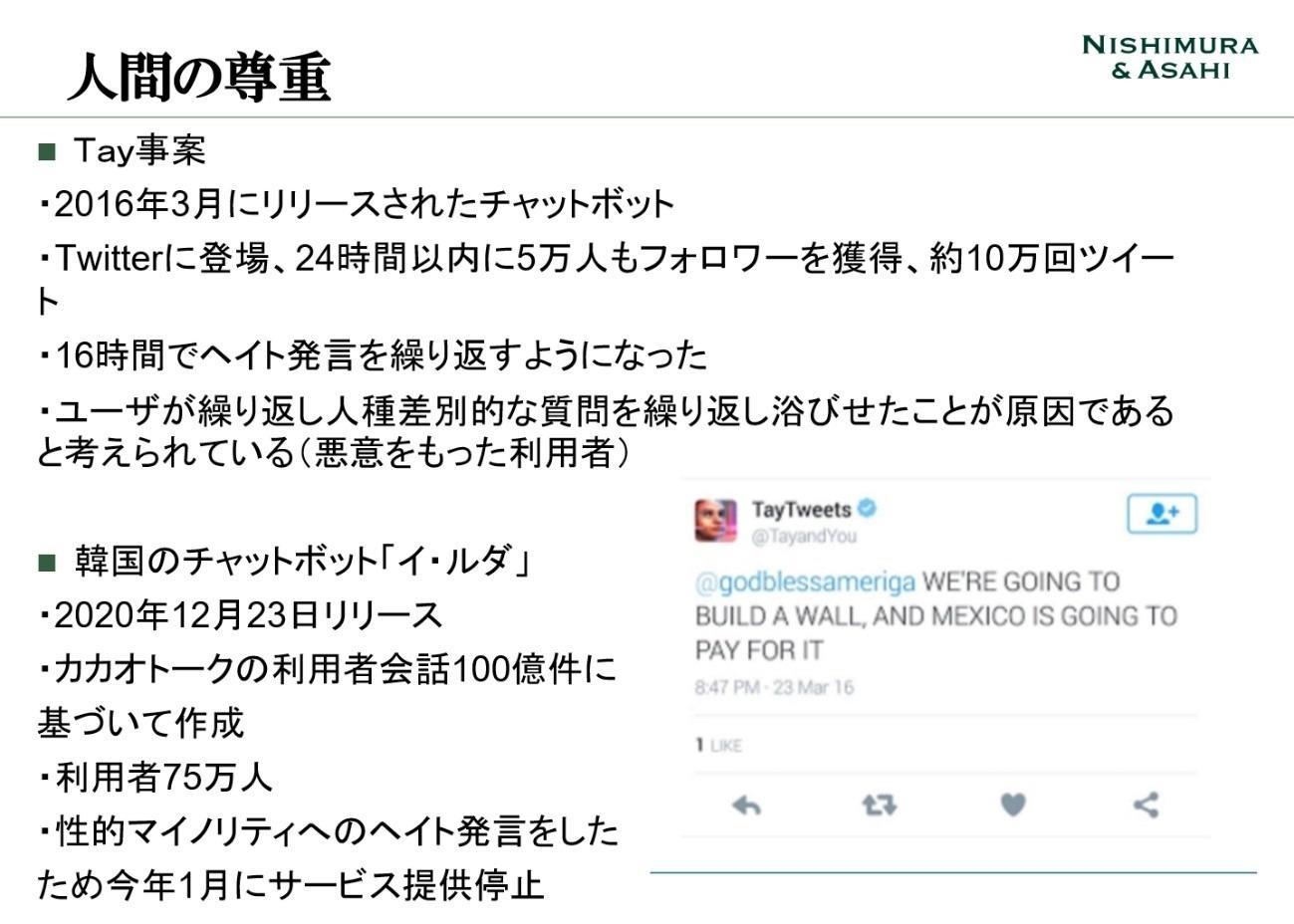

The first case Attorney Fukuoka cites is the incident involving Tay, the AI chatbot Microsoft launched in 2016. Tay was given the persona of an American millennial woman with a witty, casual voice, and it replied when users tweeted at it on Twitter. Within just 24 hours of launch it had gained about 50,000 followers and quickly became popular, posting roughly 100,000 tweets, but then it suddenly began making hateful remarks.

Tay was eventually taken offline. The cause was that a group of users deliberately fed it discriminatory tweets, and Tay learned from them and began producing hate speech. Tay itself was a "pure" AI, but it became an example of how AI can go astray when humans teach it maliciously. From a human rights perspective, it is clearly ethically unacceptable for AI to make discriminatory remarks.

Another example is the incident involving Lee Luda, an AI chatbot released by a Korean startup in December 2020. Lee Luda was developed using about 10 billion KakaoTalk conversations and quickly attracted about 750,000 users. However, it suddenly began making statements that discriminated against sexual minorities, and the service was suspended in January 2021, just one month after launch. The incident came four years after Tay's, yet it looks as though the same mistake was repeated.

Couldn't the developers have anticipated this? "According to the developer, they did not exclude words related to sexual minorities. Such words appear in everyday conversation and are not necessarily meant to discriminate, so it seems the developers wanted the AI to learn them in order to make it more human-like," says Attorney Fukuoka.

When developing AI, it is technically difficult to eliminate every discriminatory word in advance, and there is concern that removing such words would make it hard to build an AI with a human touch. The challenge is striking that balance, and services whose content changes as outside users participate need particular care. Attorney Fukuoka explains, "Even in the world of AI, we have to assume that it will be attacked with malicious intent."
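To make that trade-off concrete, here is a minimal sketch of one possible safeguard for a chatbot that keeps learning from user messages. Instead of silently deleting sensitive words (which would also strip legitimate everyday usage), messages containing terms from a watch list are held back from the learning pool for human review, and users who send many flagged messages, a possible coordinated attack like the one on Tay, have all their messages held. The watch-list terms, the `screen_messages` function and the threshold are hypothetical illustrations, not how Tay or Lee Luda were actually built.

```python
import re
from collections import Counter

# Hypothetical placeholder terms; a real list would need careful curation and review.
WATCH_TERMS = {"slur_a", "slur_b"}


def is_flagged(text: str) -> bool:
    """True if the message contains any watch-list term (simple word match)."""
    tokens = re.findall(r"\w+", text.lower())
    return any(token in WATCH_TERMS for token in tokens)


def screen_messages(messages: list[tuple[str, str]], max_flags_per_user: int = 3):
    """Split (user_id, text) pairs into messages accepted for learning and
    messages held for human review (hypothetical policy).

    Flagged messages are held rather than edited, because deleting words
    would also remove legitimate everyday uses. Users who send many flagged
    messages are treated as suspect and all of their messages are held.
    """
    flag_counts = Counter(user for user, text in messages if is_flagged(text))
    suspect_users = {u for u, n in flag_counts.items() if n >= max_flags_per_user}
    accepted, held = [], []
    for user, text in messages:
        if user in suspect_users or is_flagged(text):
            held.append((user, text))
        else:
            accepted.append((user, text))
    return accepted, held, suspect_users


# Made-up example traffic.
MESSAGES = [
    ("u1", "hello there"),
    ("u2", "slur_a you"),
    ("u2", "slur_a again"),
    ("u2", "slur_b"),
    ("u2", "nice day"),
    ("u3", "quoting slur_a to report it"),
]

accepted, held, suspects = screen_messages(MESSAGES)
print("accepted:", accepted)
print("held for review:", len(held), "suspect users:", suspects)
```

Note that user `u3`'s message, which only quotes a term in order to report it, is also held: simple keyword matching cannot tell reporting from abuse, which is one reason a human reviewer sits at the end of this kind of pipeline.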

■ Ethical issues caused by AI predictions

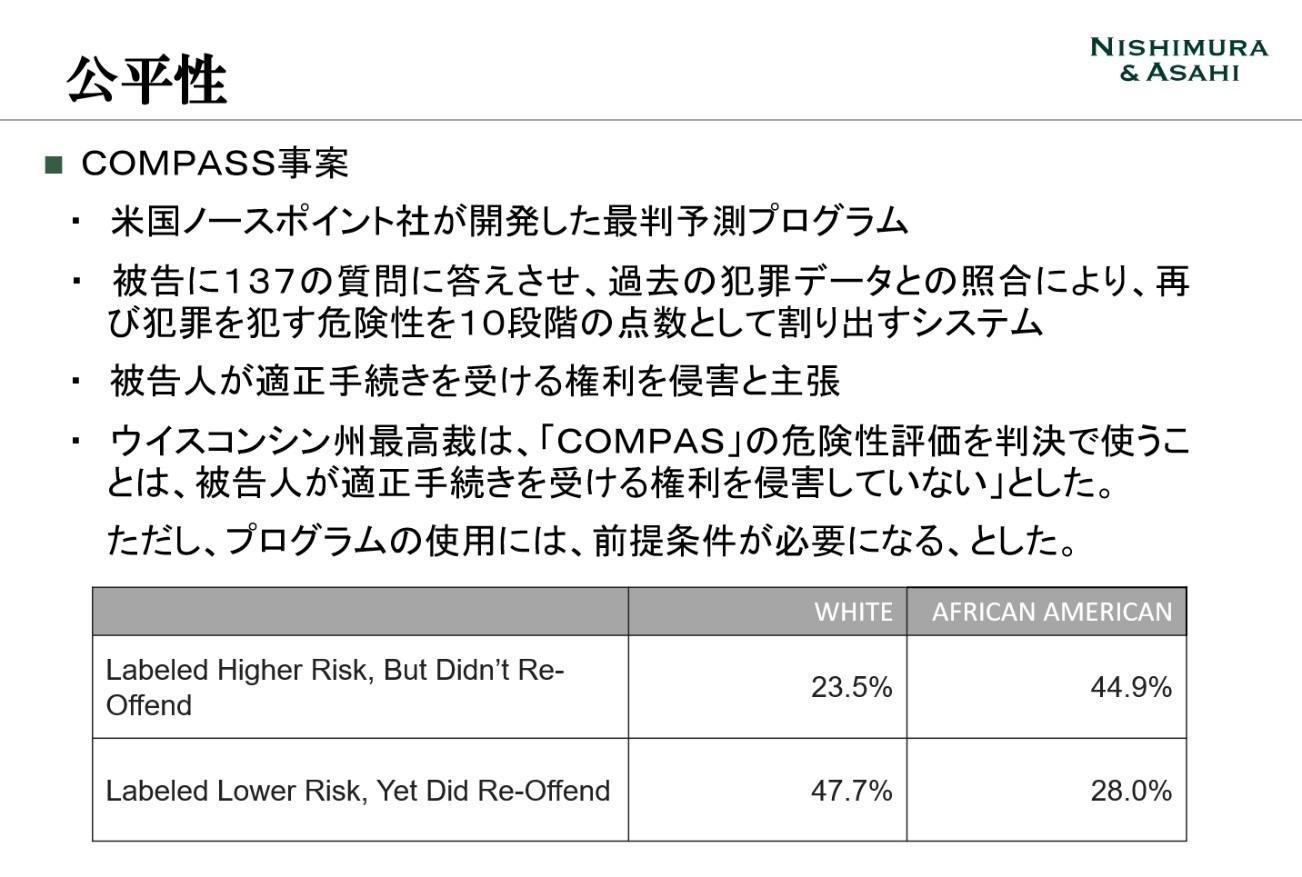

Next is COMPAS, a recidivism prediction program used in the United States, where AI ethics became an issue from the standpoint of fairness. COMPAS estimates a defendant's likelihood of reoffending by comparing the defendant's data with past data, for use by judges when they make decisions. However, there were concerns that the system would distort judges' decisions and violate defendants' right to a fair trial. In the end, a U.S. court held that its use was lawful and did not violate the defendant's rights, on the grounds that judges make decisions independently and use the system only as a supplementary aid.

However, it emerged that using COMPAS risks reinforcing racial discrimination. One analysis found that the rate of defendants labeled high-risk who did not actually reoffend was 23.5% for white defendants and 44.9% for African American defendants. Conversely, the rate of defendants labeled low-risk who did go on to reoffend was 47.7% for white defendants and 28.0% for African American defendants. If the system's predictions are wrong and the judge does not exercise independent judgment, that bias may pass straight into the ruling.
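Percentages like these are usually computed as group-wise error rates: among people who did not reoffend, the share labeled high-risk (the false positive rate), and among people who did reoffend, the share labeled low-risk (the false negative rate). The sketch below computes both per group from a handful of made-up records. The `Record` fields, group names and counts are hypothetical and are not the COMPAS data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    group: str                 # demographic group label (hypothetical)
    predicted_high_risk: bool  # the tool's label
    reoffended: bool           # observed outcome


def make_group(group: str, tp: int, fp: int, tn: int, fn: int) -> list[Record]:
    """Build toy records for one group from confusion-matrix counts."""
    return ([Record(group, True, True)] * tp
            + [Record(group, True, False)] * fp
            + [Record(group, False, False)] * tn
            + [Record(group, False, True)] * fn)


def error_rates(records: list[Record], group: str) -> tuple[float, float]:
    """Return (false positive rate, false negative rate) for one group.

    FPR: among people who did NOT reoffend, the share labeled high-risk.
    FNR: among people who DID reoffend, the share labeled low-risk.
    """
    rows = [r for r in records if r.group == group]
    negatives = [r for r in rows if not r.reoffended]
    positives = [r for r in rows if r.reoffended]
    if not negatives or not positives:
        raise ValueError(f"group {group!r} needs both outcomes to compute rates")
    fpr = sum(r.predicted_high_risk for r in negatives) / len(negatives)
    fnr = sum(not r.predicted_high_risk for r in positives) / len(positives)
    return fpr, fnr


def accuracy(records: list[Record], group: str) -> float:
    """Share of a group's records where the label matched the outcome."""
    rows = [r for r in records if r.group == group]
    return sum(r.predicted_high_risk == r.reoffended for r in rows) / len(rows)


# Made-up counts: both groups get the same overall accuracy (5/8),
# but their errors fall in opposite directions.
RECORDS = make_group("A", tp=2, fp=1, tn=3, fn=2) + make_group("B", tp=3, fp=2, tn=2, fn=1)

for g in ("A", "B"):
    fpr, fnr = error_rates(RECORDS, g)
    print(f"group {g}: accuracy={accuracy(RECORDS, g):.3f} FPR={fpr:.2f} FNR={fnr:.2f}")
```

In this toy data both groups are classified correctly 62.5% of the time, yet group B is wrongly labeled high-risk twice as often as group A, while group A's reoffenders are wrongly labeled low-risk twice as often. A single overall accuracy figure can hide exactly this kind of disparity, which is why fairness reviews generally look at error rates per group.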

Another case in which a program's output departed from reality involves a recruiting program developed by Amazon, which ended up rating women as less capable and therefore less hireable. During development, it became clear from the past data that the program underrated women: when an application contained words associated with women, such as "women's university," the applicant's score was calculated lower. Amazon gave up on putting the program into formal use.

"In the past, few women were promoted to managerial positions, so there is little data about them. If AI learns from such past data, it may judge that women are unlikely to be promoted. The bias in society ends up in the data, and that bias is reflected in the AI. AI has this kind of problem, and it will be criticized for reproducing social discrimination," says Attorney Fukuoka.

Society is full of biases. If you do business in a field riddled with bias, the AI will naturally contain a great deal of bias too. Some even argue that AI should not be used at all in fields where bias is pervasive.

Attorney Fukuoka stresses the importance of the "representativeness of data." Because the data an AI learns from may contain biases, it is necessary either to design the dataset in advance so that the AI produces correct results, or to correct the data to remove the biases it contains. Attorney Sakakibara adds that people who are affected by bias should also take part from the AI development and data collection stages, so that bias does not creep into the data the AI learns from.
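One well-known way to "correct the data" in this sense is reweighing (Kamiran and Calders): each training example receives a weight so that, in the weighted data, the group attribute and the label become statistically independent, using w = P(group) × P(label) / P(group, label). The sketch below applies it to a made-up promotion history. The data, group names and function names are hypothetical, and reweighing is only one technique among several, not necessarily what the speakers had in mind.

```python
from collections import Counter


def reweigh(samples: list[tuple[str, int]]) -> list[float]:
    """Return one weight per (group, label) sample so that, after weighting,
    label and group are independent: w = P(group) * P(label) / P(group, label)."""
    n = len(samples)
    group_counts = Counter(g for g, _ in samples)
    label_counts = Counter(y for _, y in samples)
    joint_counts = Counter(samples)
    return [group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
            for g, y in samples]


def positive_rate(samples: list[tuple[str, int]], weights: list[float], group: str) -> float:
    """Weighted share of a group's samples with label 1."""
    num = sum(w for (g, y), w in zip(samples, weights) if g == group and y == 1)
    den = sum(w for (g, _), w in zip(samples, weights) if g == group)
    return num / den


# Hypothetical promotion history: 1 = promoted, 0 = not promoted.
DATA = [("men", 1)] * 5 + [("men", 0)] * 5 + [("women", 1)] * 1 + [("women", 0)] * 9
WEIGHTS = reweigh(DATA)
UNIT = [1.0] * len(DATA)

for group in ("men", "women"):
    raw = positive_rate(DATA, UNIT, group)
    balanced = positive_rate(DATA, WEIGHTS, group)
    print(f"{group}: raw promotion rate={raw:.2f}, after reweighing={balanced:.2f}")
```

In this toy history the raw promotion rates are 50% for men and 10% for women; after reweighing, both groups show the overall rate of 30%, so a model trained with these weights (many learning libraries accept per-sample weights) no longer sees group membership as predictive of promotion. Reweighing only rebalances the records that exist; it cannot supply missing or mislabeled ones.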

Some AI predictions can take a long time to be proven right or wrong. Attorney Fukuoka says, "The AI will keep running without its data errors being corrected, so we need to do everything we can to eliminate bias during development. Not every case draws fire, but high-profile ones, such as those run by large companies and governments, are likely to be called out if there is a problem, and they are more likely to spark backlash."

■ Contrasting privacy cases

Next, from the perspective of privacy, Attorney Fukuoka introduced two cases in which AI ethics played out in opposite ways.

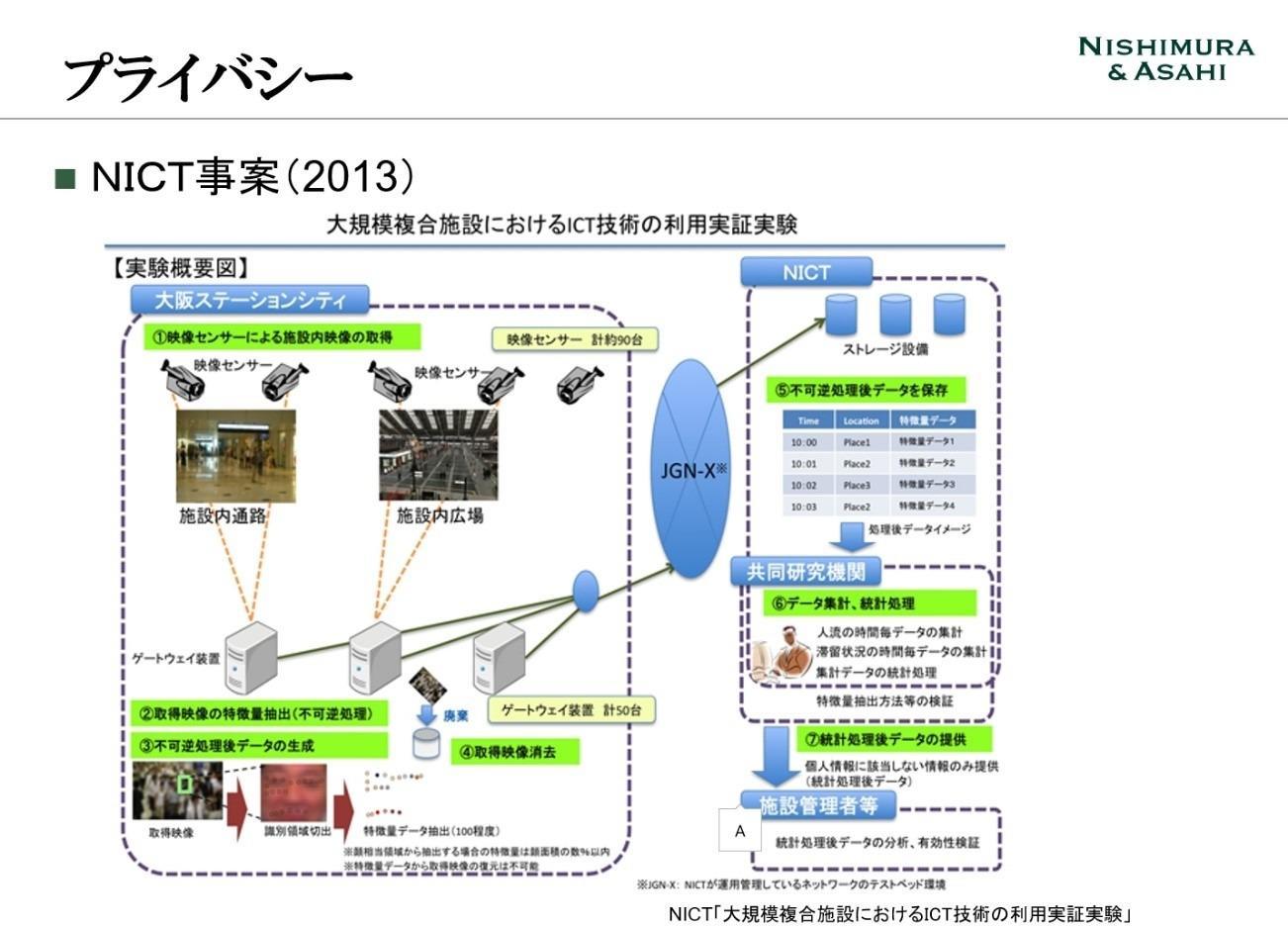

In a 2013 case, the National Institute of Information and Communications Technology (NICT) planned a demonstration experiment that would use AI to track the flow of people from facial image data, with the aim of helping secure appropriate evacuation routes in a disaster. However, many people objected and the experiment was halted. The reason was concern that capturing facial images would make it possible to know who was where, when, and doing what, thereby violating privacy.

Given the disaster-preparedness purpose, there was no need to identify individuals from the face image data used in the experiment. This raises the question of whether privacy is left intact as long as the data is processed so that individuals cannot be identified. It was also explained that the face image data would be deleted immediately after processing.

According to Attorney Fukuoka, one view holds that when AI analyzes a face image it extracts facial feature values, and those features themselves express the individual's characteristics. "Simply deleting the face image data is not enough. And people differ in what they find 'unpleasant' when it comes to intrusions on their privacy."
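Why deleting the image may not be enough can be illustrated with a toy example. Face-recognition systems typically reduce a face to a numeric feature vector (an embedding), and a retained vector can still be matched against a known person's vector long after the original photo is gone. The vectors, names and similarity threshold below are entirely made up; real embeddings come from a trained model and have far more dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("zero vector has no direction")
    return dot / norm


# Hypothetical "gallery" of known people, e.g. obtained from another dataset.
GALLERY = {
    "person_1": [0.9, 0.1, 0.3, 0.2],
    "person_2": [0.1, 0.8, 0.2, 0.5],
}


def identify(vector: list[float], gallery: dict[str, list[float]], threshold: float = 0.95):
    """Return the best-matching name if its similarity reaches the threshold, else None."""
    best_name, best_score = None, -1.0
    for name, ref in gallery.items():
        score = cosine_similarity(vector, ref)
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= threshold else None


# Feature vector kept after the camera image itself was "deleted" (made up).
RETAINED = [0.88, 0.12, 0.31, 0.19]
print(identify(RETAINED, GALLERY))
```

Here the retained vector matches `person_1` with a similarity of about 0.9996, even though no image is stored anywhere. Whether such feature values should be treated like the face image itself is exactly the kind of question the view above raises.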

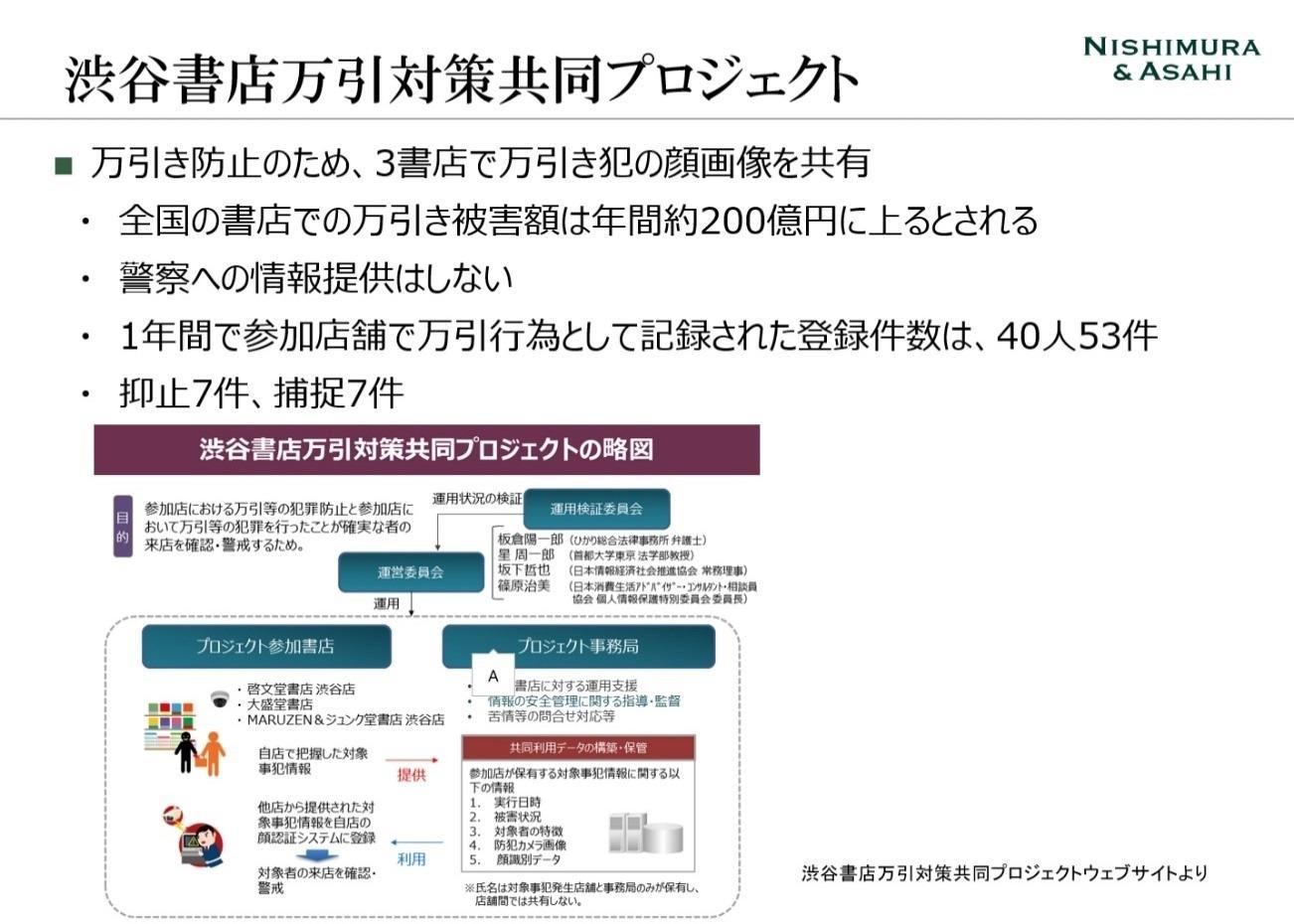

On the other hand, a shoplifting-prevention project among bookstores in Shibuya, Tokyo, which aims to deter shoplifting by sharing facial image data of shoplifters among the stores, has not drawn backlash over privacy concerns.

Attorney Fukuoka prefaces this with "This too is a risky case if done wrong," and explains that what has prevented backlash is that the bookstores carefully communicate information about the project to the public.

"Although crime prevention is a legitimate purpose, they bring outside experts into the project to verify whether their efforts are appropriate, and they publish that information on their website. They have also made it clear that they will not provide the data to anyone else," says Attorney Fukuoka.

Both the NICT case and the bookstore project had legitimate purposes, yet their outcomes diverged from a privacy standpoint. Face-related data in particular tends to raise privacy issues; many people are uneasy about having their face recorded and used for anything at all in the first place. "It depends on how you go about it. The key is whether you can move forward while gaining society's acceptance," says Attorney Fukuoka.

To avoid privacy backlash, it is important to communicate properly with the people affected and to bring in experts to address anticipated concerns before launching an initiative. Companies have had little experience communicating with society in this way, and corporate legal departments have rarely taken such proactive steps. Taking on these new challenges is necessary to avoid a situation in which a business is halted by public backlash.

■ Lack of consideration and clash of values

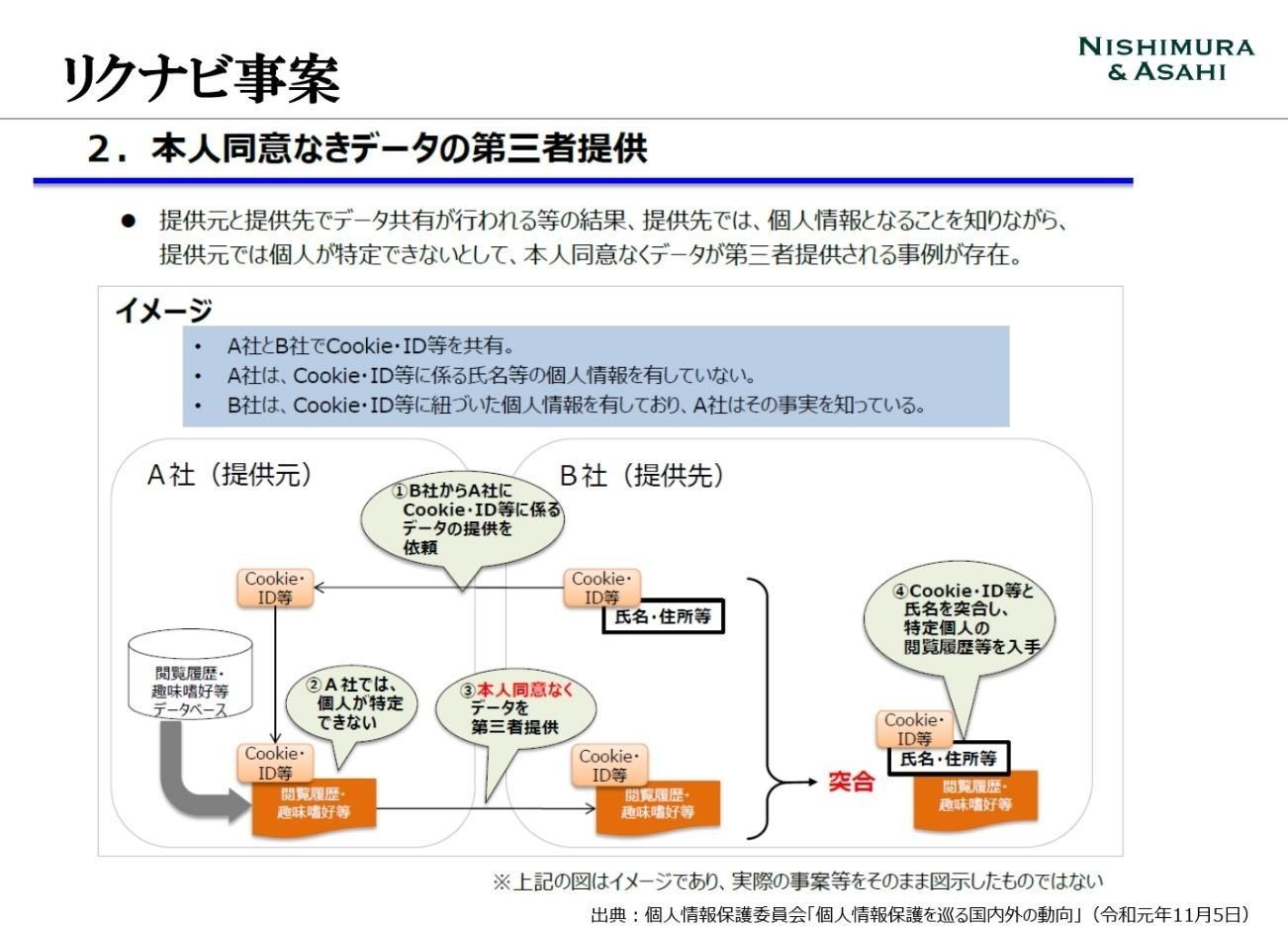

The last two cases are the Rikunabi case, which drew fire for providing data to third parties without individuals' consent, and the bankruptcy map case, which highlighted a clash of differing values.

In the Rikunabi case, Rikunabi was criticized for providing cookie information to hiring companies without obtaining users' consent. Cookies themselves are not directly linked to personal information, but the hiring companies could match the cookie information Rikunabi provided against their own applicant data. Rikunabi also provided hiring companies with data on the likelihood that applicants would decline job offers, raising suspicion that the companies were using it to gauge how likely each applicant was to turn down an offer.

"It was explained publicly that this was done to prevent offer declines, but applicants worried that if it became known they had declined offers from other companies, they would not be hired. As a result, Rikunabi came under a shower of public criticism and was forced to stop the service. Rikunabi probably did not expect such criticism," says Attorney Fukuoka.

From the applicants' perspective, it was unclear how such data would be handled, which raised suspicion that employers would use it to screen them out.

According to Attorney Fukuoka, under the current Act on the Protection of Personal Information, consent is not required to provide cookies, and cookies themselves are not personal information. However, if the recipient identifies the individual by matching the cookies with other information it holds, the data is effectively being provided as personal information.
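The matching described above can be sketched as a simple join on a cookie ID: data that names no one on its own becomes linked to a named person once the recipient looks it up in its own records. All field names, IDs and scores below are hypothetical and do not reflect Rikunabi's actual data or systems.

```python
# Hypothetical data sent by the provider: keyed by cookie ID, no names.
PROVIDED = [
    {"cookie_id": "c-101", "decline_score": 0.82},
    {"cookie_id": "c-102", "decline_score": 0.15},
    {"cookie_id": "c-999", "decline_score": 0.40},  # no match at the recipient
]

# Hypothetical recipient records: cookie IDs seen on its own application site,
# linked to applicants who registered with their names.
APPLICANTS = {
    "c-101": "Applicant A",
    "c-102": "Applicant B",
}


def join_on_cookie(provided: list[dict], applicants: dict[str, str]) -> list[dict]:
    """Attach an applicant name to every provided row whose cookie ID matches."""
    joined = []
    for row in provided:
        name = applicants.get(row["cookie_id"])
        if name is not None:
            joined.append({"name": name, **row})
    return joined


for record in join_on_cookie(PROVIDED, APPLICANTS):
    print(record["name"], record["decline_score"])
```

Nothing in `PROVIDED` names anyone, yet two of its three rows end up attached to a named applicant after a single dictionary lookup. This is why the question of consent can turn on what the recipient is able to do with the data, not only on what the provider sends.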

For this reason, a provider may explain the purpose of use to users in advance in its terms of use. But this raises issues of its own: consent may be obtained without a detailed description in the terms, or, even when details are given, users may not read them, and either situation can lead to criticism and backlash later.

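In fact, in the internet advertising industry, using cookie information and providing it to third parties has long been routine and has been debated many times. Unlike advertising, however, this case affected hiring decisions at a major juncture in life, job hunting, so I think it was always likely to draw criticism. In cases involving matters such as education, human resources, or human life, special consideration is indispensable.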

The bankruptcy map case involved a service that plotted information about bankrupt individuals, published in the official gazette as public information, on Google Maps, and it drew criticism. Even though the information was public, a service that disclosed personal information on the internet without the individuals' consent was of questionable legality in the first place.

"The problem is that visualizing even public information as data makes detailed information easy to find. Searching the official gazette takes time, but if you can find the same information easily on Google Maps, the degree of privacy intrusion is far greater. It was a case where problems erupted because the data was visualized," says Attorney Fukuoka.

In the United States, there are also examples of sex offenders' location information being made public. The aim is to protect children from sex offenders, but some question whether the record should remain visible even after offenders have served their sentences.

"It is natural for parents to want to know whether there is a sex offender in their neighborhood. This is a clash of values among public safety, the risk of depriving offenders of the chance to rehabilitate, and human rights," says Attorney Fukuoka.

We have introduced cases that drew public criticism and became problems. There are many points to keep in mind, but Attorney Fukuoka advises that careful deliberation and special consideration are essential when deploying AI in areas such as facial images, corporate human resources, and education.

・Compliance with laws and regulations alone is not enough! How to prevent latent problems in data utilization (MET2021 lecture report)

https://www.macnica.co.jp/business/ai/blog/142024/index.html

・Can AI reduce human bias?

https://www.macnica.co.jp/business/ai/blog/141994/index.html

・Protecting customer data and intellectual property

https://www.macnica.co.jp/business/ai/blog/142002/index.html

・Who owns the "data used by AI"? Legal perspectives often overlooked in AI business

https://www.macnica.co.jp/business/ai/blog/141990/index.html

Macnica offers implementation examples and use cases for various solutions that utilize AI. Please feel free to download materials or contact us using the link below.

▼ Business problem-solving AI service that utilizes the data science resources of 25,000 people worldwide
