Who this article is for

Those researching the latest AI trends

Time needed to finish reading this article

3 minutes

Introduction

Hello! I'm BB from Macnica AI Research Center.

Despite the line of work I'm in, I still find myself happy or sad over the horoscope every morning.

Someday, AI may seriously predict people's condition and behavior.

At that point it would be analysis rather than fortune-telling, but could people still live like humans, without becoming complacent or arrogant, once they are promised good days and bad days?

This is Part 2 of our report on NVIDIA GTC 2019, a must-attend conference for anyone tracking AI trends!

This time, we cover the sessions and case studies everyone has been waiting for! For various reasons, the images are small.

Digital twins and GANs

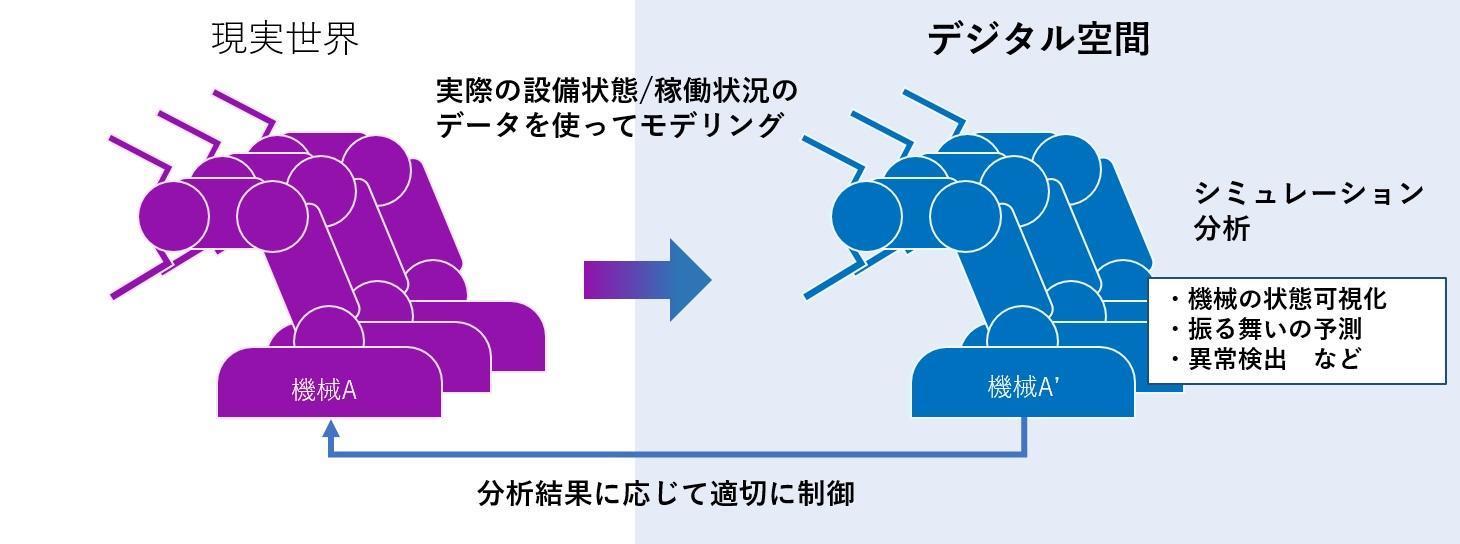

Are you familiar with the term digital twin?

Roughly speaking, a digital twin is a virtual model built in digital space from data on a physical machine and its processes, so that it behaves the same way as the real thing.

With the advent of IoT, detailed information about physical things can now be collected in real time, so digital twins can also be modeled from real data in real time. In other words, model simulations are becoming more accurate, and the approach is attracting attention.

One session introduced efforts to combine GANs with the construction of digital twins.

The idea is to treat a GAN generator trained on equipment and process data as a digital twin, and to use the data it generates for supervised learning tasks such as anomaly detection.

I think this was a groundbreaking example of feeding simulation results into other modeling and analysis.

It also seems that the discriminator can be used as an anomaly detector.
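As a rough illustration of that idea: a trained discriminator outputs how "real" (that is, how much like the normal training data) a sample looks, so one minus that value can serve as an anomaly score. In the sketch below, `discriminator` is a hypothetical stand-in (a fixed logistic function of distance from an assumed normal operating point), not the model from the session, and the 0.5 threshold is an assumption.

```python
import math

# Hypothetical stand-in for a trained GAN discriminator: returns the
# probability (0..1) that a sample looks like normal training data.
# Here it is just a logistic function of the distance from an assumed
# normal operating point, purely for illustration.
NORMAL_POINT = (50.0, 1.2)  # e.g. (temperature, vibration); assumed values

def discriminator(sample):
    z = min(math.dist(sample, NORMAL_POINT) - 5.0, 700.0)  # clamp to avoid overflow
    return 1.0 / (1.0 + math.exp(z))

def anomaly_score(sample):
    # Low "realness" from the discriminator means a high anomaly score.
    return 1.0 - discriminator(sample)

def is_anomaly(sample, threshold=0.5):
    return anomaly_score(sample) > threshold

print(is_anomaly((50.5, 1.1)))  # False: close to the normal point
print(is_anomaly((80.0, 3.0)))  # True: far from the normal point
```

In practice the score threshold would be tuned on validation data rather than fixed at 0.5.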

Because the GAN used here models the noise distribution in advance, the generator still produces data with the properties of the intended label even when it is trained on a coarse dataset in which some labels contain unintended noise.
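The report does not name the exact method. One known way to "model the noise in advance" is a label-noise transition matrix, as used in label-noise robust conditional GANs: the generator is conditioned on a clean label, and the label attached to each generated sample is passed through an assumed matrix `T` (where `T[i][j]` is the probability that true class `i` is recorded as class `j`) before the discriminator sees it. The discriminator then compares noisy labels with noisy labels, while the generator stays tied to the clean ones. The matrix values and function names below are hypothetical.

```python
import random

# Assumed noise model: T[i][j] = P(observed label j | true label i).
# Example values only: 10% of class-0 items are mislabeled as class 1, etc.
T = [
    [0.9, 0.1],
    [0.2, 0.8],
]

def noisy_label_distribution(clean_probs, transition):
    """P(observed = j) = sum_i P(true = i) * T[i][j]."""
    n = len(transition[0])
    return [sum(clean_probs[i] * transition[i][j] for i in range(len(clean_probs)))
            for j in range(n)]

def corrupt_label(true_label, transition, rng=random):
    """Sample the observed label for a generated sample of class true_label.

    In a noise-aware conditional GAN, labels attached to generated samples are
    corrupted like this before reaching the discriminator, so the generator
    itself can remain conditioned on the clean label.
    """
    row = transition[true_label]
    return rng.choices(range(len(row)), weights=row)[0]

# Generator conditioned on clean class 0: label distribution the discriminator sees.
print(noisy_label_distribution([1.0, 0.0], T))  # [0.9, 0.1]
```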

Deep learning for architectural design

The combination of BIM (Building Information Modeling) and deep learning is also attracting attention.

BIM is something like process data for architectural design: it attaches supplementary information such as room layout, materials, dimensions, material quantities, construction information, and cost to a 3D model of a building.
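To make "a 3D model plus supplementary information" concrete, here is a minimal sketch of how a single BIM element might be represented. The class and field names are hypothetical and much simpler than real BIM schemas such as IFC.

```python
from dataclasses import dataclass, field

@dataclass
class BimElement:
    """Hypothetical, simplified BIM element: geometry plus attached metadata."""
    element_id: str
    category: str          # e.g. "room", "wall", "window"
    vertices: list         # sample 3D points as (x, y, z) tuples, in metres
    material: str = ""
    room_type: str = ""    # only meaningful for rooms
    quantity: float = 0.0  # material quantity, e.g. m^3 of concrete
    cost: float = 0.0      # cost in an assumed currency unit
    properties: dict = field(default_factory=dict)  # any other attributes

    def bounding_box(self):
        """Axis-aligned bounding box: ((min x, y, z), (max x, y, z))."""
        xs, ys, zs = zip(*self.vertices)
        return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))

room = BimElement(
    element_id="R-101",
    category="room",
    vertices=[(0, 0, 0), (5, 0, 0), (5, 4, 0), (0, 4, 3)],
    room_type="office",
    properties={"floor": 1},
)
print(room.bounding_box())  # ((0, 0, 0), (5, 4, 3))
```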

The session introduced the automation of architectural design using deep learning.

A deep learning model is trained to automatically detect incidental information such as floor plans, materials, and room types from BIM. At inference time, the model's detections can be used without a human checking the BIM, and they are used to automatically calculate the layout ratios of the building and the positions of the windows.
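The post does not describe how these ratios are computed. As one hypothetical example, once a model has labeled rooms by type and walls and windows by area, simple post-processing could derive room-type area ratios and a window-to-wall ratio as below. The detection output format and all values are assumptions.

```python
from collections import defaultdict

# Assumed output of a detection model run on BIM data: each item has a
# predicted category/type and an area in square metres (values are made up).
detections = [
    {"category": "room", "type": "office", "area": 40.0},
    {"category": "room", "type": "office", "area": 20.0},
    {"category": "room", "type": "meeting", "area": 30.0},
    {"category": "room", "type": "corridor", "area": 10.0},
    {"category": "wall", "area": 120.0},   # opaque wall area, openings excluded
    {"category": "window", "area": 30.0},
]

def room_type_ratios(items):
    """Share of total floor area taken by each predicted room type."""
    totals = defaultdict(float)
    for d in items:
        if d["category"] == "room":
            totals[d["type"]] += d["area"]
    floor_area = sum(totals.values())
    return {t: a / floor_area for t, a in totals.items()}

def window_to_wall_ratio(items):
    """Window area divided by gross wall area (opaque wall + window)."""
    window = sum(d["area"] for d in items if d["category"] == "window")
    wall = sum(d["area"] for d in items if d["category"] == "wall")
    return window / (wall + window)

print(room_type_ratios(detections))      # {'office': 0.6, 'meeting': 0.3, 'corridor': 0.1}
print(window_to_wall_ratio(detections))  # 0.2
```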

The BIM geometry, including the calculated ratios, can be compiled into 3D models with existing tools, making it possible to check dynamically whether the design matches the intent.

Accelerating training and inference (pruning)

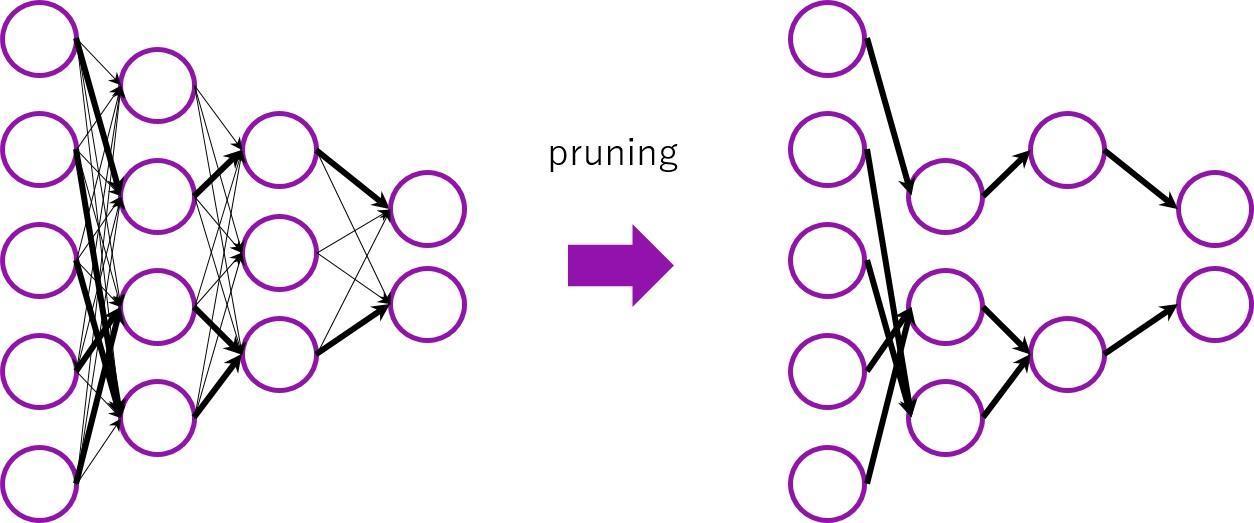

Many sessions covered approaches to speeding up both training and inference while maintaining accuracy, and the term "sparse" neural network came up here and there.

Sparsity means that many of the model's parameters are 0.

In a sparse neural network, unimportant parameters are set to 0 so that the calculations involving them can be skipped.
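A small sketch of why zeros save work: if a weight vector is stored sparsely (only the non-zero entries together with their indices), a dot product touches only those entries. This plain-Python version illustrates the principle only; it is not how GPU libraries implement sparse kernels.

```python
def to_sparse(dense):
    """Keep only non-zero weights as (index, value) pairs."""
    return [(i, w) for i, w in enumerate(dense) if w != 0.0]

def sparse_dot(sparse_weights, x):
    """Dot product that skips every zero weight entirely."""
    return sum(w * x[i] for i, w in sparse_weights)

weights = [0.0, 0.5, 0.0, 0.0, -1.0, 0.0, 0.0, 2.0]
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]

sw = to_sparse(weights)
print(len(sw), "multiplications instead of", len(weights))  # 3 multiplications instead of 8
print(sparse_dot(sw, x))  # 12.0
```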

"Pruning" was frequently introduced as a training technique for removing unimportant parameters.

One method presented sets connection weights whose values fall below a threshold (values close to 0) to 0, reducing the number of parameters to update (there are also node-level variants). Many different ways of pruning seem to have been proposed.
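Here is a minimal sketch of the threshold-based (magnitude) pruning described above: weights whose absolute value is below a threshold are set to 0, and a mask records which connections survive so that pruned weights stay at 0 during further updates. The threshold, the plain SGD update, and the function names are illustrative assumptions; deep learning frameworks ship their own pruning utilities.

```python
def magnitude_prune(weights, threshold):
    """Zero out weights with |w| < threshold; return pruned weights and mask."""
    mask = [1 if abs(w) >= threshold else 0 for w in weights]
    pruned = [w if m else 0.0 for w, m in zip(weights, mask)]
    return pruned, mask

def masked_sgd_step(weights, grads, mask, lr=0.1):
    """Update only surviving connections; pruned ones stay exactly 0."""
    return [w - lr * g if m else 0.0 for w, g, m in zip(weights, grads, mask)]

weights = [0.8, -0.02, 0.3, 0.004, -0.6, 0.01]
pruned, mask = magnitude_prune(weights, threshold=0.05)
print(pruned)  # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
print(f"sparsity: {1 - sum(mask) / len(mask):.0%}")  # sparsity: 50%

grads = [0.1, 0.5, -0.2, 0.3, 0.0, 0.4]
updated = masked_sgd_step(pruned, grads, mask)
```

Keeping the mask separate from the weights is what lets pruned connections stay at zero during fine-tuning instead of drifting back.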

In addition, since deep learning excels at image tasks, the many sessions on visual inspection were also popular.

One session introduced an approach that applies semantic segmentation to the inspection process to locate abnormal regions more precisely, validated with U-Net on DAGM (a dataset of normal and abnormal industrial product images).
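To illustrate how segmentation localizes defects instead of only classifying whole images, here is a sketch that post-processes a per-pixel anomaly probability map (such as a U-Net output) into a binary mask, a bounding box, and an image-level verdict. The map values, the 0.5 pixel threshold, and the minimum-pixel rule are hypothetical choices, not the session's pipeline.

```python
def postprocess(prob_map, pixel_threshold=0.5, min_pixels=2):
    """Turn a per-pixel anomaly probability map into mask, box and verdict."""
    mask = [[1 if p >= pixel_threshold else 0 for p in row] for row in prob_map]
    coords = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if len(coords) < min_pixels:
        return mask, None, False  # too few anomalous pixels: judged normal
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    box = (min(rows), min(cols), max(rows), max(cols))  # (top, left, bottom, right)
    return mask, box, True

prob_map = [
    [0.01, 0.02, 0.03, 0.01],
    [0.02, 0.91, 0.85, 0.04],
    [0.03, 0.77, 0.12, 0.02],
    [0.01, 0.02, 0.03, 0.01],
]
mask, box, abnormal = postprocess(prob_map)
print(box, abnormal)  # (1, 1, 2, 2) True
```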

When accuracy was evaluated while gradually varying the AI's decision threshold, one threshold gave a very good result of Precision 99% and Recall 96%, which attracted attention.
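A threshold sweep like this can be sketched as follows: at each candidate threshold, images whose anomaly score is at or above it are flagged as abnormal, and precision and recall are computed against ground-truth labels. The scores and labels are toy values, not the session's data.

```python
def precision_recall(scores, labels, threshold):
    """labels: 1 = abnormal, 0 = normal. Predict abnormal if score >= threshold."""
    tp = fp = fn = 0
    for s, y in zip(scores, labels):
        pred = s >= threshold
        if pred and y == 1:
            tp += 1
        elif pred and y == 0:
            fp += 1
        elif not pred and y == 1:
            fn += 1
    # Undefined ratios (no predictions / no positives) are reported as 0.0 here.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.40, 0.30, 0.20, 0.10, 0.05]
labels = [1, 1, 1, 0, 1, 0, 1, 0, 0, 0]

for t in (0.25, 0.5, 0.75):
    p, r = precision_recall(scores, labels, t)
    print(f"threshold={t:.2f} precision={p:.2f} recall={r:.2f}")
# threshold=0.25 precision=0.71 recall=1.00
# threshold=0.50 precision=0.80 recall=0.80
# threshold=0.75 precision=1.00 recall=0.60
```

Raising the threshold trades recall for precision, which is why a sweep is needed to find an operating point where both are high.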

This time, we focused on sessions built around case studies!

The final episode will be a bit of a rush.

We will introduce "RAPIDS", a suite of libraries for making full use of GPUs in machine learning and preprocessing, and discuss the relationship between data and modeling such as machine learning and deep learning, so please look forward to it!

You can purchase the Jetson Nano Developer Kit introduced in Part 1 from the link below.

You can also find detailed information on other NVIDIA GPU products there, so please take a look!