To begin with, do you know what CUDA is? CUDA (Compute Unified Device Architecture) is a GPU programming environment developed by NVIDIA. With CUDA, you can write programs in a C-like style and run fast parallel computations across the many arithmetic units of a GPU.

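This parallel computing technology is used in a wide range of applications, including deep-learning-based object detection, which has drawn a lot of attention in the AI field in recent years. In this series, we introduce how parallel processing on a GPU works by walking through CUDA programming examples.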

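Part 1: GPU Computing and how parallel processing works

Part 2: Structure of a CUDA program

Part 3: Visualizing the execution resources of a CUDA program

Part 4: The CUDA execution model

Part 5: CUDA programming with Python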

What is a GPU?

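GPU stands for Graphics Processing Unit, a processor that performs the computations needed to render images such as 3D graphics. In 3D graphics, each object is represented as a combination of flat plates (polygons). For example, an apple can be modeled as a set of triangular polygons. (The finer the polygons, the smoother the resulting 3D model looks.)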

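To produce frame after frame, 3D graphics requires an enormous number of matrix operations to be performed at high speed, for example to translate and rotate the coordinates of the polygons that make up each object. The GPU architecture therefore provides a large number of arithmetic units so that these matrix operations can be executed in parallel.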
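As a rough illustration of the kind of matrix operation described above, the following Python sketch rotates the three vertices of one triangular polygon around the z-axis. The helper names (`rotation_z`, `transform`) and the coordinates are made up for this example, and plain Python runs the transforms one after another. The point is only that each vertex is transformed independently of the others, which is what lets a GPU process many of them at once.

```python
import math


def rotation_z(theta):
    """Return a 3x3 matrix that rotates points by theta radians around the z-axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [
        [c, -s, 0.0],
        [s, c, 0.0],
        [0.0, 0.0, 1.0],
    ]


def transform(matrix, vertex):
    """Multiply a 3x3 matrix by a 3-component vertex."""
    return tuple(sum(m * v for m, v in zip(row, vertex)) for row in matrix)


# One triangular polygon (hypothetical coordinates chosen for illustration).
triangle = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]

rot = rotation_z(math.pi / 2)  # rotate by 90 degrees

# No vertex depends on the result of another vertex, so these
# transforms could all be computed at the same time.
rotated = [transform(rot, v) for v in triangle]

for before, after in zip(triangle, rotated):
    print(before, "->", tuple(round(x, 6) for x in after))
```

A real scene contains far more than three vertices, and the same small matrix multiplication is repeated for every one of them on every frame.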

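Because a GPU is built to execute an enormous number of operations simultaneously, it is drawing attention not only for graphics processing but also, under the name GPU Computing, as an HPC (high-performance computing) technology in AI, scientific computing, and many other fields.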

Applications of GPU Computing

GPU Computing is used in many fields, and one of its applications is AI processing (deep learning).

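Deep learning is an AI technique that uses deep neural networks to have a computer carry out intelligent behavior similar to that of humans. Applications such as the following are being studied.

・Image recognition

・Object detection

・Natural language processing, and more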

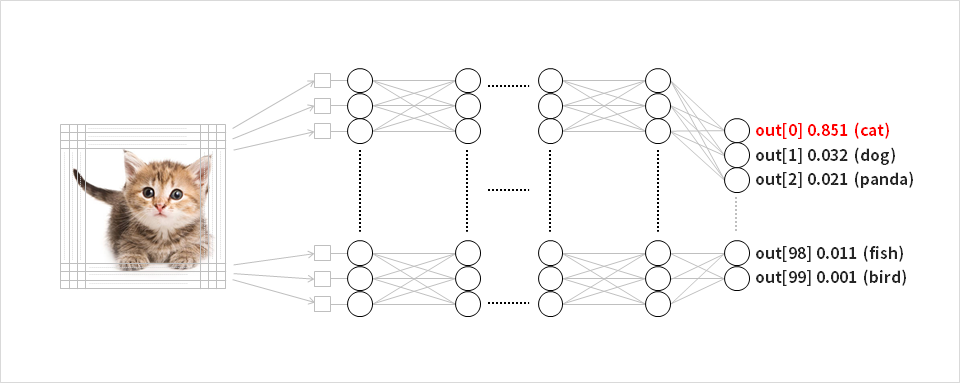

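In image recognition, for example, the input image data is fed into a neural network, and the image is classified based on the output of the network's computation. (See the reference link.)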

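More specifically, in a well-known approach, the pixel data of the input image is fed into the network, matrix operations are performed at each node, and the results are output as probabilities.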

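For example, when the pixel data of a cat image is fed in and out[0] has the highest probability among the output nodes, the image is judged to be "cat", the label assigned to out[0].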
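The final classification step can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the article does not say how the probabilities are produced, so softmax is used here as one common choice. The raw scores and every label other than "cat" at index 0 are hypothetical.

```python
import math


def softmax(scores):
    """Convert raw output scores into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical label table: only "cat" at index 0 comes from the article.
labels = ["cat", "dog", "bird"]

# Hypothetical raw outputs of the last layer for one input image.
raw_scores = [2.5, 0.3, -1.0]

out = softmax(raw_scores)

# Pick the index of the output node with the highest probability.
best = max(range(len(out)), key=lambda i: out[i])

print(f"out[{best}] = {out[best]:.3f} -> {labels[best]}")
```

Here out[0] receives the largest probability, so the input is classified as "cat".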

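In deep learning, increasing the number of network layers is a known way to improve performance, but adding layers also increases the amount of computation at the network's nodes, which has been a challenge. Because a neural network contains a huge number of node-level matrix operations with no dependencies between them, expectations are growing for GPU technology, which can execute operations in parallel on a large number of arithmetic units.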
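The phrase "no dependencies between them" can be made concrete with a small Python sketch. The layer size, weights, biases, and the choice of ReLU as activation are all hypothetical values picked for illustration. The same layer is computed once in a loop and once through a thread pool. Python threads do not bring a GPU-like speedup, so the pool is only there to show that the nodes can be evaluated in any order without changing the result.

```python
from concurrent.futures import ThreadPoolExecutor


def node_output(weights, inputs, bias):
    """Weighted sum for a single node, followed by a ReLU activation."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return max(0.0, total)


# Hypothetical layer: 3 inputs feeding 4 nodes.
inputs = [0.5, -1.0, 2.0]
layer_weights = [
    [0.2, 0.4, 0.1],
    [-0.5, 0.3, 0.8],
    [1.0, 1.0, -1.0],
    [0.0, -0.2, 0.5],
]
layer_biases = [0.1, 0.0, -0.3, 0.2]

# Sequential evaluation, one node after another.
sequential = [
    node_output(w, inputs, b) for w, b in zip(layer_weights, layer_biases)
]

# The same nodes handed to a pool of workers. Each node reads only the
# shared inputs and its own weights, so no node waits for another.
with ThreadPoolExecutor(max_workers=4) as pool:
    pooled = list(
        pool.map(
            lambda wb: node_output(wb[0], inputs, wb[1]),
            zip(layer_weights, layer_biases),
        )
    )

print(sequential == pooled)
```

The dependency that does exist is between layers: a layer needs the outputs of the previous one, so the parallelism is within each layer.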

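As the linked case studies show, frameworks and development tools for deep learning have matured, and it is becoming less necessary to write CUDA code directly. In this series, we use simple sample code published by NVIDIA, in which the CUDA code can be inspected directly, to introduce how GPU programming works.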

Running the sample code

Let's start by actually running some sample code.

According to the linked page, CUDA sample code is available for both Windows and Linux.

Here we show how to run the Linux version of the demos on a Jetson Xavier NX Developer Kit.

Following the linked procedure, create a bootable SD card and boot the Jetson.

(The runs shown here use JetPack 4.5.1.)

CUDA is already installed on the bootable SD card, so you can build and run a sample directly in the folder of the sample code you want to try.

A few example runs follow.

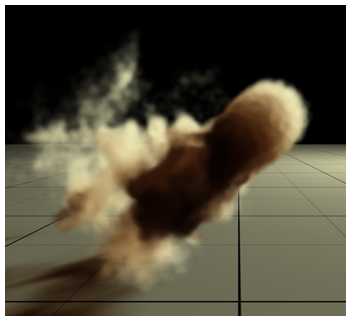

Example 1 (smokeParticles)

$ cp -r /usr/local/cuda-10.2/samples/ ~/cuda_samples

$ cd ~/cuda_samples/5_Simulations/smokeParticles

$ make

$ ./smokeParticles

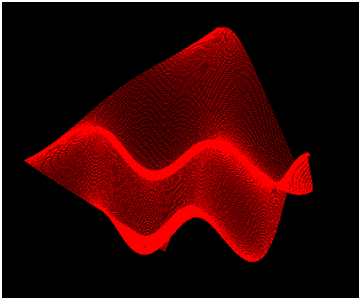

Example 2 (simpleGL)

$ cd ~/cuda_samples/2_Graphics/simpleGL

$ make

$ ./simpleGL

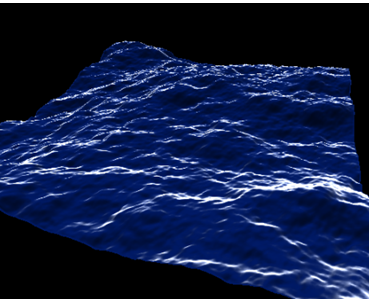

Example 3 (oceanFFT)

$ cd ~/cuda_samples/5_Simulations/oceanFFT

$ make

$ ./oceanFFT

Example 4 (postProcessGL)

$ cd ~/cuda_samples/3_Imaging/postProcessGL

$ make

$ ./postProcessGL

Next time: a look inside the sample code

In this article, we covered GPU Computing, how parallel processing works, and how to get the CUDA sample code running. We hope you found it useful.

Next time, we will walk through the source of the sample code to introduce how programming with CUDA works.