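About this blog

I have worked as an FPGA technical support engineer for Lattice products for nearly 15 years, and I have now been put in charge of supporting the AI solution that Lattice suddenly announced.

Of course, I have no prior experience with AI work at all, so I am studying from zero to get to the point where I can handle the job. Getting from 0 to 1 is taking a while, though...

In this blog I will share the process of implementing AI functions on an FPGA, in the hope that it serves as a guide for anyone considering the same thing.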

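Can embedded AI development really be done?

Lattice's AI solution apparently uses deep learning, one branch of AI, and as an AI beginner my reaction was "What on earth is that?"

I was suddenly asked to support the AI solution, but I had concerns on several fronts.

First concern.

When people think of AI, they usually picture high-spec CPUs and GPUs burning through power while performing extremely complex computations.

The embedded world, by contrast, often has very limited resources of every kind, and power is no exception.

So I doubted whether using AI in an embedded system is really feasible.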

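Second concern.

If you search the web for AI development, most of what comes up involves coding something difficult-looking in Python on a Linux OS.

TensorFlow, NumPy, and so on... and it looks like you then have to customize all of that for what you want to do.

I worried that this is something only a design house specializing in AI could do.

For the record, I can read Verilog and C to some extent, but I do not know Python well.

I have also only dabbled in development on Linux.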

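Third concern.

To use deep learning, you apparently need to train a neural network model.

A neural network model (NN model for short) makes various decisions somewhat like a human brain does, but to improve its performance you need to prepare training data (labeled data) and feed it to the NN model so that it can learn.

For image recognition, however, the number of training images needed is apparently in the thousands or tens of thousands, and there is no way I can prepare that many myself!

I could go on forever, but can embedded AI development really be done? The worries never end.

Even so, this time I actually got as far as embedding processing that detects a person's upper body and draws a green box around it, as shown below, so I will write up the process here.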

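There seem to be various reference designs available.

There is no way I could develop everything from scratch, so I first looked for a design to use as a starting point.

I heard that Lattice provides free reference designs along with the solution, so I decided to check what they contain.

On the Lattice website there are indeed a variety of AI reference designs.

I will use one of these reference designs as the basis for my implementation.

I chose the Object Counting (people counting) reference design as the subject.

Using Lattice's Voice & Vision Machine Learning Board (VVML Board for short) evaluation board, I will implement object detection (person detection) on a CrossLink-NX FPGA.

The VVML Board carries a Himax image sensor (HM0360), which is connected to the FPGA.

The FPGA takes the video data that arrives from this sensor in real time, uses AI processing to decide whether a person is in the frame, and goes as far as drawing a rectangular box around each detected person.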

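Checking the person detection in action

I wanted to get going right away, but the reference design user guide turned out to be 78 pages long...

CrossLink-NX Object Counting Reference Design user guide

CrossLink-NX Object Counting Reference Design project files

I was close to giving up from the start, but then I found that, in addition to the reference design, prebuilt demo files are also available.

Digging into the reference design in detail right away would be hard going, so I will use the demo files for this reference design to see the final behavior first and build up some motivation for the implementation. (Note: the demo files are also free, of course.)

As shown in the figure below, checking the demo only takes writing the provided files to the evaluation board and then viewing the image with a free capture tool, so it is very easy.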

1. Downloading the demo files

I went ahead and downloaded the demo files.

The demo files are distributed as a zip archive. Extracting it yielded the following two files:

・ An FPGA configuration file generated with Lattice's FPGA design tool "Radiant"

(CrossLink-NX-Human-Counting-Bitstream.bit)

・ A file containing the AI neural network model

(crosslink-mv2-dual-core-8k.mcs)

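2. Writing the demo files to the board

A user guide for the demo is also provided.

According to it, you can run the demo by writing these two files to the SPI Flash on the VVML Board with "Radiant Programmer", the programming tool bundled with Radiant.

The demo user guide is here

The FPGA programming tool "Radiant Programmer" is here

Connect the VVML Board to the PC with a micro USB Type-B cable.

The VVML Board has two USB connectors: a micro USB Type-B connector (J2) and a larger micro USB Type-B SuperSpeed connector (J8). Use J2 for programming. Once the cable is connected, the PC supplies power to the board.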

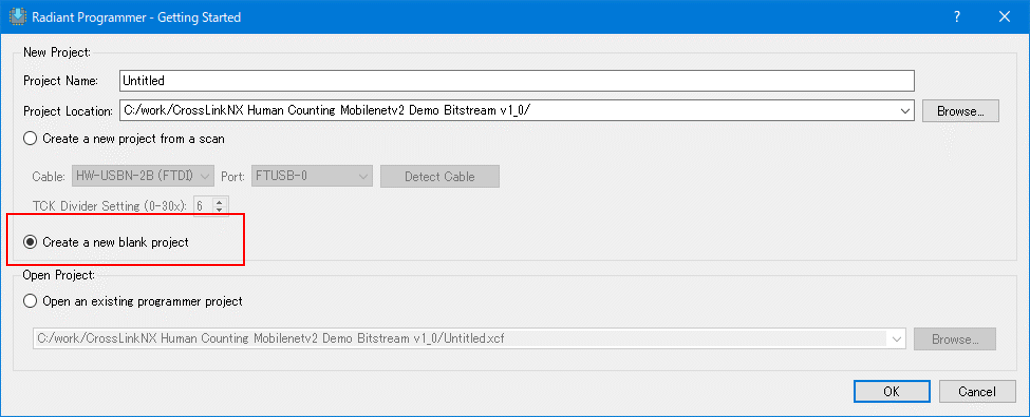

Launch Radiant Programmer from the Windows Start menu.

Select "Create a new blank project" and click OK.

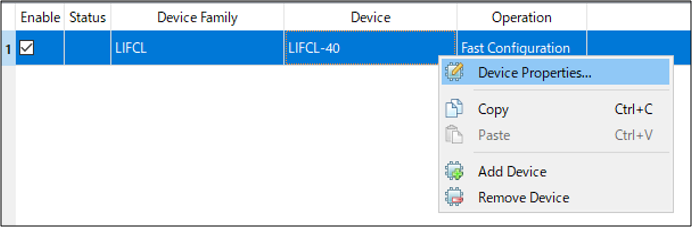

When the Programmer main window opens, select "LIFCL" (the FPGA part number) in the Device Family field.

The VVML Board carries the 40k logic cell CrossLink-NX device, so select "LIFCL-40" in the Device field.

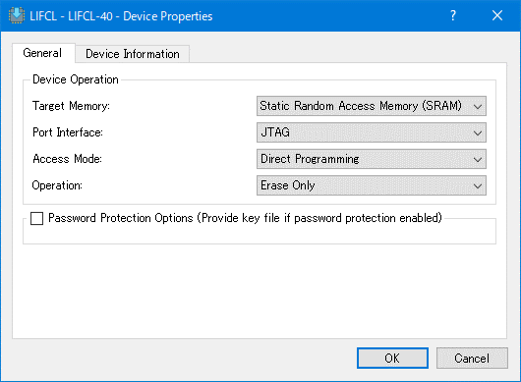

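Before programming, erase the FPGA's SRAM area once.

Right-click and select "Device Properties" to open the Device Properties window.

To erase the SRAM area, configure the settings as shown below and click OK.

Click the Program button on the toolbar to run the erase.

When the erase completes, "Operation: successful" appears in the log window at the bottom of the screen.

Next, write the configuration data and the network model file.

Each is written to its designated address. Start with the FPGA configuration data (CrossLink-NX-Human-Counting-Bitstream.bit).

Open the Device Properties window in the same way and configure it as shown in the figure below.

The FPGA configuration data is written to addresses 0x00000000 to 0x00100000.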

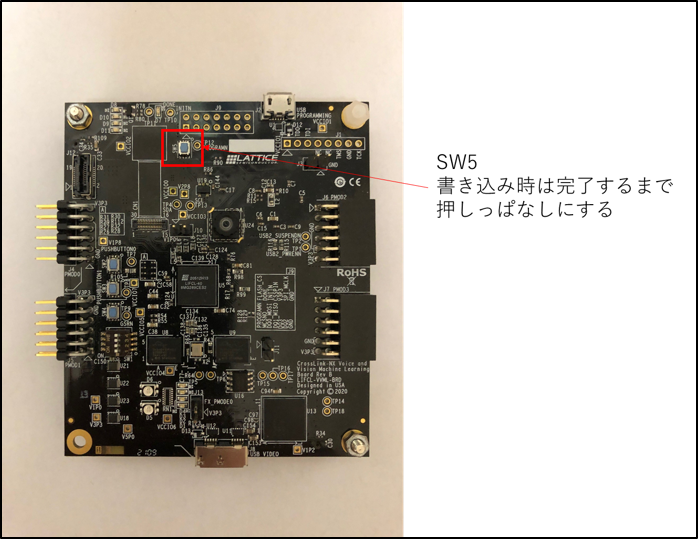

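Click the "Program" button on the toolbar to write the data.

One caveat: you need to press and hold switch SW5 on the board before clicking the Program button, and keep holding it until programming finishes.

(The demo user guide says the same.)

Once the configuration data has been written, write the network model data (crosslink-mv2-dual-core-8k.mcs) to addresses 0x00300000 to 0x00400000 in the same way.

SW5 must be held down for this step as well.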
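
Since the two files go to different SPI Flash address ranges, it can help to write the layout down and sanity-check it before programming. The following is only a minimal Python sketch under my own assumptions: the class `FlashRegion` and the helper `describe` are hypothetical names, not part of any Lattice tool, and I treat the end address of each range as exclusive, which the user guide quoted above does not state either way.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FlashRegion:
    """One SPI Flash address range (hypothetical helper, not a Lattice API)."""
    name: str
    start: int  # start address as quoted in this post
    end: int    # end address as quoted in this post (assumed exclusive)

    @property
    def size(self) -> int:
        # Number of bytes covered by the range
        return self.end - self.start

    def overlaps(self, other: "FlashRegion") -> bool:
        # Two half-open ranges overlap when each starts before the other ends
        return self.start < other.end and other.start < self.end

    def fits(self, payload_bytes: int) -> bool:
        # payload_bytes is the binary size written to flash, which may differ
        # from the size of the file on disk depending on the file format
        return 0 <= payload_bytes <= self.size


# Address ranges taken from the programming steps above
BITSTREAM = FlashRegion("CrossLink-NX-Human-Counting-Bitstream.bit",
                        0x00000000, 0x00100000)
NN_MODEL = FlashRegion("crosslink-mv2-dual-core-8k.mcs",
                       0x00300000, 0x00400000)


def describe(region: FlashRegion) -> str:
    # Human-readable summary of one region
    return (f"{region.name}: 0x{region.start:08X}-0x{region.end:08X} "
            f"({region.size // 1024} KiB)")


if __name__ == "__main__":
    for region in (BITSTREAM, NN_MODEL):
        print(describe(region))
    print("regions overlap:", BITSTREAM.overlaps(NN_MODEL))
```

Under that assumption each range is 1 MiB and the two do not overlap, so writing the model file cannot clobber the bitstream.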

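3. Running the demo: "Person detection works!"

Once both files have been written, unplug the micro USB cable and connect the PC to the micro USB Type-B SuperSpeed connector (J8) instead.

The image output from the FPGA is sent to the PC over USB through the USB microcontroller on the board (Cypress FX3), so you can view it with a capture tool.

When the cable is connected, the FX3 shows up as a camera in Windows Device Manager.

I used AMCAP as a free capture tool. (Note: the Camera app bundled with Windows 10 apparently works too.)

After installing and launching AMCAP, select FX3 from the Devices menu to see the demo running.

The area around each person's face is tracked with a green rectangular box, and the number of people detected is shown as a number at the bottom left of the screen.

As I showed at the start of the article, it tracks at quite a good speed! It is pretty fun.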

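Checking the power consumption

Lattice claims that its AI solution is very low-power and compact, making it well suited to edge computing.

It has in fact been adopted in notebook PCs overseas, where it detects whether a person is present to improve battery efficiency and, for security purposes, detects someone looking over the user's shoulder from behind.

In other words, the solution is low-power enough to be built into a notebook PC.

So how much power does this particular design consume? I decided to find out.

I checked the schematics and other documents with the idea of measuring the power on the actual evaluation board, but unfortunately direct measurement looked rather difficult.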

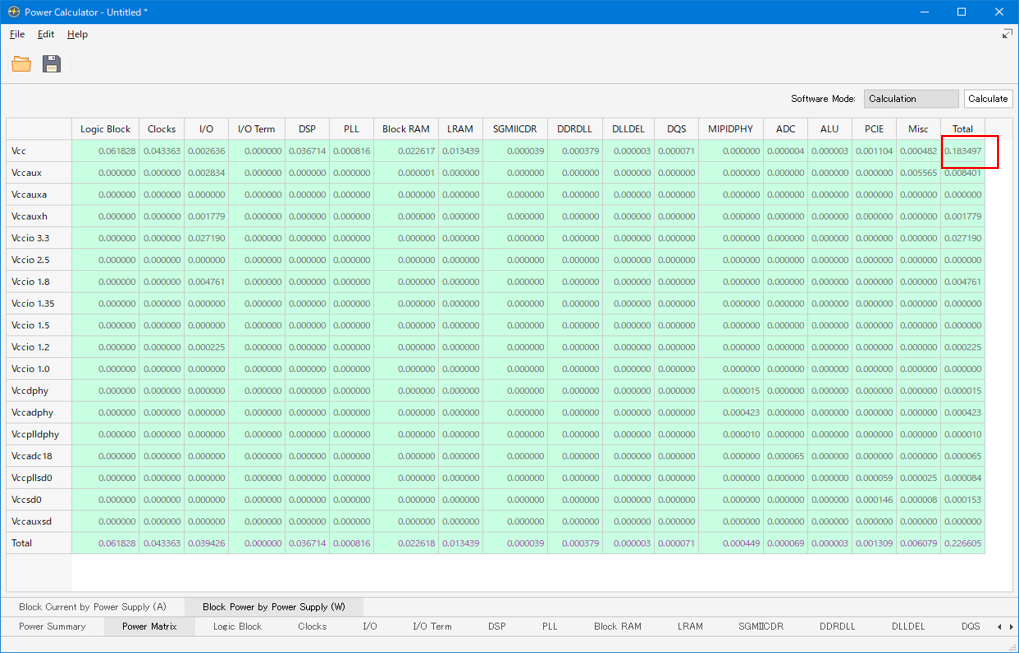

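Instead, I estimated the power consumption of the implemented design with "Power Calculator", the power estimation tool bundled with Lattice's FPGA design tool "Radiant".

After loading the reference design project in Radiant and running it through place and route, I launched Power Calculator and checked the estimated power for each supply rail.

Looking at the CrossLink-NX core supply (VCC)...

About 200 mW!

Even the total power consumption is only about 230 mW. (Estimated at an ambient temperature of 25°C and an activity factor (AF) of 20%.)

That is on the same level as single-board microcontrollers such as the Raspberry Pi Pico or Arduino Nano.

According to Lattice, this design performs its AI processing on 224x224 pixel images and can process about 10 frames per second.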
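
To put those two figures together, here is a rough back-of-the-envelope calculation in Python. It is only a sketch based on the estimates quoted above (about 230 mW total, about 200 mW on VCC, 224x224 pixels, about 10 frames per second); the function names are my own, and it assumes the power draw is constant while frames are being processed.

```python
def energy_per_frame_mj(power_mw: float, fps: float) -> float:
    """Energy spent per processed frame, in millijoules.

    mW divided by frames per second gives mJ per frame, assuming the
    power draw stays constant while processing.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    return power_mw / fps


def pixels_per_second(width: int, height: int, fps: float) -> float:
    """Input pixels consumed by the AI processing each second."""
    return width * height * fps


if __name__ == "__main__":
    # Figures quoted in this post (Power Calculator estimate and Lattice's numbers)
    total_mw, core_mw = 230.0, 200.0
    width, height, fps = 224, 224, 10.0

    print("total energy per frame [mJ]:", energy_per_frame_mj(total_mw, fps))
    print("core energy per frame [mJ]:", energy_per_frame_mj(core_mw, fps))
    print("input pixels per second:", pixels_per_second(width, height, fps))
```

With these numbers, each frame costs roughly 23 mJ in total (about 20 mJ of it on the core supply), for an input rate of about 500,000 pixels per second.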

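Among single-board microcontrollers in the same power range, I have not yet heard of one that can run image-based AI processing at this speed.

Looking at other CrossLink-NX reference designs on the Lattice website, there are also announcements of even faster processing on larger images such as QVGA and VGA.

It does seem to be a very interesting and distinctive solution.

I think it fits squarely into "endpoint AI", the part of edge AI that sits even closer to the edge.

I am getting a strong sense that it could be put to use in battery-powered mobile products and IoT products.

I really do want to learn to use it well.

Starting next time, I will look in concrete terms at how to go about the development.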

Contact

If you have questions about the evaluation board or sample designs, or topics you would like this blog to cover, please feel free to get in touch!